FlightGear high-level architecture support

| This article or section contains out-of-date information

Please help improve this article by updating it. There may be additional information on the talk page. |

Objective

| what is very near is that we can do the physics in parallel with the cull and draw stage on a different cpu. That will utilize multi core machines in this current generation very well. Also osg allows us to feed multiple views from within one single application where each view runs its cull and draw on its own cpu. Osg provides much more here, but for the first cut this describes it well enough. Given that in the near future only the update step does communication between the physics part and the IG part we have many places where the physics pipeline stage feeds the IG pipeline stage identified, I can see a good chance to do that update step with data feed over a network interface. — Mathias (May 14th, 2007). Re: [Flightgear-devel] More ideas on dogfighting.

|

Also see Developing with HLA.

This article documents the ongoing HLA effort, to help people better understand how it affects a number of related FlightGear efforts and projects (particularly those related to improved modularization, concurrency support and the multiplayer/AI system). FlightGear's related limitations have been discussed extensively over the years; for a comprehensive summary, please see Modularizing, parallelizing and distributing FlightGear.

Although the FlightGear design is fairly modular, it is provided as a single binary. Anyone who wants to create a new I/O module must patch the FlightGear sources and recompile the binary from scratch. This may discourage those who want to use FlightGear as a tool and extend it in some way. Moreover, it is not always possible to include all functions in a single binary, since some functions may be mutually exclusive.

The plan is to use HLA/RTI as an aid to parallelize FlightGear on a local machine. The basic advantage is that each federate is programmed strictly single-threaded: all thread synchronization is handled by, and hidden inside, the RTI library. The trick is that each of these threads must be written so that the same component can alternatively be compiled into a standalone binary and run in a networked RTI, as at the LinuxTag booth for example.

Basically, better HLA support will make it possible for FlightGear to be split into "components" (each running in a dedicated process or in a separate thread within the main FlightGear process). This makes better use of multi-core architectures (SMP) and decouples the flight simulation from the "viewer" part (i.e. visualization), so that steady, reliable frame rates can be achieved (see FGViewer). It also formalizes the concept of master/slave instances and of synchronizing state among multiple instances (think AI traffic/weather).

| I will provide a different renderer sometime in the future where I aim to provide better isolation of this kind of renderer options so that people with very different aims can probably coexist better.[1] — Mathias Fröhlich

|

| supporting the option of different viewer / rasterizer front-ends is by far the best solution here, since there are different requirements for different use-cases and target users. Plenty of people are interested in the kind of effects Thorsten's uber-shader and atmospheric model can provide, but plenty of others care more about a solid 60Hz (or even higher), and still others would prefer a solution that works with the fixed-function pipeline. And then there's Rembrandt as yet another rendering configuration. My plan in parallel with / after 2.12 is to work with Mathias' HLA code to make this separation possible, unless of course he beats me to it :)[2] — James Turner

|

| There's a larger issue here, that 'soon' (likely during the summer) I want to start restructuring the codebase into multiple processes, so we can support different rendering architectures (and use multiple CPUs) better. That's Mathias Fröhlich's HLA/RTI work, and indeed he has done the recent work on extending fgviewer to test changes to the current terrain system. [3] — James Turner

|

| concerning the larger issue of different rendering pipelines / approaches, my opinion is, and remains, that the long-term solution is separate viewer codebases - while a plethora would be bad, we would already benefit from a 'fixed-function, no shaders' renderer codebase distinct from a Rembrandt renderer and modern, forward-rendering OpenGL 3.x pipeline. This needs the viewer to be cleanly split out from the simulation backend, via HLA, which is exactly what Mathias (and soon, myself) are working towards, but slowly.[4] — James Turner

|

- [1] Mathias Fröhlich (Sun, 27 Jan 2013 08:45:22 -0800). Color-shifts for textures.

- [2] James Turner (Sun, 27 Jan 2013 09:35:43 -0800). Color-shifts for textures.

- [3] James Turner (Mon, 11 Mar 2013 15:24:07 -0700). Generating ground textures on the fly?.

- [4] James Turner (Thu, 25 Apr 2013 08:09:08 -0700). Atmospheric Light Scattering.

Status & News

| I've successfully replaced the HLA implemented in SimGear with SimKit (the name of Mathias' abstracting layer). This has allowed me to replace ~ 17000 lines of code in simgear/flightgear with 500 in flightgear alone. I'll need some help with the cmake plumbing before it can be checked in. I've also successfully joined an OpenRTI HLA federation, and received updates from an external source, which are displayed as an MP aircraft in the property tree. — Stuart Buchanan (Nov 16th, 2015). Re: [Flightgear-devel] HLA developments.

|

| I've now got an external HLA Federate being displayed in FlightGear. The implementation right now is very basic - I have a KC-135 circling above LOWI at 10kts and 500ft AGL - but it's enough to build on. The next steps I'm planning are as follows: - Flesh out the Viewer Federate implementation, possibly to include mapping of HLA data to properties (not sure exactly how to do this yet). - Publish the FlightGear aircraft instance position to the RTI so it can be picked up by other viewers. - Create a Multiplayer Proxy as a Federate that proxies between the MP network and the RTI. This will run in a separate thread or as a separate executable. - Split out the traffic Manager in a similar way. This is obviously focussing on the viewer aspect, and doesn't address splitting out the FDM or control elements. — Stuart Buchanan (Nov 17th, 2015). Re: [Flightgear-devel] HLA developments.

|

| I'm planning to use an IEEE 1516 standard RTI, of which OpenRTI is a conveniently free implementation. AFAICT this is one of the main standards for distributed simulation communication (the other being DIS). I think that will address the communications side of the equation, and OpenRTI can work both as a local RTI and over a network. It's also got a robust logical time implementation, and the ability to clock the simulation. — Stuart Buchanan (Nov 19th, 2015). Re: [Flightgear-devel] HLA developments.

|

| In terms of integration with the property tree, I'm thinking that in the short term all the different components that we split out into separate threads or executables will simply use their own properties trees, and use the RTI to reflect the particular (minimal) data that needs to be passed between components. — Stuart Buchanan (Nov 19th, 2015). Re: [Flightgear-devel] HLA developments.

|

| There is a lot of private code pushed into the repository, and at some point this tied together will make a lot of sense I think. But up to now just a lot of loose ends. What is there is a sketch of an AI module working on top of hla objects, this sketch also shows how you can roll on ground - at least for solid ground. Also fgviewer gained a lot of unfinished hla code that enables fgviewer to sit on a hla configured view - more or less. At least a python script that I regularly use for development providing this works fine. Together with fgviewer there is also some work done on the scenegraph that is used there. fgviewer is since some time just able to display a whole world paged model that has the stg/btg files in the leafs. With this work there is also some level of detail work done in the leaf nodes that is used by the usual fgfs scene. On my setups here these changes accounted for some performance gains which are also partly visible with fgfs. So not sure if this is worth to be mentioned as there is nothing finally useful for an end user there. — Mathias Fröhlich (Jan 20th, 2013). Re: [Flightgear-devel] 2.10.0 Changelog - help required.

|

SimGear already has an RTI abstraction library that should help to implement HLA federates. Both SimGear and FlightGear need to be configured and rebuilt with -DENABLE_RTI=ON (after first installing OpenRTI).

FlightGear git already has an alternative multiplayer implementation in place that uses HLA, but it is intended only as a proof of concept. The next step is probably to provide a separate HLA federate that runs the AI traffic and feeds it into an RTI federation (see the fgai section below).

Also see:

- http://www.mail-archive.com/flightgear-devel@lists.sourceforge.net/msg32045.html

- http://www.mail-archive.com/search?q=fgai&l=flightgear-devel%40lists.sourceforge.net

- FlightGear commit d79b238

- https://gitlab.com/flightgear/flightgear/blob/next/utils/fgai

Also see FlightGear CIGI Support (Common Image Generator Interface).

Background

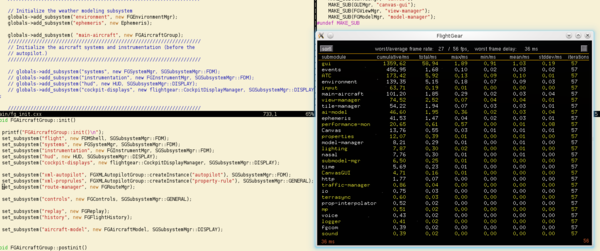

When you start up FlightGear on an 8- to 12-core machine, you'll find that most cores are barely utilized. This is in stark contrast to X-Plane, for example. Many computations run as part of the FG main loop that don't strictly belong there and that could run outside it, either in a separate thread or in a dedicated process.

We have been adding more and more threading to FG because of this, but we are also seeing more and more segfaults related to multithreaded code. Just look at some of the recently reported bugs (issue tracker): many of them are directly related to concurrency issues, with multiple threads doing things in a way that isn't thread-safe.

Fixing this requires explicit re-architecting; it cannot simply be delegated to OSG on the assumption that it will magically work. OSG parallelization is specifically about rendering and depends on following certain coding patterns and protocols; it will not directly help otherwise. Even if you could run 99% of the rendering work asynchronously, that would have no positive effect, because it is our main loop that is becoming the showstopper here.

The HLA approach is different and more promising in that a process-based parallelization effort actually forces people to think about the problem at hand. Also, using an existing industry standard (such as HLA or CIGI) is going to make FlightGear increasingly relevant and attractive to industry leaders.

The idea is to divide parts of the FlightGear architecture such that multiple simulations collaborate and can be run in different threads or even processes. Basically, you would not have a single monolithic FG application with a conventional main loop, but rather a handful of modules (FDM, AI, weather/environment, scripting) that get to communicate with each other by using a central managing component, called a "Real-Time Infrastructure".

Normally, a process-based modularization strategy would inevitably mean that modules need to be run as separate processes, but Mathias mentioned a clever scheme to directly run certain "component subsystems" in worker threads, which would then be a part of the main FG process, while still using HLA and a central RTI to handle the communications across all HLA federates.

For professional users, it is extremely important to guarantee reliable frame rates, ideally separating the visualization from the underlying computation, so that separate computers can be used for different systems [2].

OSG Threading vs. HLA

Distributed multi-process configurations are in fact exactly the way professional simulators work, too: They are distributed and communicate using sockets. Mathias' ongoing HLA work is going to make that increasingly feasible.

OSG threading is neat and dandy, but obviously it is only directly useful for rendering related parallelization and related work. On the other hand, none of this comes for free. OSG's threading support really is impressive, but all those OSG threads must be kept busy. Properly. That's exactly the work that FlightGear is responsible for in its main loop.

OSG's multithreading support doesn't just come "automagically", just because FlightGear uses OSG. The multithreading support in OSG must be explicitly supported by following certain OSG coding patterns and coding protocols. Otherwise, you won't benefit at all.

OSG is extremely powerful and good at parallelizing things. You cannot necessarily see that when running FlightGear today, but other OSG-based software makes really good use of it.

Correct multithreading is incredibly hard to get right in any non-trivial application, especially in complex systems like FlightGear. This holds even more true because FlightGear is implemented in C and C++, which offer little language-level support for concurrency and parallelism.

Before C11 and C++11, the C family of languages had no standard threading support at all, and everything had to be built from library functions and classes such as those in Boost. The newer standards add threads, mutexes, atomics and a memory model, but these remain low-level building blocks (this is less so in Java).

Still, coding a threaded design in C or C++ with more than 3-5 threads is incredibly tricky to get right (this will also be a problem once more threading is used in Nasal code, because Nasal also lacks language-level threading support).

There are languages far better suited for multithreaded programming, such as Ada - because they have language-level primitives to create "tasks" and "protected types".

What is HLA/RTI

HLA/RTI is a message distribution API used for distributed and parallel real-time simulation systems. There are a few API variants; the two newest are IEEE standards known as rti1516 and rti1516e. The API is used in some open-source simulation systems and in plenty of commercial ones. It might look difficult at first, but it provides a carefully designed set of communication methods that avoid communication latencies - the worst problem in real-time simulations - for a distributed simulation system.

The API is standardized by the IEEE and, depending on the RTI implementation, is available with different language bindings such as C++, Java and Ada. Additionally, there are implementations built on top of those for MATLAB, Python, Fortran and probably more. This should also extend our ability to communicate with components using tools and languages that we cannot currently handle in any sensible way.

The nice thing is that the IPC is hidden behind something that is also able to distribute this across multiple machines. A local network connection is mostly sufficient, but doing the same over an InfiniBand connection is possible too. Experimenting with shared memory did not bring notable improvements over a system-local network connection. At least not on Linux...

In any case I think this could be fast enough to do this stuff.

Also, this is based on a standard that will probably enable us to be a little better connected in the end. At least this is a slight hope of mine.

While the interface provides more than you need today, I think the major benefit is that it shields you from a lot of synchronization work, so that you can still program your local component in a single-threaded way. The coupling of components *can* be tight enough to give you deterministic time slicing for some of the components. Say you want to simulate glider towing: you can do that with each FDM running in its own process while still deterministically exchanging simulation results at the FDM's rate and at time step boundaries. The same goes for components within the aircraft, which I consider a possible use case for your kind of application. In contrast, you can still run the viewers asynchronously to the simulation core, hopefully providing a stable 60 frames per second without being disturbed by the synchronization needs of specific components.
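The lockstep coupling described for glider towing can be illustrated with a minimal Python sketch. It is not FlightGear or OpenRTI code: the `PointMassFdm` class, the spring-like `rope_force` function and all numeric values are assumptions chosen purely for illustration. It only shows the exchange pattern, in which both models read each other's state at a time step boundary and then advance independently, so the result does not depend on scheduling.

```python
from dataclasses import dataclass


@dataclass
class PointMassFdm:
    """Hypothetical one-dimensional FDM stand-in (not a FlightGear class)."""
    mass: float      # kg
    position: float  # m along the tow direction
    velocity: float  # m/s

    def step(self, dt, force):
        # Semi-implicit Euler: update velocity first, then position.
        self.velocity += force / self.mass * dt
        self.position += self.velocity * dt


def rope_force(tug, glider, rest_length=50.0, stiffness=100.0):
    """Tension of an idealized rope that only pulls when stretched."""
    stretch = (tug.position - glider.position) - rest_length
    return stiffness * max(stretch, 0.0)


def simulate(steps, dt):
    tug = PointMassFdm(mass=800.0, position=60.0, velocity=30.0)
    glider = PointMassFdm(mass=400.0, position=0.0, velocity=0.0)
    for _ in range(steps):
        # Exchange at the step boundary: the tension is computed once from
        # the state both models had at the same simulation time ...
        tension = rope_force(tug, glider)
        # ... and only then does each model advance on its own.
        tug.step(dt, -tension)
        glider.step(dt, +tension)
    return tug, glider
```

In a real federation the two `step` calls would run in separate federates, and the RTI's time management would play the role of the loop, granting each federate its next time step only after the exchanged data is available.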

So, you might have an idea how to do that with IPC directly, and trust me, I have considered this at some time. But what this standard provides is driven by exactly those problems that need to be solved once you dig into this kind of implementation. So one of the benefits is that you gain an encapsulated communication library that does what you need. This library can be tested independently of an application beast like FlightGear. And this is IMO a huge benefit.

Benefits

The RTI C++ interface is defined in a way that you do not need to recompile anything. Everything is done with pure virtual classes and factories to get them. So however this is implemented in the shared object/DLL, you should just need to get a 'standard' implementation dependent RTI header and compile with that. So you should in theory be able to change the RTI library of an already compiled binary. If a particular RTI implementation does not follow this rule, you need to compile FlightGear explicitly for that particular library.

The spatial indices implemented in the RTI regions will be a huge benefit, since you will only receive the messages that are relevant near the region of your interest.
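To make the idea of region-based filtering concrete, here is a minimal Python sketch. It does not use the RTI API: `Box` and `RegionRouter` are hypothetical names, the matching is a plain linear scan rather than a real spatial index, and longitude wrap-around at the antimeridian is ignored. It only shows the principle that an update is delivered solely to subscribers whose region of interest overlaps it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Axis-aligned latitude/longitude box in degrees (illustrative only)."""
    south: float
    west: float
    north: float
    east: float

    def overlaps(self, other):
        return (self.south <= other.north and other.south <= self.north
                and self.west <= other.east and other.west <= self.east)


class RegionRouter:
    """Hypothetical stand-in for RTI region matching (linear scan, no index)."""

    def __init__(self):
        self._subscriptions = {}

    def subscribe(self, federate, box):
        self._subscriptions[federate] = box

    def route(self, update_box):
        """Return the federates whose region of interest overlaps the update."""
        return sorted(name for name, box in self._subscriptions.items()
                      if box.overlaps(update_box))


router = RegionRouter()
router.subscribe("viewer_lowi", Box(46.5, 10.5, 48.0, 12.5))      # around Innsbruck
router.subscribe("viewer_ksfo", Box(37.0, -123.0, 38.5, -121.5))  # around San Francisco

traffic_near_lowi = Box(47.2, 11.3, 47.3, 11.4)
print(router.route(traffic_near_lowi))  # ['viewer_lowi']
```

The viewer near San Francisco never sees the update, which is the saving the paragraph above refers to.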

Also the way an RTI provides time management and time stamped messages, is beneficial. This enables hard synchronized HLA federates, exchanging data at relatively high rates with the least possible communication latency.

Regarding the ongoing threading discussion and the number of cores an average CPU has today, an RTI will provide a way to implement each component of the simulation in a single-threaded way, living in its own process.

This can be done while still having a deterministic and tight coupling with other federates simulating in the same federation. This kind of coupling is required for example for a good simulation of glider towing.

The communications infrastructure based on an RTI provides huge potential to split the simulation into components that run on different cores or even machines while still being tightly coupled.

Major benefits would be to move some code, such as the AI code, out of the main loop - maybe even into a separate process/thread. Also, running a single instance of that AI traffic for installations like the one we used to have at the LinuxTag booth would be a major advantage.

Anyway, I also consider an RTI a possible multiplayer replacement, even if we would handle local RTI federations differently from internet-wide ones. But yes, an RTI provides almost all we would need to get that right.

What is to be committed in this step is an alternative to the multiplayer protocol which does not yet cover everything that the multiplayer protocol can do today. But there is a proof of concept and some playground to start with. The current implementation in FlightGear is flexible enough to adapt to the needs of very different simulation systems. The default object model is adapted to the needs of FlightGear, but the object model can be changed at startup time, for example to the AviationSim object model.

So, this should not only provide a way to distribute FlightGear's simulation systems across CPUs or maybe machines; it should also provide a way to increase the connectivity of FlightGear to other simulation systems.

Also included is an RTI variant abstraction layer inside SimGear to help with some common tasks that are often needed in an HLA/RTI implementation. One of the design goals of this layer was to provide a framework where interaction with the RTI API adds as little overhead as possible.

In particular, this means that indices (fast mappings) are used wherever possible and allocations are minimized.

Currently the only implemented RTI variant is the old nonstandard HLA-1.3 API, which was the only API I had available from an open-source project at the time I began that work. But it should be straightforward to extend it to the two IEEE standard RTI variants.

I personally think that there are still too many opportunities to break stuff. By break I do not mean that it does not run or compile. I mean that people put something together that works for their private needs and do not care further, or that, if you knew the bigger picture, it would already be very clear that it cannot scale for the kind of use that will most probably happen in the not so distant future. I can really observe this in the scene and model area. I have seen incredibly fast OSG applications with stable frame rates and nifty-looking models. But the way we use OSG and put together models leaves OSG, and below that the driver, only little chance to be fast, which leaves little headroom for sensible further development.

Getting back to components: latencies are a critical part of running in multiple threads/applications. This is not a particular problem of HLA; it is a generic problem when running real-time applications in parallel. I know a lot of really smart people who understand complex environments very well but have no clue about the problems introduced by round trips. Having seen this, I started the HLA work, since it provides a framework that supports and even encourages a programming model able to hide latencies as well as possible. Still, there is a chance of using this API in a way that really hurts in this corner. And this is the really bad thing I want to avoid: if you are the first component that does not get it right, you might notice little to nothing, especially if you are running on extremely fast hardware. But when the next problem of this kind is introduced, it starts to hurt more and more. And most people do not have any chance to see what happens and why.

Use Cases

Weather Simulation

We can have a completely independent weather module using HLA that runs in its own process/thread. At that point you might be able to do more sophisticated things. Maybe it will then be possible to do a full CFD for subparts of the scene. That might be a good thing for a glider scene where you might want more detailed fluid behavior ...

A weather module running in a different process/thread/machine could compute positions for clouds that are displayed consistently on each attached viewer. Such a module would be exchangeable: the simple version just interpolates METARs like today, but more sophisticated versions might run a local weather simulation to get good results for thermals in some small area. The same goes for every component you can think of splitting out.

AI Traffic

A simple AI model does just what it does today. But more sophisticated modules might contain their own FDM, so that these machines really live in the same fluid that the weather module provides data for. Note that the RTI API already provides a subscriber model that ensures that you don't feed data to participants that don't need it. Maybe a radar controller screen can be attached to see the machines in this world. But surely that radar screen is not interested in flap animations for the aircraft ...
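The subscriber model can be sketched in a few lines of Python. This is an illustration only, not the RTI API: `AttributeBroker`, the federate names and the attribute names are all hypothetical. Each subscriber declares which attributes it cares about, and an update is split so that, for instance, a radar screen never receives flap positions.

```python
class AttributeBroker:
    """Hypothetical stand-in for RTI attribute subscriptions."""

    def __init__(self):
        self._interests = {}

    def subscribe(self, federate, attributes):
        self._interests[federate] = set(attributes)

    def update(self, changes):
        """Split one attribute update into per-subscriber deliveries."""
        deliveries = {}
        for federate, wanted in self._interests.items():
            relevant = {k: v for k, v in changes.items() if k in wanted}
            if relevant:  # nothing is sent if no subscribed attribute changed
                deliveries[federate] = relevant
        return deliveries


broker = AttributeBroker()
broker.subscribe("radar", ["position", "callsign"])
broker.subscribe("viewer", ["position", "callsign", "flaps"])

# A flap-only change reaches the viewer but not the radar screen.
flap_only = broker.update({"flaps": 0.5})
# A combined change is trimmed per subscriber.
moved = broker.update({"position": (47.26, 11.34, 1900.0), "flaps": 1.0})
```

Here `flap_only` contains an entry for the viewer only, and the radar's share of `moved` carries the position without the flap value.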

For over a decade, we've been talking about moving the AI traffic subsystem out of the fgfs main loop, not just to free computing resources, but also to make it easier to synchronize AI traffic with multiple instances, i.e. using multiplayer or master/slave setups with possibly dozens of computers (see Decoupling the AI Traffic System) and the link at [3]. This requirement has been also extensively discussed over at the fgms project, and in the Distributed Interactive Simulation article.

The fgai proof of concept is just a brief sketch of what could be done in that corner. It already contains most of what is needed in a complex infrastructure. You can inject vehicles and you can now get ground queries. For a long time I have also been running a brief Python script here which does about the same as fgai. While a scripting language is not fast enough to drive a lot of AI traffic, it is rather nice for handling just a few such objects without much worry.

The basic idea of having an external component that drives AI traffic, or at least a thread that does the same, is a good one I think. For the long term I do not care about the multiplayer protocol, since it has severe limitations that would require a solution very similar to the one the OpenRTI implementation provides. For the short term, I am not sure if we are already at the point where I can just switch over. Hence this bridge component, bridging the MP protocol with HLA. For the ADS-B traffic, packing this into a module that you can attach to your simulation instead of an artificial traffic component is a nice addition. Having

Whatever happens here, if you are really driving a lot of AI models, it requires a huge amount of thought to make that scalable. Specific models, as well as specific parts of them, need to be updated in a sparse and intelligent way to make this work. This includes the basic part of the fgai implementation, which aims to have steady and differentiable movements. This is not made much use of at this time, but the classes that I called something like *Physics* in there are mostly there to make sure that all components can see, and even extrapolate, the same steady movements as the originating component. There is also infrastructure there to play some tricks with timing so that nobody needs to wait for AI traffic updates to arrive - they should just already be there.
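One common way to let every component extrapolate the same steady movement is second-order extrapolation from a timestamped sample. The Python sketch below shows that technique under stated assumptions: `MotionSample` and `extrapolate` are hypothetical names, the frame is a simple local Cartesian one, and nothing here is taken from the actual fgai *Physics* classes.

```python
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class MotionSample:
    """Hypothetical timestamped update as a remote component might receive it."""
    time: float          # simulation time of the sample, seconds
    position: Vec3       # metres in some local Cartesian frame
    velocity: Vec3       # metres per second
    acceleration: Vec3   # metres per second squared


def extrapolate(sample, time):
    """Second-order extrapolation: p + v*dt + a*dt^2/2 for each axis."""
    dt = time - sample.time
    return tuple(p + v * dt + 0.5 * a * dt * dt
                 for p, v, a in zip(sample.position,
                                    sample.velocity,
                                    sample.acceleration))


sample = MotionSample(time=10.0,
                      position=(0.0, 0.0, 100.0),
                      velocity=(50.0, 0.0, 0.0),
                      acceleration=(0.0, 0.0, -2.0))
print(extrapolate(sample, 12.0))  # (100.0, 0.0, 96.0)
```

Because every receiver evaluates the same formula on the same sample, all of them see the same position for a given simulation time, and a new sample only needs to be sent when the real motion departs from this prediction.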

There is also a small gateway application translating between the old multiplayer protocol and HLA that might go into the utils directory after the next release.

Standalone Viewers

Or take the viewers (also see FGViewer): if you just exchange data through a shared memory segment, you are limited to a single machine. That's nice for the 3-channel thing you have. But I know of installs with 9 channels that I visited some time ago. They run FlightGear on that beast, by the way. And I know of installs that currently run 14 or 16 channels within a single view. For that reason I thought: better to have a technology that is also extensible to this kind of install than to program everything on top of something limited to the local machine.

Standalone GUIs / Managers

In thinking about it a bit and being reminded of the existing HLA interface that FlightGear has, I'm leaning toward proposing something built with Python and the PyQt4 GUI library. Both components are cross-platform and there is a Python binding for the CERTI HLA library (PyHLA).

The idea here is to create a stand-alone application that replaces all the built-in GUI functionality and communicates with FlightGear via the HLA interface. When the manager application meets that goal, the existing GUI can be either removed completely or simply "unbound" at compile time so it's not available.

Such a GUI manager application must be included somehow in the basic set of functionality that still runs in threaded mode. You would just control your simulation with that component, maybe restarting with a different aircraft by shutting down the running federate and starting up a new one with a different FDM. As such, I am not sure about doing this core component in Python.

So Python is a great tool in the area of *optional* RTI components. If I remember correctly, you have a bigger install at home that might benefit very much from such components! In fact, this is one of the reasons I am pushing in this direction.

Python also has one major problem with threads: the global interpreter lock (GIL), which makes Python essentially single-threaded. While this is not a problem when such a component runs in its own process, Python in core components that need to run in threaded mode is essentially a no-go.

Instructor Station

The management application wouldn't be running any of the sub-systems, just "observing" them. One of the issues that caught me up short was the requirement to sift through the chosen aircraft's configuration and Nasal files in order to take into account all the little custom menu items and controls that would have to be replicated on the manager interface. That was pretty discouraging all by itself.

My initial concept would essentially perform the same functions as the instructor console in a commercial FFS. You could tweak any parameter of the simulator from that point, including aircraft selection, position, configuration, etc. This app wouldn't be running on the same machine as the simulator & scene generator itself. (What would be neat is a three machine setup - one for the scene generator, one for the flight/systems model and one for the instructor/manager console.)

FDM Server

We've toyed with the idea of an FDM server for a while, and there was even some work done on JSBSim towards making that happen. Recently, HDWysong has added the capability to use FlightGear as a visual front end for JSBSim as a separate, scripted application. It certainly would be a huge paradigm shift.

Multiplayer

The author of fgms would be quite interested in implementing fgms as part of an HLA infrastructure. What kept me from going that way is that I found no free (as in free beer) documentation on the HLA specifications, and that the structure is quite complex (too complex for a one-man show). Additionally, I'm not sure about the license issues involved. Are we allowed to publish all parts of (our) HLA infrastructure under the GPL (which would kind of undermine the cash flow of documentation providers like the IEEE)?

Regarding the multiplayer in FlightGear I see two options: 1) Either to implement a FlightGear proprietary protocol for multiplayer with a gateway to HLA, or 2) to actually use native HLA as a multiplayer protocol.

Solution 1) means that a new protocol and a new server (an updated fgms) need to be implemented, but the implementation requires no IEEE standards and does not depend on a third-party framework. Solution 2) does not require any new protocol or HLA gateway to be implemented (an HLA RTI would be used instead of fgms), but introduces an additional dependency on third-party software.

I see no point in implementing our own protocol and an additional gateway when we can use HLA directly. As long as we can implement and redistribute the federate code under the GPL (or a compatible license), we can make FlightGear act as an HLA federate and use an open-source RTI (instead of fgms).

I have thought about the use cases and communication needs that I can see. I came to the conclusion that the RTI abstracts away the communication in a way that closely matches the needs of a distributed simulation, where "distributed" means the same whether it is across processes, threads or machines. That's the reason I prepared the groundwork by starting my own project, OpenRTI. So this project is purely driven by distributing FlightGear across more computation power.

What will come in the shorter term is a standalone viewer (see FGViewer) that can sit on any of the configured views. The beginnings of that are checked in, but there is a lot more in preparation.

OpenRTI

Source: http://www.mail-archive.com/flightgear-devel@lists.sourceforge.net/msg32973.html

The RTI implementation from www.certi.org was used most of the time during development. While this one works somehow, it is sometimes difficult to handle and relatively slow.

Meanwhile, this has been replaced almost entirely with OpenRTI, a new RTI from https://sourceforge.net/p/openrti/OpenRTI/ci/master/tree/ that is way easier to set up and use and that provides way more features we might make use of with flightgear.

OpenRTI provides one mode where you can just access a process-local federation from multiple threads. There is no network configuration needed and you do not set up any server in this operation mode (of course, it also provides the usual networking mode). So the plan is to use this mode as an aid to parallelize flightgear on a local machine. The basic advantage is that each federate is programmed strictly single-threaded. All the thread synchronization is handled by the RTI library and hidden inside it. The trick is that each of these threads must be written so that it can alternatively be compiled into a single standalone binary, so the same component can run in a networked RTI (at the LinuxTag booth, for example).
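The idea of single-threaded federates sharing a process-local federation can be illustrated with a small toy model. This is only a sketch of the concept and does not use the OpenRTI or HLA API: the `LocalFederation` class, the federate functions and the altitude values are all hypothetical names and data made up for the illustration. The point it shows is that all locking lives in the shared "federation" object, while each federate is plain sequential code.

```python
import queue
import threading


class LocalFederation:
    """Toy stand-in for a process-local federation (NOT the OpenRTI API).

    All thread synchronization is kept inside this class.
    """

    def __init__(self):
        self._lock = threading.Lock()
        self._inboxes = {}

    def join(self, name):
        """Register a federate and return the inbox it should read from."""
        inbox = queue.Queue()
        with self._lock:
            self._inboxes[name] = inbox
        return inbox

    def publish(self, sender, update):
        """Deliver an update to every joined federate except the sender."""
        with self._lock:
            targets = [q for n, q in self._inboxes.items() if n != sender]
        for q in targets:
            q.put((sender, update))


def physics_federate(federation, steps):
    # Plain single-threaded code: no locks or conditions appear here.
    altitude = 1000.0  # hypothetical starting value
    for _ in range(steps):
        altitude += 5.0
        federation.publish("physics", {"altitude": altitude})
    federation.publish("physics", None)  # end-of-run marker


def viewer_federate(inbox, seen):
    # Also single-threaded: it just blocks on its own inbox.
    while True:
        _sender, update = inbox.get()
        if update is None:
            break
        seen.append(update["altitude"])


def run_demo(steps=3):
    federation = LocalFederation()
    viewer_inbox = federation.join("viewer")
    federation.join("physics")  # joins too, but receives nothing in this demo
    seen = []
    threads = [
        threading.Thread(target=viewer_federate, args=(viewer_inbox, seen)),
        threading.Thread(target=physics_federate, args=(federation, steps)),
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return seen
```

Because neither federate function touches a lock, the same functions could in principle be driven by a different transport (for example a networked one) without changes, which is the property the paragraph above is after.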

Testing

What you need:

- Latest simgear and flightgear as well as the data package.

- An RTI-1.3 implementation (such as OpenRTI)

libHLA is part of simgear (see simgear/hla). To build flightgear with "-D ENABLE_RTI=ON", you'll first need to build simgear with "-D ENABLE_RTI=ON". Before building SG and FG, you should build and install OpenRTI.

So, the implementation in flightgear does not make any assumptions about internal features of the RTI implementation, except for the way the RTI is configured to connect to its federation server. That means you can use any RTI implementation you like, as long as you take care of the appropriate connection setup. But for the default setup that we might choose, I intend to provide a configuration that makes use of some properties of OpenRTI. So in theory, you should not need to know anything about that unless you make sophisticated use of these features.

Start flightgear with "--hla=bi,<hz>,<federateType>,<federationName>,<profile>", where <hz> is the communication frequency, <federateType> is the name of your federate (note that this must be unique with certi) and <federationName> is the federation you want to join. The <profile> argument defaults to the top level configuration file mp-aircraft.xml, which defines a flightgear-adapted RTI/HLA federation definition. There is also an alternate av-aircraft.xml top level configuration file that should enable flightgear to be used in an AviationSim federation, which is the C++ hardcoded federation type of the other flightgear HLA implementation out there.

You can still use the --hla= commands, but there is a shortcut, --hla-local, which optimizes away a lot of the successive ,,, in the command line that I usually had. So, --hla-local=rti://localhost/FlightGear is a shortcut for a longer --hla= line.
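As a small illustration of the field order in the long form, the following sketch composes a --hla= argument from its parts. The helper name `build_hla_option` and the example federate name are hypothetical. It is also an assumption that the default profile is passed as the file name mp-aircraft.xml when written out explicitly, since the text above only says that the argument defaults to that file. The expansion of --hla-local is not reproduced here because it is not spelled out above.

```python
def build_hla_option(hz, federate_type, federation_name,
                     profile="mp-aircraft.xml", direction="bi"):
    """Compose "--hla=<direction>,<hz>,<federateType>,<federationName>,<profile>".

    Hypothetical helper: it only joins the fields in the order quoted in the
    text and does not check them against any RTI.
    """
    fields = [direction, str(hz), federate_type, federation_name, profile]
    for field in fields:
        # A comma inside a field would shift every later field by one.
        if "," in field:
            raise ValueError(f"field must not contain a comma: {field!r}")
    return "--hla=" + ",".join(fields)


# Example with made-up values: a federate named "fgfs-1" joining the
# "FlightGear" federation at 30 Hz with the default profile.
option = build_hla_option(30, "fgfs-1", "FlightGear")
print(option)
```

Swapping the `profile` argument for av-aircraft.xml gives the alternate configuration mentioned above.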

Additional info

Just search the archives (list/forum) for "HLA" or "RTI":

- http://www.mail-archive.com/search?q=hla&l=flightgear-devel%40lists.sourceforge.net

- http://forum.flightgear.org/search.php?st=0&sk=t&sd=d&sr=posts&keywords=hla

- http://www.mail-archive.com/flightgear-devel@lists.sourceforge.net/msg39181.html

Related

- http://wiki.flightgear.org/images/1/1e/New_FG_architecture.pdf

- http://www.mail-archive.com/flightgear-devel@lists.sourceforge.net/msg16023.html

- http://www.mail-archive.com/flightgear-devel@lists.sourceforge.net/msg37646.html

- http://www.mail-archive.com/flightgear-devel@lists.sourceforge.net/msg37647.html

- http://virtualair.sourceforge.net/flightgear.html

- http://www.chromium.org/developers/design-documents/multi-process-architecture

- http://blog.chromium.org/2008/09/multi-process-architecture.html

- http://news.softpedia.com/news/Multi-Processes-in-Browsers-Chrome-Internet-Explorer-Firefox-and-WebKit-140535.shtml

- http://blog.mozilla.org/products/2011/07/15/goals-for-multi-process-firefox/