Howto:Shader programming in FlightGear

The FlightGear forum has a subforum related to Effects & Shaders.


This is meant to become an introduction to shader programming in FlightGear. For the time being (03/2010), it is a work in progress, so please feel free to ask questions or suggest topics. Your help in improving and updating this article is appreciated, thanks!

If you are creating a shader from scratch, you should follow the Shader Style Guide.

Keep in mind that this article assumes GLSL version 120. Some of the syntax might be outdated for modern GLSL versions (330 onwards).

Tutorials about GLSL programming in general are collected at GLSL Shader Programming Resources. For an OpenGL quick reference, please see http://www.khronos.org/files/opengl-quick-reference-card.pdf. For a GLSL quick reference, see glsl_quickref.pdf.

What is GLSL?

GLSL (OpenGL Shading Language or "GLslang") is the official OpenGL shading language. It allows you to write programs, so-called "shaders", in a high-level shading language based on the C programming language. These programs are used as OpenGL fragment (pixel) and vertex shaders.

With the recent advances in graphics cards, new features have been added to allow for increased flexibility in the rendering pipeline at the vertex and fragment level. Programmability at this level is achieved with the use of fragment and vertex shaders.

GLSL was created to give developers more direct control of the graphics pipeline without having to use assembly language or hardware-specific languages. Shaders make it possible to process each vertex or fragment individually, so complex rendering tasks can be accomplished without stressing the CPU. Support for shaders was first introduced through extensions in OpenGL 1.5, but it is now part of the core OpenGL 2.0 standard.

Shaders are written and stored as plain text files. These are uploaded to the graphics driver (as strings), compiled there, and executed on the GPU (the processor of the graphics card).

What is a shader?

A shader is a programmable replacement for parts of the fixed-function OpenGL pipeline. You can think of it as a kind of "plugin" that customizes rendering for specific scene elements.

GLSL shaders are not stand-alone applications; they require an application that uses the OpenGL API. A shader is a program, and to be run it must be loaded, compiled and linked. Shaders are compiled when the 3D application starts, and they are validated and optimized for the current hardware at that point.

Each shader stage (vertex and fragment) of a program must have exactly one entry point, the main function. You can still create more shaders and link them into the same program.

GLSL shaders themselves are simply a set of strings. An application passes them to the hardware vendor's driver for compilation through the OpenGL API's entry points. Shaders can be created on the fly from within an application or read in as text files, but they must be sent to the driver in the form of a string.

GLSL has explicit ties to the OpenGL API - to the extent that much of the OpenGL "state" (for example which light sources are bound, what material properties are currently set up) is presented as pre-defined global variables in GLSL.

Shaders offer:

- Opportunity for Improved Visual Quality

- Algorithm Flexibility

- Performance Benefits

Shaders have access to textures and the render state (parameters, matrices, lights, materials, etc.). A "pass" is the rendering of a 3D model with a vertex and fragment shader pair. An effect can require multiple passes, and each pass can use a different shader and/or model pair. A pass can also render to a texture, which another pass then uses. Think of the "fixed functionality" as the default shader.

Put simply, a shader is a program that is loaded on the GPU and called for every vertex or fragment (pixel). This lets programmers implement techniques and visual effects and execute them faster. Modern games and simulators use lots of shaders: lights, water, skinning, reflections and much more.

We can create as many shader programs as needed. You can have many shaders of the same type (vertex or fragment) attached to the same program, but only one of them can define the entry point — the main() function.

Each shader program is assigned a handle, and you can have as many programs linked and ready to use as you want (and your hardware allows). While rendering, we can switch from program to program, and even go back to fixed functionality, during a single frame.

To really understand shaders, you should know the rendering pipeline, because it shows where and when the shaders act in the rendering process. The stages run in this order:

- Vertices are collected and processed by the vertex shader.
- Primitives are assembled from the vertices and rasterized into fragments.
- The fragment shader computes the color of each fragment (applying textures, lighting and so on).
- The results are written to the frame buffer.

Some benefits of using GLSL are:

- Cross platform compatibility on multiple operating systems, including Linux, Mac OS and Windows.

- The ability to write shaders that can be used on any hardware vendor’s graphics card that supports the OpenGL Shading Language.

- Each hardware vendor includes the GLSL compiler in their driver, so each vendor can generate code optimized for their particular graphics card's architecture.

Language features

While GLSL has a C-like syntax, it introduces some new types and keywords. For a detailed view of the language, please see the GLSL specification, which you can find at http://www.opengl.org/documentation/glsl/

The OpenGL Shading Language provides many operators familiar to those with a background in using the C programming language. This gives shader developers flexibility when writing shaders. GLSL contains the operators in C and C++, with the exception of pointers. Bitwise operators were added in version 1.30.

Similar to the C programming language, GLSL supports loops and branching, including if, else, if/else, for, do-while, break, continue, etc.

User-defined functions are supported, and a wide variety of commonly used functions are built in as well. Built-in functions give graphics card manufacturers the opportunity to optimize them at the hardware level. Many of them resemble functions from the C math library, such as exp() and abs(). Others are specific to graphics programming, such as smoothstep() and texture2D().

Error Reports, Debugging, Troubleshooting

Shaders are compiled at FG startup.

Shader compilation errors can be found in the fgfs.log file; see the fgfs.log article for more about this file.

As of FG 2016.4.4, shaders do not seem to be recompiled on Debug/Reload Aircraft Model or File/Reset. The only way to recompile and test a shader is to quit and restart FlightGear altogether.

Shader types

In GLSL 1.20 there are two types of shaders: vertex shaders and fragment shaders. Geometry shaders became part of core OpenGL in version 3.2.

These are executed by vertex and fragment processors in the graphics hardware.

- Vertex shaders transform vertices, set up data for fragment shaders

- Fragment shaders operate on fragments generated by rasterization

- Geometry shaders create geometry on the GPU

Typically, vertex shader files use the file extension .vert, while fragment shader files use the .frag extension.

In FlightGear, these files can be found in the Shaders subdirectory of the base package, i.e. $FG_ROOT/Shaders. This is also the place to look for a list of the currently available shaders.

Shaders generally come in pairs. The vertex shader is a short program that takes in one vertex from the application running on the CPU and produces one vertex. That vertex is passed on to the GPU rasterizer, which uses the vertices to create triangles and then chops them up into individual pixel-sized fragments.

A vertex shader is run once per vertex, while a fragment shader is run once per fragment covered by the primitive being rendered (a point, a line or a triangle). A fragment corresponds to a pixel, except with multi-sampling, where a pixel can be the weighted average of several fragments. Multi-sampling is used to remove aliasing (jagged edges). Many such executions can happen in parallel, and there is no communication or ordering between them. Vertex shaders are flexible and quick.

Vertex shaders

> The vertex shader doesn't know anything about the mesh it renders, it just knows one single vertex at a time and all the info that is attached to the vertex (normals, tangents, binormals, color,...) And the vertex shader doesn't really draw anything, it just takes care of all the things which have to do with 'where in space' you are.
>
> The way this works is that for all the vertices of an object you want to render, the position of the object gets attached to all vertices (currently in the color spot). The vertex shader then just adds the offset vector to the vertex coordinate with respect to the origin.
>
> (Renk Thorsten, 2014-05-11, Re: [Flightgear-devel] cities in FG & how to move forward)

| Property | Vertex shader |
|---|---|
| Input | Vertex attributes |
| Output | At least the vertex position (in clip space) |
| Restrictions | Cannot access any vertex other than the current one |
| Note | Loading a vertex shader turns off parts of the OpenGL pipeline (vertex shaders fully replace the "Transform & Lighting unit"). |

Objects in a computer graphics scene are usually meshes made up of polygons. The corner of each of those polygons is called a vertex. A vertex shader receives two kinds of input: per-vertex variables called attribute variables, and variables that stay constant for a whole draw call, called uniform variables. The vertex shader must specify the coordinates of the vertex in question, and this is how it can modify the geometry of the object.

Vertex shaders operate on each vertex. The vertex shader is executed once for every vertex submitted by an OpenGL call (for example glVertex* or glDrawArrays).

This means, for example, that for a mesh containing 5000 vertices, the vertex shader is executed 5000 times.

A single vertex is composed of a number of attributes (vertex attribs), the most common being position, texture coordinates, normal and color. The position attribute is the most important one. The coordinates (x, y and z) of the incoming vertex position are the ones the 3D modeler gave when creating the 3D model. They are defined in the local space of the mesh (object space).

A vertex shader provides almost full control over what is happening with each vertex. Consequently, all per-vertex operations of the fixed function OpenGL pipeline are replaced by the custom vertex shader.

Vertex shaders take application geometry and per-vertex attributes as input and transform the input data in some meaningful way.

- A vertex shader must write to gl_Position
- A vertex shader can write to gl_PointSize and gl_ClipVertex
- gl_Vertex is an attribute supplying the untransformed vertex coordinate
- gl_Position is a special output variable for the transformed vertex coordinate
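You can check the product behind gl_Position on the CPU. Below is a minimal C sketch of gl_ModelViewProjectionMatrix * gl_Vertex, followed by the division by w that the pipeline performs after the vertex shader. It assumes OpenGL's column-major matrix layout. The types mat4 and vec4 and both function names are hypothetical C stand-ins for the GLSL types.

```c
#include <stddef.h>

/* Column-major 4x4 matrix, the memory layout OpenGL uses:
 * element (row r, column c) is stored at m[c * 4 + r]. */
typedef struct { float m[16]; } mat4;
typedef struct { float x, y, z, w; } vec4;

/* out = M * v, i.e. what
 * gl_Position = gl_ModelViewProjectionMatrix * gl_Vertex; computes. */
vec4 mat4_mul_vec4(const mat4 *M, vec4 v)
{
    const float in[4] = { v.x, v.y, v.z, v.w };
    float out[4];
    for (size_t r = 0; r < 4; ++r) {
        out[r] = 0.0f;
        for (size_t c = 0; c < 4; ++c)
            out[r] += M->m[c * 4 + r] * in[c];
    }
    return (vec4){ out[0], out[1], out[2], out[3] };
}

/* After the vertex shader, the pipeline divides the clip-space
 * position by w to get normalized device coordinates. */
vec4 perspective_divide(vec4 clip)
{
    return (vec4){ clip.x / clip.w, clip.y / clip.w, clip.z / clip.w, 1.0f };
}
```

As an example, take a pure translation by (1, 2, 3) stored in the last column. That matrix moves the vertex (1, 1, 1, 1) to (2, 3, 4, 1).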

A vertex shader can also set other variables, called varying variables, whose values are passed on to the second kind of shader, the fragment shader. The fragment shader runs for every pixel on the screen where the polygons of the mesh appear. It is responsible for setting the final color of that little piece of the mesh.

Common tasks for a vertex shader include:

- Vertex position transformation

- Per vertex lighting

- Normal transformation

- Texture coordinates transformation or generation

- Vertex color computation

- Geometry skinning

- Animation

- Setting up data for fragment shaders

The vertex shader runs from start to end for each and every vertex that's passed into the graphics card – the fragment process does the same thing at the pixel level. In most scenes there are a heck of a lot more pixel fragments than there are vertices – so the performance of the fragment shader is vastly more important and any work we can do in the vertex shader, we probably should.

A minimal vertex shader may look like this:

    void main(void)
    {
        // transform the vertex the same way the fixed-function pipeline does
        gl_Position = ftransform();
    }

Fragment shaders

> the fragment shader basically knows only the pixel it is about to render and whatever information is passed from the vertex shader. Based on 'where' the vertex shader says the pixel is, the rasterizer stage determines what the texture for the pixel should be.
>
> But there are techniques to do this in a different way, for instance the water depth map uses world coordinates to look up a world texture, and the gardens would have to be drawn in a similar way.
>
> (Renk Thorsten, 2014-05-11, Re: [Flightgear-devel] cities in FG & how to move forward)

| Property | Fragment shader |
|---|---|
| Input | Interpolation of the vertex shader outputs |
| Output | Usually a fragment color |
| Restrictions | Fragment shaders have no knowledge of neighboring pixels |
| Note | Loading a fragment shader turns off parts of the OpenGL pipeline (pixel shaders fully replace the "Texturing Unit"). |

The other shader is the fragment shader, also (incorrectly) known as the "pixel shader". It takes one fragment from the rasterizer and generates one color to write or blend into the frame buffer.

A fragment shader can write to the following special output variables:

- gl_FragColor to set the color of the fragment
- gl_FragData[n] to output to a specific render target
- gl_FragDepth to set the fragment depth

Common tasks of fragment shaders include:

- Texturing (even procedural)

- Per pixel lighting and material application

- Ray tracing

- Fragment color computation

- Operations on Interpolated Values

- Doing operations per fragment to make pretty pictures

A minimal fragment shader may look like this:

    void main(void)
    {
        // color every fragment opaque red (RGBA)
        gl_FragColor = vec4(1.0, 0.0, 0.0, 1.0);
    }

A fragment shader takes perspective-correct interpolated attribute values as input and either discards the fragment or outputs the fragment's color.

Fragment shaders operate on every fragment produced by rasterization and give you nearly full control over what happens with each fragment. A vertex shader replaces the per-vertex operations of the fixed-function pipeline. In the same way, a fragment shader replaces the fixed-function texturing, color sum and fog stages. Per-fragment tests such as the depth test, as well as blending, still take place afterwards.

Data types in GLSL

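GLSL is strict about types. GLSL 1.10 has no implicit type conversion at all, and GLSL 1.20 adds only a few implicit conversions (such as int to float). It is safest to do all conversions and initializations with explicit constructor calls, e.g. float(i) or vec3(1.0).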

Scalars

- float: 32 bit, very nearly IEEE-754 compatible
- int: at least 16 bit, but not backed by a fixed-width register
- bool: like in C++, but conditions in all flow control (if, while, ...) must be of type bool

Vectors

- vec2, vec3, vec4: 2D, 3D and 4D floating point vectors
- ivec2, ivec3, ivec4: 2D, 3D and 4D integer vectors
- bvec2, bvec3, bvec4: 2D, 3D and 4D boolean vectors

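Vector components can be accessed using letters as well as the standard C array subscript (v[0]).

Use the letters x,y,z,w to access position components, r,g,b,a for color components, and s,t,p,q for texture coordinates. Combining several letters builds a new vector from the selected components in any order; this is called swizzling. For a vec4 v, v.xy is a vec2, and v.bgr is a vec3 containing the first three components in reverse order. Letters from different sets cannot be mixed in one selector.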
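Swizzling means selecting and reordering vector components with letter combinations such as v.zyx. You can emulate it in C to see exactly which component each letter picks. This is a minimal sketch, and swizzle() and component_index() are hypothetical helpers. Unlike a real GLSL compiler, they do not reject selectors that mix letter sets (for example v.xg).

```c
#include <stddef.h>

/* Map one selector letter to a component index, as GLSL does:
 * x/r/s -> 0, y/g/t -> 1, z/b/p -> 2, w/a/q -> 3; -1 otherwise. */
static int component_index(char c)
{
    switch (c) {
    case 'x': case 'r': case 's': return 0;
    case 'y': case 'g': case 't': return 1;
    case 'z': case 'b': case 'p': return 2;
    case 'w': case 'a': case 'q': return 3;
    default:                      return -1;
    }
}

/* Emulate a swizzle such as v.zyx: copy the selected components of the
 * 4-component vector v into out, in the order given by sel.
 * Returns the number of components written, or -1 for an invalid
 * letter or more than 4 letters. Mixed letter sets are NOT rejected. */
int swizzle(const float v[4], const char *sel, float out[4])
{
    int n = 0;
    for (; sel[n] != '\0'; ++n) {
        if (n == 4)
            return -1;                 /* at most 4 components */
        int i = component_index(sel[n]);
        if (i < 0)
            return -1;
        out[n] = v[i];
    }
    return n;
}
```

Repeating a component, as in v.rrgg, is allowed when reading a vector but not on the left-hand side of an assignment.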

Matrices

- mat2: 2x2 floating point matrix
- mat3: 3x3 floating point matrix
- mat4: 4x4 floating point matrix

Matrices are column-major: for a mat4 m, m[2] is its third column (a vec4).

Samplers

In GLSL, textures are represented and accessed using so-called "samplers", which must be declared as uniforms. The following samplers are available:

- sampler1D, sampler2D, sampler3D: 1D, 2D and 3D textures
- samplerCube: cube map texture
- sampler1DShadow, sampler2DShadow: 1D and 2D depth-component textures

Arrays

GLSL supports the same syntax for creating arrays that is already known from C or C++, e.g.:

    vec2 foo[10];

Array elements are accessed as in C. In GLSL 1.10, arrays cannot be initialized when declared. GLSL 1.20 adds array constructors, so a declaration such as float a[3] = float[3](1.0, 2.0, 3.0); is allowed.

Structures

Structures can also be created like in C or C++, e.g.:

    struct foo {
        vec3 pos;
    };

Global storage qualifiers

Used for communication between shaders and application:

- const: for declaring non-writable, compile-time constant variables
- attribute: for frequently changing (per-vertex) information passed from the application to a vertex shader (no integers, bools, structs, or arrays)
- uniform: for infrequently changing information passed from the application to a vertex or fragment shader. Uniforms are constant shader parameters that can be changed between draw calls; they cannot be written to in a shader and do not change per vertex or per fragment.
- varying: for information passed from a vertex shader to a fragment shader. Varyings are interpolated in a perspective-correct manner during rasterization; they can be written in the vertex shader but only read in the fragment shader.

Functions

- Much like C++
- Entry point into a shader is void main()
- Overloading based on parameter type (but not return type)
- No support for direct or indirect recursion
- Call by value-return calling convention

As in C, a shader is structured in functions. Each type of shader must have a main function declared with the following syntax: void main()

User-defined functions are allowed. As in C, a function may have a return value and use the return statement to pass out its result, or it can be void. The return type can be any type except an array.

Parameter qualifiers

The parameters of a function may have the following qualifiers:

- in: copy in, but don't copy back out (still writable within the function)
- out: only copy out; undefined at function entry
- inout: copy in and copy out

If no qualifier is specified, by default it is considered to be in.
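You can mimic the copy-in/copy-out behaviour of the parameter qualifiers in C with local copies and pointers. The GLSL function in the comment and its C counterpart scale_emulated() are hypothetical examples, not part of any FlightGear shader.

```c
/* C sketch of GLSL's value-return (copy-in/copy-out) convention for
 * this hypothetical GLSL function:
 *
 *   void scale(in float s, out float doubled, inout float acc)
 *   { s *= 2.0; doubled = s; acc += s; }
 */
void scale_emulated(float s, float *doubled, float *acc)
{
    float acc_local = *acc;   /* inout: copied in at the call            */
    float doubled_local;      /* out: undefined on entry, never read     */

    s *= 2.0f;                /* in: only the local copy changes         */
    doubled_local = s;
    acc_local += s;

    *doubled = doubled_local; /* out and inout: copied back on return    */
    *acc = acc_local;
}
```

Take a call with s = 1.5 and acc = 10.0. The caller's s stays unchanged, doubled becomes 3.0 and acc becomes 13.0.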

Built-ins

Vertex shader

    vec4  gl_Position;    // must be written
    vec4  gl_ClipVertex;  // may be written
    float gl_PointSize;   // may be written

Fragment shader

    vec4  gl_FragColor;   // may be written
    float gl_FragDepth;   // may be read/written
    vec4  gl_FragCoord;   // may be read
    bool  gl_FrontFacing; // may be read
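gl_FragCoord holds window coordinates. These come from the viewport transform, applied after the vertex shader's clip-space output has been divided by w. Below is a minimal C sketch of that mapping. It assumes a viewport at (0, 0) covering width x height pixels and the default depth range [0, 1]. win_coord and ndc_to_window() are hypothetical names.

```c
/* Window coordinates as seen through gl_FragCoord (x, y in pixels,
 * z as the depth value). */
typedef struct { float x, y, z; } win_coord;

/* Map normalized device coordinates (-1..1 on each axis) to window
 * coordinates for a viewport at (0, 0) of size width x height and the
 * default depth range [0, 1]. */
win_coord ndc_to_window(float xn, float yn, float zn, float width, float height)
{
    win_coord w;
    w.x = (xn + 1.0f) * 0.5f * width;   /* -1..1 -> 0..width                 */
    w.y = (yn + 1.0f) * 0.5f * height;  /* -1..1 -> 0..height, origin bottom */
    w.z = (zn + 1.0f) * 0.5f;           /* -1..1 -> 0..1                     */
    return w;
}
```

The default origin is the lower-left corner, and pixel centres lie at half-integer positions. So in a fragment shader, the bottom-left pixel sees gl_FragCoord.xy = (0.5, 0.5). This is why searchlight() in secondary_lights.frag can compare gl_FragCoord.xy directly against positions computed from display_xsize and display_ysize.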

Vertex attributes

Only available in vertex shaders.

    attribute vec4  gl_Vertex;
    attribute vec3  gl_Normal;
    attribute vec4  gl_Color;
    attribute vec4  gl_SecondaryColor;
    attribute vec4  gl_MultiTexCoordn;  // i.e. gl_MultiTexCoord0 ... gl_MultiTexCoord7
    attribute float gl_FogCoord;

Uniforms

    uniform mat4 gl_ModelViewMatrix;
    uniform mat4 gl_ProjectionMatrix;
    uniform mat4 gl_ModelViewProjectionMatrix;
    uniform mat3 gl_NormalMatrix;
    uniform mat4 gl_TextureMatrix[n];

    struct gl_MaterialParameters {
        vec4  emission;
        vec4  ambient;
        vec4  diffuse;
        vec4  specular;
        float shininess;
    };

    uniform gl_MaterialParameters gl_FrontMaterial;
    uniform gl_MaterialParameters gl_BackMaterial;

    struct gl_LightSourceParameters {
        vec4  ambient;
        vec4  diffuse;
        vec4  specular;
        vec4  position;
        vec4  halfVector;
        vec3  spotDirection;
        float spotExponent;
        float spotCutoff;
        float spotCosCutoff;
        float constantAttenuation;
        float linearAttenuation;
        float quadraticAttenuation;
    };

    uniform gl_LightSourceParameters gl_LightSource[gl_MaxLights];

Varyings

Varying variables provide the interface between vertex and fragment shaders: vertex shaders compute values per vertex, and fragment shaders compute values per fragment. A varying variable written by a vertex shader is interpolated (perspective-correct) over the primitive being rendered. The fragment shader then reads the interpolated value.

Varying variables can only be of the types float, vec2, vec3, vec4, mat2, mat3 and mat4 (and arrays of these).

    varying vec4  gl_FrontColor;           // vertex
    varying vec4  gl_BackColor;            // vertex
    varying vec4  gl_FrontSecondaryColor;  // vertex
    varying vec4  gl_BackSecondaryColor;   // vertex
    varying vec4  gl_Color;                // fragment
    varying vec4  gl_SecondaryColor;       // fragment
    varying vec4  gl_TexCoord[];           // both
    varying float gl_FogFragCoord;         // both
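Perspective-correct interpolation means the rasterizer does not blend varying values linearly in screen space. Instead, it interpolates value/w and 1/w linearly and divides the two. Below is a minimal C sketch for one float varying of a triangle. It assumes the fragment's screen-space barycentric weights and each vertex's clip-space w (gl_Position.w) are known. interpolate_varying() is a hypothetical name.

```c
/* Perspective-correct interpolation of one varying across a triangle.
 * b0..b2: the fragment's screen-space barycentric weights (sum to 1)
 * a0..a2: the varying's values written by the vertex shader
 * w0..w2: clip-space w of each vertex (gl_Position.w) */
float interpolate_varying(float b0, float b1, float b2,
                          float a0, float a1, float a2,
                          float w0, float w1, float w2)
{
    /* a/w and 1/w vary linearly in screen space; a itself does not */
    float num = b0 * a0 / w0 + b1 * a1 / w1 + b2 * a2 / w2;
    float den = b0 / w0 + b1 / w1 + b2 / w2;
    return num / den;
}
```

Consider a near vertex (value 0.0, w = 1) and a far vertex (value 10.0, w = 3). Halfway across the screen between them, the fragment receives about 2.5 rather than 5.0, because the nearer vertex covers more of the screen.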

Functions

Anatomy of a shader

A shader's entry point is the main function, which returns void and takes no arguments.

Anatomy of a vertex shader

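The function void main() is called afresh for each vertex in the 3D object model:

    // Vertex Shader
    void main() {
        // passes the vertex through untransformed, which is only correct if the
        // coordinates are already in clip space; normally you would write
        // gl_Position = gl_ModelViewProjectionMatrix * gl_Vertex;
        gl_Position = gl_Vertex;
    }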

Anatomy of a fragment shader

The function void main() is called afresh for each fragment covered by the primitives being rendered:

    // Fragment Shader
    void main() {
        gl_FragColor = vec4(1.0, 1.0, 1.0, 1.0); // opaque white
    }

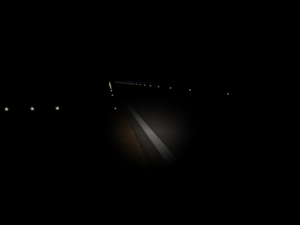

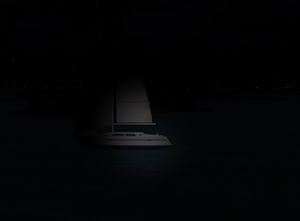

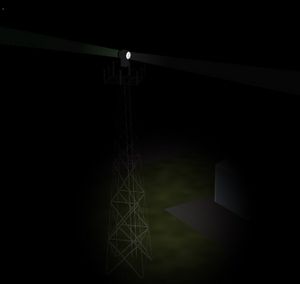

Practical application – ALS landing lights – spotlight

The ALS landing light and spotlight (we'll call them ALS lights from now on) are a good example of how to incorporate a shader effect into FlightGear. They touch many parts of the visuals we see and many parts of the coding pipeline.

In the case of ALS Lights, you have to add the effect to every visual item rendered on the screen that you want to see a light shining on. If you want it to be capable of shining on everything, you have to account for each separate item and how that item is rendered. That is a lot of code to touch.

The list might include

- Terrain

- Runway

- Dirtrunway

- Agriculture

- Models

- AI

- Aircraft

- Tree

- Buildings

- Weather

- Fog

- Clouds

- Hazes

- Water

- Inland

- Ocean

- Stream

Some of these items may be controlled or rendered by the same effect and shader file. They might have to be accounted for individually. They may have special lighting influences that have to be accounted for. You have to take each one separately and account for all its needs.

The example highlighted in this article is what was added to tree.eff to shine the lights on trees.

Program flow simplified

Preferences/Nasal/XML → Property tree → Effect file → Shader → Rendered to screen

Preferences/Nasal/XML

Any combination of Preferences, Nasal or XML manipulates data in the property tree.

In this case, $FG_ROOT/preferences.xml defines the switches that turn on the landing light or spotlight, plus a couple of other needed data containers, with the following lines:

    <als-secondary-lights>
        <use-searchlight type="bool">false</use-searchlight>
        <use-landing-light type="bool">false</use-landing-light>
        <use-alt-landing-light type="bool">false</use-alt-landing-light>
        <landing-light1-offset-deg type="float">0.0</landing-light1-offset-deg>
        <landing-light2-offset-deg type="float">0.0</landing-light2-offset-deg>
    </als-secondary-lights>

They show up in the property tree under /sim/rendering/als-secondary-lights, where normal Nasal calls or XML can activate or change them.

Property tree

The property tree is like the CPU of the FlightGear program at the user level. It is a go-between that allows the user to see and influence many aspects at the heart of the program in ALMOST real time. More of the internals of FlightGear are exposed to the property tree than ever before, giving user-level access to areas of the code that used to be reserved for programmers. The property tree is fed information one step removed from the C++ source, so care must be taken in how it is used. Depending on how it is used, changes made through it will not be as responsive as the same changes made at the C++ source level.

Effects file

The effects file is the mechanism we use to combine and manipulate all the data needed to create stunning visual effects. It links the data contained in and produced by Nasal, XML and the property tree to the graphics rendering pipeline. It lets us create these effects without having to know or use the C++ code base, and its flexible framework allows for an almost infinite range of sophisticated effects.

See this page for more details: Effect Framework

Parameters

Parameter entries defined in the Effect file correspond to a property tree data container (static or variable). They will contain the data needed by the shader program to perform its magic. The type of information contained in the property tree might be program control data or variable/static data that the shader program can manipulate prior to sending on to render. In the case of ALS lights, below is some of the data passed to, and used by, the shader program.

    <display_xsize><use>/sim/startup/xsize</use></display_xsize>
    <display_ysize><use>/sim/startup/ysize</use></display_ysize>
    <view_pitch_offset><use>/sim/current-view/pitch-offset-deg</use></view_pitch_offset>
    <view_heading_offset><use>/sim/current-view/heading-offset-deg</use></view_heading_offset>
    <view_fov><use>/sim/current-view/field-of-view</use></view_fov>
    <use_searchlight><use>/sim/rendering/als-secondary-lights/use-searchlight</use></use_searchlight>
    <use_landing_light><use>/sim/rendering/als-secondary-lights/use-landing-light</use></use_landing_light>
    <use_alt_landing_light><use>/sim/rendering/als-secondary-lights/use-alt-landing-light</use></use_alt_landing_light>
    <landing_light1_offset><use>/sim/rendering/als-secondary-lights/landing-light1-offset-deg</use></landing_light1_offset>
    <landing_light2_offset><use>/sim/rendering/als-secondary-lights/landing-light2-offset-deg</use></landing_light2_offset>
    <quality_level><use>/sim/rendering/shaders/landmass</use></quality_level>
    <tquality_level><use>/sim/rendering/shaders/transition</use></tquality_level>

Note the use_searchlight entry. It points to the use-searchlight property under /sim/rendering/als-secondary-lights that was defined in preferences.xml.

Some of this data may play a dual role inside the shader program, controlling other functions in addition to ALS lights. Other parameter entries have nothing to do with ALS lights and may be used for other actions or effects the shader is handling.

Technique

In general, the shader program and the uniforms are defined between the technique tags. Each technique is assigned an index that distinguishes it from the others (technique n="1"). Sometimes, as with tree.eff, the shader program and its uniforms are defined and needed in more than one technique; tree.eff uses them in techniques 4 and 5. This means that in FlightGear, with the tree shader set to either of the two highest shader settings, ALS lights are still produced when activated.

Shader program

Next comes the entry that defines which shader program the parameter data is passed to; this is where you specify the shader program used by the technique. ALS has the lowest techniques, with higher quality preceding lower quality.

    <program>
        <fragment-shader>Shaders/tree-ALS.frag</fragment-shader>
        <fragment-shader>Shaders/secondary_lights.frag</fragment-shader>
    </program>

In the case of ALS Lights, so far we only have to deal with the fragment shader.

The program section of the effect file is a nifty method that lets users add shaders to FlightGear without adding code to the C++ code base. The C++ code is programmed to recognize the XML tag pair <program></program> and to incorporate the GLSL program files listed between the tags. Without it, you would have to add the GLSL program calls to the C++ code. That requires a completely different set of programming skills and means recompiling FlightGear every time you want to add a new shader. This approach works because shader programs are compiled at run time.

We'll describe the contents of the shader programs below. For now, suffice it to say that tree-ALS.frag contains the main program. secondary_lights.frag contains functions that use the uniform data to compute light contributions, which they return to main() for processing.

Uniforms

The uniforms section is the mechanism that feeds the parameter data to the shader program.

    <uniform>
        <name>view_pitch_offset</name>
        <type>float</type>
        <value><use>view_pitch_offset</use></value>
    </uniform>
    <uniform>
        <name>view_heading_offset</name>
        <type>float</type>
        <value><use>view_heading_offset</use></value>
    </uniform>
    <uniform>
        <name>field_of_view</name>
        <type>float</type>
        <value><use>view_fov</use></value>
    </uniform>
    <uniform>
        <name>landing_light1_offset</name>
        <type>float</type>
        <value><use>landing_light1_offset</use></value>
    </uniform>
    <uniform>
        <name>landing_light2_offset</name>
        <type>float</type>
        <value><use>landing_light2_offset</use></value>
    </uniform>
    <uniform>
        <name>use_searchlight</name>
        <type>int</type>
        <value><use>use_searchlight</use></value>
    </uniform>
    <uniform>
        <name>use_landing_light</name>
        <type>int</type>
        <value><use>use_landing_light</use></value>
    </uniform>
    <uniform>
        <name>use_alt_landing_light</name>
        <type>int</type>
        <value><use>use_alt_landing_light</use></value>
    </uniform>
    <uniform>
        <name>display_xsize</name>
        <type>int</type>
        <value><use>display_xsize</use></value>
    </uniform>
    <uniform>
        <name>display_ysize</name>
        <type>int</type>
        <value><use>display_ysize</use></value>
    </uniform>

Follow the name use_searchlight: it was originally defined in preferences.xml, then became a parameter entry, and is now passed to the shader program by the uniform. The "Shader programs" section below shows how the shader receives the uniform's data.

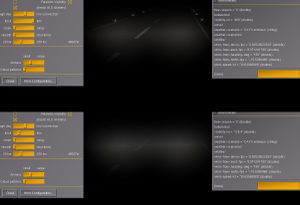

Shader programs

The shader programs used in this example are tree-ALS.frag and secondary_lights.frag.

secondary_lights.frag

secondary_lights.frag consists of

- Uniform inputs (data coming into the shader to be manipulated)
- Functions that manipulate the uniform data

Following is the actual GLSL code in secondary_lights.frag.

Uniform input

    uniform int display_xsize;
    uniform int display_ysize;
    uniform float field_of_view;
    uniform float view_pitch_offset;
    uniform float view_heading_offset;

Functions

    float light_distance_fading(in float dist)
    {
        // 1.0 up to dist = 100, then falls off with 1/dist^2
        return min(1.0, 10000.0/(dist*dist));
    }

    float fog_backscatter(in float avisibility)
    {
        return 0.5* min(1.0,10000.0/(avisibility*avisibility));
    }

    vec3 searchlight()
    {
        // spot centre: middle of the screen, 40% up from the bottom
        vec2 center = vec2 (float(display_xsize) * 0.5, float(display_ysize) * 0.4);
        float headlightIntensity;
        // spot radius in pixels (about 9.16 degrees of the horizontal field of view)
        float lightRadius = (float(display_xsize) *9.16 /field_of_view);
        // distance of this fragment from the spot centre, in pixels
        float angularDist = length(gl_FragCoord.xy -center);
        if (angularDist < lightRadius)
        {
            // cos^2 falloff: 1 at the centre, 0 at the rim (1.57075 ~ pi/2)
            headlightIntensity = pow(cos(angularDist/lightRadius * 1.57075),2.0);
            //headlightIntensity = headlightIntensity *
            //headlightIntensity*= clamp(1.0 + 0.15 * log(1000.0/(dist*dist)),0.0,1.0);
            return headlightIntensity * vec3 (0.5,0.5, 0.5);
        }
        else return vec3 (0.0,0.0,0.0);
    }

    vec3 landing_light(in float offset)
    {
        float fov_h = field_of_view;
        float fov_v = float(display_ysize)/float(display_xsize) * field_of_view;
        // map the view heading offset from 0..360 to -180..180 degrees
        float yaw_offset;
        if (view_heading_offset > 180.0)
            {yaw_offset = -360.0+view_heading_offset;}
        else
            {yaw_offset = view_heading_offset;}
        // shift the spot centre (in pixels) by the view offsets and the light's own offset
        float x_offset = (float(display_xsize) / fov_h * (yaw_offset + offset));
        float y_offset = -(float(display_ysize) / fov_v * view_pitch_offset);
        vec2 center = vec2 (float(display_xsize) * 0.5 + x_offset, float(display_ysize) * 0.4 + y_offset);
        float landingLightIntensity;
        float lightRadius = (float(display_xsize) *9.16 /field_of_view);
        float angularDist = length(gl_FragCoord.xy -center);
        if (angularDist < lightRadius)
        {
            landingLightIntensity = pow(cos(angularDist/lightRadius * 1.57075),2.0);
            //landingLightIntensity *= min(1.0, 10000.0/(dist*dist));
            return landingLightIntensity * vec3 (0.5,0.5, 0.5);
        }
        else return vec3 (0.0,0.0,0.0);
    }

tree-ALS.frag

tree-ALS.frag consists of

- Uniform inputs (data coming into the shader to be manipulated)
- Functions that manipulate the uniform data

Following is the actual GLSL code in tree-ALS.frag.

While there is significantly more code in tree-ALS.frag, only the code that was included for the ALS lights is being shown and discussed here.

Uniform input

Uniform data is brought into the shader in the following manner.

    uniform float landing_light1_offset;
    uniform float landing_light2_offset;
    uniform int use_searchlight;
    uniform int use_landing_light;
    uniform int use_alt_landing_light;
    uniform int quality_level;
    uniform int tquality_level;

Note that use_searchlight is declared as an incoming uniform; its value is supplied from outside the shader.

Variable data

Variables can also be defined inside the shader program itself. One such variable needed for the ALS lights is:

vec3 secondary_light = vec3 (0.0,0.0,0.0);

Functions

The functions defined in secondary_lights.frag are declared in tree-ALS.frag with these prototypes:

vec3 searchlight();
vec3 landing_light(in float offset);

You don't need any path information or include directives in the code: as long as all shader files are listed correctly in the <program> section of the effect file, they are compiled and linked into a single shader program, so the prototype is enough to call a function defined in another file. Values can be passed to and returned from GLSL functions much as in other C-like languages.

Main program

The main() function is the heart of the shader program. This is where the shader program manipulates all the data made available to it.

void main()
{
    vec3 secondary_light = vec3 (0.0,0.0,0.0);
    // the ALS lights are only evaluated when both quality levels are above 5
    if ((quality_level>5) && (tquality_level>5))
    {
        if (use_searchlight == 1)
        {
            secondary_light += searchlight();
        }
        if (use_landing_light == 1)
        {
            secondary_light += landing_light(landing_light1_offset);
        }
        if (use_alt_landing_light == 1)
        {
            secondary_light += landing_light(landing_light2_offset);
        }
    }
    // texel, dist and light_distance_fading() are defined outside this excerpt
    vec4 fragColor = vec4 (gl_Color.rgb +secondary_light * light_distance_fading(dist),1.0) * texel;
    // ... (rest of main() omitted)
}

Note how main() uses use_searchlight to check whether the corresponding property, defined in preferences.xml and changed at runtime through the property tree, is set to true (1).

Some of the other variables in the shader program serve other purposes and are fed in from property, parameter and uniform definitions unrelated to the ALS lights.
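The logic of main() can be mirrored on the CPU to see which lights end up in the sum. This C sketch is a hypothetical port: RGB triples stand in for vec3, the three light arguments stand in for the return values of searchlight() and landing_light(offset), and fading stands in for light_distance_fading(dist). Like the shader, it puts the result into a new value instead of writing back into the vertex light (gl_Color).

```c
/* Hypothetical CPU port of the ALS-light part of main() in tree-ALS.frag. */
typedef struct { double r, g, b; } RGB;

static RGB rgb_add(RGB a, RGB b)
{
    RGB c = { a.r + b.r, a.g + b.g, a.b + b.b };
    return c;
}

/* Sum the enabled secondary lights; nothing is added unless both quality
   levels are above 5, mirroring the outer if in main(). */
RGB secondary_light_sum(int quality_level, int tquality_level,
                        int use_searchlight, int use_landing_light,
                        int use_alt_landing_light,
                        RGB searchlight, RGB landing1, RGB landing2)
{
    RGB sum = { 0.0, 0.0, 0.0 };
    if (quality_level > 5 && tquality_level > 5) {
        if (use_searchlight == 1)       sum = rgb_add(sum, searchlight);
        if (use_landing_light == 1)     sum = rgb_add(sum, landing1);
        if (use_alt_landing_light == 1) sum = rgb_add(sum, landing2);
    }
    return sum;
}

/* RGB part of fragColor = vec4(gl_Color.rgb + secondary_light * fading, 1.0) * texel.
   The vertex light is only read; the lit color is a new value. */
RGB lit_color(RGB vertex_light, RGB secondary, double fading, RGB texel)
{
    RGB c = {
        (vertex_light.r + secondary.r * fading) * texel.r,
        (vertex_light.g + secondary.g * fading) * texel.g,
        (vertex_light.b + secondary.b * fading) * texel.b,
    };
    return c;
}
```

With both quality levels at 6 and only the searchlight and the first landing light enabled, a searchlight value of 0.5 and a landing light value of 0.25 per channel add up to 0.75.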

File list

Files that are directly touched by this effect include:

- preferences.xml
- agriculture.eff:
  - Inherits properties from crop << terrain-default
  - Adds program shaders (technique 2)
    - Fragment: agriculture-ALS.frag and secondary_lights.frag
  - Adds uniforms (technique 2)
- airfield.eff:
  - Inherits properties from terrain-default
  - Adds program shaders (technique 2)
    - Fragment: airfield-ALS.frag and secondary_lights.frag
  - Adds uniforms (technique 2)
- building.eff:
  - Inherits properties from model-combined-deferred << model-combined
  - Adds program shaders (technique 4)
    - Fragment: model-ALS-ultra.frag and secondary_lights.frag
  - Inherits uniforms from model-combined-deferred << model-combined
- dirt-runway.eff:
  - Inherits properties from crop << terrain-default
  - Adds program shaders (technique 2)
    - Fragment: drunway-ALS.frag and secondary_lights.frag
  - Adds uniforms (technique 2)
- model-combined.eff:
  - Inherits properties from model-default
  - Adds program shaders (technique 4)
    - Fragment: model-ALS-ultra.frag and secondary_lights.frag
  - Adds uniforms (technique 4)
- model-default.eff:
  - Adds properties
  - Adds program shaders (technique 5)
    - Fragment: model-ALS-base.frag and secondary_lights.frag
  - Adds uniforms (technique 5)
- runway.eff:
  - Inherits properties from terrain-default
  - Adds program shaders (technique 2)
    - Fragment: runway-ALS.frag and secondary_lights.frag
  - Adds uniforms (technique 2)
- terrain-default.eff:
  - Adds properties
  - Adds program shaders (technique 3)
    - Fragment: terrain-ALS-ultra.frag and secondary_lights.frag
  - Adds uniforms (technique 3)
- tree.eff:
  - Adds properties
  - Adds program shaders (techniques 4 and 5)
    - Fragment: tree-ALS.frag and secondary_lights.frag
  - Adds uniforms (techniques 4 and 5)
- urban.eff:
  - Inherits properties from terrain-default
  - Adds program shaders (techniques 1 and 2)
    - Fragment: urban-ALS.frag and secondary_lights.frag
  - Adds uniforms (techniques 1 and 2)
- water.eff:
  - Inherits properties from terrain-default
  - Adds program shaders (technique 1)
    - Fragment: water-ALS-high.frag and secondary_lights.frag
  - Adds uniforms (technique 1)
- water-inland.eff:
  - Inherits properties from terrain-default
  - Adds program shaders (technique 2)
    - Fragment: water-ALS-high.frag and secondary_lights.frag
  - Adds uniforms (technique 2)

General comments from forum discussion

In principle, we always do the same steps in the fragment shaders to determine the color of a pixel:

What is displayed on the screen in the end is whatever gl_FragColor is set to. But the location where this happens isn't always obvious: often (part of) the light is computed in the vertex shader already, in which case it typically enters the fragment shader as gl_Color. So the lighting equation in tree-haze.frag is indeed vec4 fragColor = vec4 (gl_Color.xyz,1.0) * texel; and your change to the light should happen just before that. But you can't do gl_Color.rgb = gl_Color.rgb + my_light; because gl_Color.rgb is a varying variable, and you can't assign new values to varyings inside the fragment shader, so you need to either make a new variable or just do vec4 fragColor = vec4 ((gl_Color.rgb + my_light),1.0) * texel; (note that color.rgb is the same as color.xyz; GLSL doesn't really care which convention you use, but it took me a few months to learn that, so my early code often uses the xyz indexing convention for color vectors as well).[1] (Thorsten Renk)


An effect is a container for a series of techniques, which are all the possible things you could do with some object.

The <predicate> section inside each technique determines which of the techniques we actually run. Typically the predicate section contains conditionals on a) rendering framework properties, b) OpenGL extension support and c) quality level properties. The renderer searches the techniques from lowest to highest technique number and uses the first one that fits the bill (which is why high quality levels precede low quality levels). The rendering itself is specified as <pass>; each pass runs the renderer over the whole scene. We may sometimes make a quick first pass to fill the depth buffer or a stencil buffer, but usually we render with a single pass. Techniques may not involve a shader at all; for instance, technique 12 in terrain-default is a pure fixed-pipeline fallback technique. But all the rendering you're interested in uses a shader, which means it has a <program> section which determines what actual code is supposed to run. If you run a shader, it needs parameters specified as <uniform>, and textures, which need to be assigned to a texture unit _and_ be declared as a uniform sampler. In addition, each technique contains a host of parameters configuring the fixed-pipeline elements, like alpha tests before the fragment stage, depth buffer writing/reading, alpha blending,... you can ignore them on the first go.

So if you want to find out which shader code is executed when you call the water effect at a certain quality level, you need to work your way through the predicate sections of the techniques from lowest to highest until you find the one that matches, and then look into the program section of that technique. Now, to make matters more complicated, all the parameters and textures that are assigned to uniforms and texture units in the techniques need to be declared, and linked to properties where applicable, in the <parameters> section heading each effect declaration.

So a texture you want to use has to appear three times: in the parameters section heading the effect, inside the technique assigned to a texture unit, and assigned to a uniform sampler. Now, inheritance moves all the declared parameters and techniques from one effect to the one inheriting. In the case of water, that means the technique is actually _not_ inherited, because terrain-default.eff doesn't contain a technique 1 at all, but the general <parameters> section is inherited. So you don't need to declare the additions to <parameters> again, but you do need to make the changes to the <program> and the <uniform> sections.[2] (Thorsten Renk)
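The selection rule described above (lowest technique number first, first matching predicate wins) can be modelled in a few lines of C. Everything here is a hypothetical simplification: real predicates are XML expressions over properties and OpenGL extension checks, and the technique numbers 3 and 4 and the quality thresholds are made up for illustration; only the idea of a fixed-pipeline fallback with a high number such as 12 comes from the text.

```c
#include <stddef.h>

/* Hypothetical, simplified render state; real predicates test properties
   such as quality sliders and OpenGL extension support. */
typedef struct {
    int quality_level;   /* shader quality slider, e.g. 0..6 */
    int has_glsl;        /* 1 if GLSL shaders are supported */
} RenderState;

typedef struct {
    int index;                                   /* technique number */
    int (*predicate)(const RenderState *state);  /* NULL: always applies */
} Technique;

/* Walk the techniques in ascending index order and return the index of
   the first one whose predicate holds, or -1 if none does.
   The array must already be sorted by index. */
int select_technique(const Technique *techniques, size_t count,
                     const RenderState *state)
{
    for (size_t i = 0; i < count; ++i) {
        if (techniques[i].predicate == NULL ||
            techniques[i].predicate(state))
            return techniques[i].index;
    }
    return -1;
}

/* Example predicates: the most demanding technique gets the lowest number. */
static int ultra_ok(const RenderState *s) { return s->has_glsl && s->quality_level >= 6; }
static int high_ok(const RenderState *s)  { return s->has_glsl && s->quality_level >= 3; }

/* Hypothetical technique table ending with a fixed-pipeline fallback. */
const Technique example_techniques[] = {
    { 3, ultra_ok },
    { 4, high_ok },
    { 12, NULL },
};
```

Because the search stops at the first match, putting the high-quality techniques at low numbers is what lets them win whenever their predicate holds.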


At low water shader quality, water isn't rendered separately from the terrain, i.e. it runs via terrain-default.eff; since you modified that to allow light, light works for water out of the box.

With the water shader slider set high, the techniques in water.eff kick in, and only then do you need to modify the specific water shader code. Now, the peculiar thing about water is that there are two effects (water.eff and water-inland.eff) sharing the same shader code (but calling it with somewhat different parameters). So the "function not found" error was presumably caused by you modifying water.eff, whereas the water you were seeing was initiated by water-inland.eff, and in that effect secondary_lights.frag wasn't initially in the program section. So if you alter the water shader code, you need to modify two effect files rather than one to pass the right parameters and access the functions you need. [...] Adding them _after_ fogging isn't what you want: you'll see that if visibility is below 100 m, everything will go black at night, because fog color is black at night, so finalColor.rgb of a heavily fogged object will also be black, and when you then light it up, you multiply your light value with black and it'll stay black. Or, if you add the light value (which you should only do if the object you add it to is a light color itself), you'll get a featureless grey. You want to add light after the texel color and before fogging.[3] (Thorsten Renk)
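The ordering argument can be checked with a few lines of arithmetic. The C sketch below assumes a simple linear fog model (a blend towards the fog color weighted by a transmission factor) and uses hypothetical function names; ALS computes its fog in a far more elaborate way, so treat this only as an illustration of why the light has to be added before fogging.

```c
typedef struct { double r, g, b; } RGB;

/* Hypothetical fog model: linear blend towards the fog color.
   transmission = 1.0 means clear air, 0.0 means fully fogged. */
RGB apply_fog(RGB color, RGB fog_color, double transmission)
{
    RGB out = {
        fog_color.r + (color.r - fog_color.r) * transmission,
        fog_color.g + (color.g - fog_color.g) * transmission,
        fog_color.b + (color.b - fog_color.b) * transmission,
    };
    return out;
}

/* Recommended order: add the secondary light to the vertex light,
   multiply with the texel, then fog. */
RGB light_then_fog(RGB vertex_light, RGB sec_light, RGB texel,
                   RGB fog_color, double transmission)
{
    RGB lit = {
        (vertex_light.r + sec_light.r) * texel.r,
        (vertex_light.g + sec_light.g) * texel.g,
        (vertex_light.b + sec_light.b) * texel.b,
    };
    return apply_fog(lit, fog_color, transmission);
}

/* Problematic: fog first, then add the light. At night the fogged base
   color is (close to) black, so the result is just the light itself:
   a featureless grey with no texture detail. */
RGB fog_then_add(RGB vertex_light, RGB sec_light, RGB texel,
                 RGB fog_color, double transmission)
{
    RGB base = { vertex_light.r * texel.r, vertex_light.g * texel.g,
                 vertex_light.b * texel.b };
    RGB fogged = apply_fog(base, fog_color, transmission);
    RGB out = { fogged.r + sec_light.r, fogged.g + sec_light.g,
                fogged.b + sec_light.b };
    return out;
}

/* Also problematic: fog first, then multiply by the light. With black
   night fog the fogged color is black, and so is the product. */
RGB fog_then_multiply(RGB vertex_light, RGB sec_light, RGB texel,
                      RGB fog_color, double transmission)
{
    RGB base = { vertex_light.r * texel.r, vertex_light.g * texel.g,
                 vertex_light.b * texel.b };
    RGB fogged = apply_fog(base, fog_color, transmission);
    RGB out = { fogged.r * sec_light.r, fogged.g * sec_light.g,
                fogged.b * sec_light.b };
    return out;
}
```

At night (zero vertex light, black fog), only light_then_fog keeps the texel's color ratios; the other two orders yield either a flat grey or plain black.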


So, in old times when rendering textures was slow and complicated, we rendered objects with monochromatic surface colors. Then the (schematic) lighting equation (without specular, and with the sum of ambient and diffuse already computed) was

visibleColor.rgb = objectColor.rgb * light.rgb + objectEmissive.rgb

Now we have textures, and so we get

visibleColor.rgb = objectColor.rgb * texel.rgb * light.rgb + objectEmissive.rgb + lightMapTexel.rgb

Since we can have the full color information in the texture, objectColor.rgb is usually (1.0,1.0,1.0) because the info is redundant. But if you don't use a texture, of course objectColor.rgb has the actual color value (incidentally, I think we shouldn't use monochromatic surfaces at all; they create a jarring visual impression which can be cured by using even a small texture...). But if you do, the rendering pipeline is set up to compute color.rgb = objectColor * light.rgb in the vertex shader, so the equation we have in the fragment shader is something like

visibleColor.rgb = color.rgb * texel.rgb + objectEmissive.rgb + lightMapTexel.rgb

and if we add a secondary light like

visibleColor.rgb = (color.rgb + secLight.rgb) * texel.rgb

it of course can never recover the color information, because color.rgb is zero at night (you multiplied the actual color with zero sunlight) and the texel doesn't carry information for an untextured object. Since the secondary light is in screen coordinates, it can't appear in the vertex shader, so the solution would be to pass the actual color and light rather than their product to the fragment shader. That is expensive, because we need another varying vec3, and varying variables fill memory and need to be computed and interpolated per vertex/per fragment, which is why I'm not sure whether we shouldn't accept the loss of the color...[4] (Thorsten Renk)
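The color loss can be reproduced with plain numbers. The following C sketch compares the two schemes from the quote for an untextured red object; it ignores the emissive and light map terms, and the function names are hypothetical.

```c
typedef struct { double r, g, b; } RGB;

static RGB mul(RGB a, RGB b) { RGB c = { a.r * b.r, a.g * b.g, a.b * b.b }; return c; }
static RGB add(RGB a, RGB b) { RGB c = { a.r + b.r, a.g + b.g, a.b + b.b }; return c; }

/* Current scheme: the vertex shader outputs color = objectColor * light,
   and the fragment shader computes (color + secLight) * texel. */
RGB product_scheme(RGB object_color, RGB light, RGB sec_light, RGB texel)
{
    RGB color = mul(object_color, light);   /* computed per vertex */
    return mul(add(color, sec_light), texel);
}

/* Alternative: pass objectColor and light separately (one more varying
   vec3), so the secondary light is tinted by the object color as well. */
RGB separate_scheme(RGB object_color, RGB light, RGB sec_light, RGB texel)
{
    return mul(mul(object_color, add(light, sec_light)), texel);
}
```

For a red object (1, 0, 0) with a white texel at night (zero sunlight) and a grey secondary light of 0.5, product_scheme returns a neutral grey (0.5, 0.5, 0.5), while separate_scheme returns a dim red (0.5, 0, 0). In daylight without the secondary light, both return the same color.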


Inheritance works like this:

The base effect has a list of things: A1 B1 C1 D1. The second effect inherits from 1 but just declares C2 and E2, so then the list is A1 B1 C2 D1 E2. The third effect inherits from 2 and declares A3, so then the list is A3 B1 C2 D1 E2, whereas if the third effect inherited from 1, the list would be A3 B1 C1 D1. So if an item is already present, inheritance overrides it; if it is not present, inheritance adds it. I suspect that's why programs need to be indexed, so that they override and don't add...[5] (Thorsten Renk)
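The override-or-add rule is essentially a keyed merge. Below is a hypothetical C model (not how FlightGear's effect loader is implemented) in which every declared item has a key and remembers which effect declared it.

```c
#include <string.h>

#define MAX_ENTRIES 16

/* Hypothetical model of effect inheritance. Each entry stands for one
   declared item; key identifies it, source records the declaring effect. */
typedef struct {
    char key[8];
    int source;
} Entry;

typedef struct {
    Entry items[MAX_ENTRIES];
    int count;
} EntryList;

/* Start from the parent's list and apply the child's declarations:
   an entry with an existing key overrides it, a new key is appended. */
EntryList inherit(EntryList parent, const Entry *decls, int n)
{
    EntryList result = parent;
    for (int i = 0; i < n; ++i) {
        int found = 0;
        for (int j = 0; j < result.count; ++j) {
            if (strcmp(result.items[j].key, decls[i].key) == 0) {
                result.items[j] = decls[i];   /* already present: override */
                found = 1;
                break;
            }
        }
        if (!found && result.count < MAX_ENTRIES)
            result.items[result.count++] = decls[i];  /* not present: add */
    }
    return result;
}

/* Return the effect that declared key, or 0 if the key is absent. */
int source_of(const EntryList *list, const char *key)
{
    for (int i = 0; i < list->count; ++i)
        if (strcmp(list->items[i].key, key) == 0)
            return list->items[i].source;
    return 0;
}
```

Feeding it the declarations from the example (A1 B1 C1 D1, then C2 E2, then A3) gives A3 B1 C2 D1 E2, while applying A3 directly to the base list gives A3 B1 C1 D1. In this model, giving items a stable key is what makes a child's declaration replace the parent's rather than sit next to it, which fits the suspicion that program entries are indexed for that reason.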


- [1] Thorsten Renk (Tue Oct 07, 2014 12:04 -0700). ALS landing lights.
- [2] Thorsten Renk (Wed Oct 08, 2014 1:58 -0700). ALS landing lights.
- [3] Thorsten Renk (Wed Oct 08, 2014 11:19 -0700). ALS landing lights.
- [4] Thorsten Renk (Sat Oct 11, 2014 1:28 -0700). ALS landing lights.
- [5] Thorsten Renk (Sat Oct 11, 2014 11:33 -0700). ALS landing lights.