CompositeViewer support

| Started in | July 2020 |
|---|---|
| Description | Support for multiple independent scene views |
| Contributor(s) | Julian Smith |
| Status | |


Motivation

Until mid-2020, FlightGear supported only one view position at a time.[4]

Aircraft could define their own views, but only one view could be active at a time. No matter how many windows and cameras were defined in Defaults.xml, they were all relative to the current view in FG (e.g. cockpit, tower). [5]

Back in 2008, Tim Moore provided a patch (search the FlightGear mailing lists for CameraGroup) to use the osgViewer class to set up windows, manage the main camera, etc. [6]

However, these windows had to use the same camera group as the main window, so they could only show the view from the same eye position, though typically at a different angle or offset. This made it possible to emulate things like the side windows of a cockpit, displayed in a different window or on a different monitor.[7]

Mathias Fröhlich used the slave camera feature of osgViewer to provide a "video wall"-style of multiple displays that was demonstrated at LinuxTag for years. Later on, Tim generalized this to support general monitor arrangements (like a panoramic arc) and general combinations of screens and graphics cards. [8]

The default OSG model is that slave cameras are different views offset from a common viewpoint. This is easy to understand when considering a camera's view matrix, but not necessarily intuitive when thinking about the projection matrix. Because FG has its own view system, we mostly treated the slaves as independent. It seems that most other uses of cameras during rendering, for example render-to-texture cameras for effects, are best handled by slave cameras with independent views as well.[9]

People requiring multiple independent views on the same scenery, e.g. cockpit and tower view [...] these each need their own camera groups and so require OSG's CompositeViewer.[10]

And that was not really supported by the previous architecture, neither by the tile cache nor by osgViewer::Viewer. We needed to move to a CompositeViewer model, which supports several scene graphs, and rely completely on the OSG database paging machinery.[11]

That required a change in the previous FlightGear architecture to use a CompositeViewer instead of a single Viewer, but we were contemplating that anyway.[12]

The cameras in a camera group don't need to render directly to the screen. They can render to a texture which can be used either in the scene, like in a video screen in the instrument panel, or for distortion correction in a projected or dome environment. [13]

OpenSceneGraph provides a CompositeViewer class that supports rendering from several widely separated viewpoints, complete with support for multiple terrain pager threads. We had to move to CompositeViewer to support simultaneous views from, e.g., the tower, AI models, drones, etc.[14]

Neither of these was previously supported. We had to start using a different OSG class, CompositeViewer, to support multiple views from independent viewpoints. Our terrain pager needed a complete overhaul to use the PagedLOD scheme of OSG, and the FlightGear view manager needed to become aware of multiple active views.[15]

CompositeViewer

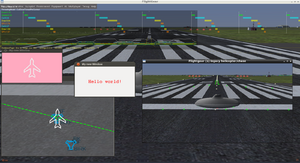

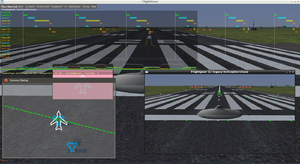

CompositeViewer allows multiple independent views of the FlightGear scene, e.g. in independent top-level windows. Each view can look from a different location and have separate scenery loaded, using its own scenery pager.[16]

This is primarily useful when views need access to scenery (tiles) that is no longer in the cache of the main aircraft, for instance a tower view of the destination airport, or payload-specific views (think missiles) that may be out of range and thus need their own scenery DB pager. In other words, CompositeViewer (CV) support is needed whenever a view can no longer be easily defined/described as a "child view" of the main aircraft.

Previously, we were using the Viewer class, which "is a" View rather than "has a" View. The Viewer class is a special case for handling a single View, and makes it as easy as possible to set up a viewer with a single View of a single Scene. Slave Cameras can be used in conjunction with the Viewer in cases where the view is made up of separate windows or rendering effects that all contribute towards providing the intended view. Think of a car simulator where you have a monitor for the windscreen, and a left and right monitor for the left and right door windows: you'd have three windows that are controlled by a single master view direction, each one offset from this direction and each mapping to a GraphicsWindow.

The CompositeViewer is a more complex and more flexible class that "has a" list of Views. Each View can have its own unique Scene or share a Scene. Each View can be composed of a single master Camera or of a set of slave Cameras as above. Multiple Views are useful when you have independent views of a scene, such as a 3D view and a map view inset, where the scene is the same but the camera that controls the view direction can be moved independently. Alternatively you can have independent Scenes in each View, or mix and match to your heart's content. [17]
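The "is a" versus "has a" distinction described above can be sketched with a few toy types. This is only an illustration: `Scene`, `View`, `SingleViewer` and `MultiViewer` are hypothetical stand-ins written for this page, not the osgViewer classes, and they model ownership only, not rendering.

```cpp
#include <cstddef>
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Toy stand-ins for illustration only; these are NOT the osgViewer classes.
struct Scene {
    std::string name;
};

struct View {
    std::shared_ptr<Scene> scene;  // a scene may be shared between views
    double headingDeg = 0.0;       // each view keeps its own camera direction
};

// "is a": a single-view viewer inherits from View, so it is its one and only view.
struct SingleViewer : View {
    std::size_t viewCount() const { return 1; }
};

// "has a": a composite viewer owns a list of independent views.
class MultiViewer {
public:
    View& addView(std::shared_ptr<Scene> scene, double headingDeg) {
        views_.push_back(std::make_unique<View>());
        View& view = *views_.back();
        view.scene = std::move(scene);
        view.headingDeg = headingDeg;
        return view;
    }

    std::size_t viewCount() const { return views_.size(); }

private:
    std::vector<std::unique_ptr<View>> views_;
};
```

Two views added to one `MultiViewer` with the same `Scene` pointer share the scene but keep separate headings, which corresponds to the 3D view plus map view case mentioned above.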

Status updates

- 2021-08-27: Fernando has begun working on moving Canvas cameras out of the scene graph into dedicated PRERENDER cams (This was a long-standing issue where Canvas cameras were rendered multiple times, once per slave camera. In the case of the Classic pipeline they were being rendered twice.), see:

- 2021-08-13: Flickering (and eventual crashing) of Canvas avionics/displays (textures) in conjunction with CompositeViewer seems to be related to using some of the more sophisticated OSG threading modes in combination with vector graphics (based on Shiva via Canvas Path elements) that lack serialization; the problem shows up when OSG tries to render frames concurrently.[18] For now, please use single-threaded mode only, because Shiva cannot be considered thread-safe, i.e. it needs explicit serialization in conjunction with OSG threading. [19]

- 2021-08-06: Various improvements to extra views - see: FlightGear commit 7ed93e4

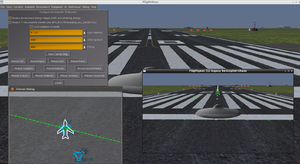

- 2021-08-03: Jules pushed a change to fgdata on next to default to CompositeViewer (by setting --prop:/sim/rendering/composite-viewer-enabled=1). It can be turned off with the command-line option --composite-viewer=0.[20]

- 2021-05-14: Fernando committed a preliminary fix for the osgText issue, which might mean that it will be possible to switch to OSG 3.6 soon'ish, i.e. once we have gathered sufficient feedback [21]

- 2021-02-08:

  - Pan extra view windows in response to mouse left button drag. Unlike the main view, panning is scaled by fov/window size, so it is easy to control even at high zoom levels.

  - Support Tower View AGL in extra view windows.

  - Added damping to chase views in extra view windows.

  - Possible fix for crash on reset with composite viewer.

- 2020-12-12: One can now configure extra view windows using properties.

- 2020-11-21: Merged into next: CompositeViewer:Support for multiple view windows using osgViewer::CompositeViewer [22]

- 2020-11-16: Some progress on Canvas Views was made at the Hackathon.

- 2020-10-05: Issues with window resize/close appear to be bugs in OpenSceneGraph-3.4, and are fixed by building with OpenSceneGraph-3.6.

- 2020-09-27: Extra view windows now show textures and clouds etc, and rendering appears to be identical to the main view. This works by creating a new Compositor instance for each extra view window, and calling its update() method each frame.

General information

- Use of CompositeViewer is enabled at runtime with: --composite-viewer=1

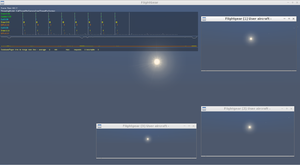

- Video showing extra view windows and initial implementation of Canvas View: cvcanvas-demo.ogv

- When enabled, CompositeViewer requires OpenSceneGraph-3.6 to work well.

- For more details see: ExtraViewWindows

- CompositeViewer support will allow us to render a view to a canvas and implement things like rear-view mirrors etc - see: Canvas_View_Camera_Element.

Problems

- for the time being, use of reset/re-init and aggressive OSG threading options seems to cause stability problems even without having cloned any views [23]

- the PUI based fps/frame spacing counter implemented in $FG_ROOT/Nasal/gui.nas could probably be replaced by a Canvas implementation rendering to the Canvas desktop using the Tooltips backend [24]

- for some people there seem to be Compositor related event handling regressions which we should keep track of once we begin supporting events per view [25]

Current limitations

- for the time being, Canvas-based features using Canvas Path (Shiva) [28] (MFDs/avionics) won't play nicely with CompositeViewer in multi-threaded mode. [29] [30] A patch shared on the forum that adds explicit synchronization using a lock/mutex [31] seems to solve the problem for a number of users. [32] Specifically, people reported:

  - The canvas displays are flickering when compositeviewer is at play...enabling CV support completely breaks canvases: https://seafile.merspieler.tk/f/defeb82cbabf40198169/?dl=1 [33]

  - Even after closing the compositeviewer window some canvases stay broken. [34]

  - The Canvas glass panels flicker [...]It happens only in multi-display mode with threading enabled: https://www.youtube.com/watch?v=byU0dv7bjHg [35]

- we cannot currently move/use any PUI/Canvas UI in separate windows

- users have been reporting a delay/freeze when cloning views [36]

- Extra view windows don't have keyboard handling, so for example one cannot zoom with x/X.

- Sview does not currently support Fly-past view.
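The kind of explicit lock/mutex synchronization mentioned in the first limitation above can be sketched as follows. This is a minimal illustration and not the patch from the forum: `PathRenderer` and `renderConcurrently` are hypothetical names, and the counter merely stands in for backend state that is not thread-safe (the real Shiva API is not used here).

```cpp
#include <mutex>
#include <thread>
#include <vector>

// Hypothetical stand-in for a vector-graphics backend whose internal state
// is not thread-safe (this is NOT the Shiva API).
class PathRenderer {
public:
    void drawPath(int segments) {
        // Serialize every entry into the backend with a single lock.
        std::lock_guard<std::mutex> lock(mutex_);
        for (int i = 0; i < segments; ++i) {
            ++segmentsDrawn_;  // stands in for unsynchronised backend state
        }
    }

    long segmentsDrawn() const {
        std::lock_guard<std::mutex> lock(mutex_);
        return segmentsDrawn_;
    }

private:
    mutable std::mutex mutex_;
    long segmentsDrawn_ = 0;
};

// Simulates several draw threads rendering paths at the same time.
long renderConcurrently(int threadCount, int pathsPerThread, int segmentsPerPath) {
    PathRenderer renderer;
    std::vector<std::thread> threads;
    for (int t = 0; t < threadCount; ++t) {
        threads.emplace_back([&renderer, pathsPerThread, segmentsPerPath] {
            for (int p = 0; p < pathsPerThread; ++p) {
                renderer.drawPath(segmentsPerPath);
            }
        });
    }
    for (std::thread& thread : threads) {
        thread.join();
    }
    return renderer.segmentsDrawn();
}
```

With the lock in place, the total is the same regardless of how the threads interleave. The cost is that path rendering becomes serialized, which is also what running in single-threaded mode achieves.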

Code

Latest code can be found on the 'next' branch of flightgear, simgear and fgdata. As of 08/2021, CompositeViewer support is enabled by default on next.

Testing

Caution: For the time being, it is recommended to explicitly enable single-threaded mode when using CompositeViewer in conjunction with Canvas-related features.[37][38]

You can test this using several windows on the same camera group; there is no need for several monitors. Here is the test setup Fernando is using, just two 800x600 windows [39]: https://pastebin.com/5HVsre8G

```xml
<?xml version="1.0"?>
<PropertyList>
  <sim>
    <rendering>
      <camera-group>
        <window>
          <name type="string">main</name>
          <host-name type="string"></host-name>
          <display>1</display>
          <screen>0</screen>
          <x>0</x>
          <y>0</y>
          <width>800</width>
          <height>600</height>
          <decoration type="bool">true</decoration>
          <fullscreen type="bool">false</fullscreen>
        </window>
        <window>
          <name type="string">secondary</name>
          <host-name type="string"></host-name>
          <display>1</display>
          <screen>0</screen>
          <x>800</x>
          <y>0</y>
          <width>800</width>
          <height>600</height>
          <decoration type="bool">true</decoration>
          <fullscreen type="bool">false</fullscreen>
        </window>
        <camera>
          <name type="string">middlecamera</name>
          <window>
            <name>main</name>
          </window>
          <view>
            <heading-deg type="double">45.0</heading-deg>
          </view>
          <frustum>
            <top>0.133</top>
            <bottom>-0.133</bottom>
            <left>-.1668</left>
            <right>.1668</right>
            <near>0.4</near>
            <far>120000.0</far>
          </frustum>
        </camera>
        <camera>
          <name type="string">leftcamera</name>
          <window>
            <name>secondary</name>
          </window>
          <view>
            <heading-deg type="double">0.0</heading-deg>
          </view>
          <frustum>
            <top>0.133</top>
            <bottom>-0.133</bottom>
            <left>-.1668</left>
            <right>.1668</right>
            <near>0.4</near>
            <far>120000.0</far>
          </frustum>
        </camera>
        <gui>
          <window>
            <name type="string">main</name>
          </window>
        </gui>
      </camera-group>
    </rendering>
  </sim>
</PropertyList>
```

Background

The natural way to manage an application that has two views onto two different scenes is to use an osgViewer::View for each separate scene, and then an osgViewer::CompositeViewer to manage these two scenes. These two views can share the same GraphicsWindow, or have their own. They may even be added to or removed from the CompositeViewer, or have their rendering toggled on/off via NodeMasks on the master Camera of each View.[40]

The Viewer class is the simplest form of viewer and inherits from osgViewer::View, so it has a single master Camera and zero or more slave Cameras. The CompositeViewer class, in contrast, contains a list of osgViewer::View objects; again, each of these Views has a single master Camera and zero or more slave Cameras.[41]

The osgViewer::CompositeViewer/Viewer architecture is designed to support one frame loop driving all the windows associated with that viewer, not multiple places trying to dispatch frame(). So use a single timer, or use multiple viewers.[42]

- As a general approach, if you want multiple Views which have their own Scenes or share Scenes, then the appropriate class to use is CompositeViewer, as it is written specifically for this purpose.[43]

- The usual way to manage multiple window views of a single scene graph is to use a CompositeViewer with multiple Views, each View using its own graphics window or sharing one. [44]

- What CompositeViewer provides is not so much performance improvement across the board, but rather far better granularity of design.[45]

- The CompositeViewer and Viewer should have exactly the same performance characteristics w.r.t managing multiple cameras - as it's exactly the same ViewerBase code underneath that is managing all the threading and graphics rendering.[46]

- CompositeViewer and Viewer share much of their implementation; the only key difference is that Viewer "is a" View, while CompositeViewer has a list of Views. All the event handling, camera manipulator and scene graph setup is done at the View level, so the API to access it is identical. [47]

Views

The OSG is designed to allow you to render multiple views at once. There is no need to clone the scene graph; you simply add another View to a CompositeViewer to add the extra rendering. You can toggle optional Views on/off as you need them. [48]

For example, you could have a working set of Views that share the scene graph and share the same graphics context. All these Views would be added to the CompositeViewer. At startup these Views would be disabled by setting their View Camera's NodeMask to 0x0.

When you need to render a View for a client, you'd enable a View if one is attached and not yet enabled, by setting its Camera's NodeMask to 0xffffffff. If you run out of Views, simply create a new one for the purpose. Potentially you could do this on demand, so have none at startup.

When you no longer need a View you could remove it, but it's lighter to just disable it via the NodeMask trick.

With this approach you aren't creating/deleting graphics contexts, so you will lower memory usage and get better performance.[49]
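The working-set idea described above can be sketched as a small pool. This is a toy model under stated assumptions: `Camera`, `View` and `ViewPool` are hypothetical types for illustration, and only the two node-mask values (0x0 for disabled, 0xffffffff for enabled) are taken from the text. In a real application the same toggling would be applied to the camera of each osgViewer::View.

```cpp
#include <cstddef>
#include <cstdint>
#include <memory>
#include <vector>

// Toy stand-ins; only the node-mask values mirror the text above.
struct Camera {
    std::uint32_t nodeMask = 0xffffffffu;
};

struct View {
    Camera camera;
    bool enabled() const { return camera.nodeMask != 0x0u; }
};

class ViewPool {
public:
    // Create the working set up front, with every view disabled.
    explicit ViewPool(std::size_t initialViews) {
        for (std::size_t i = 0; i < initialViews; ++i) {
            views_.push_back(std::make_unique<View>());
            views_.back()->camera.nodeMask = 0x0u;
        }
    }

    // Re-enable a disabled view if one exists, otherwise create a new one.
    View* acquire() {
        for (auto& view : views_) {
            if (!view->enabled()) {
                view->camera.nodeMask = 0xffffffffu;
                return view.get();
            }
        }
        views_.push_back(std::make_unique<View>());
        return views_.back().get();
    }

    // Disabling keeps the view (and its backend) around for later reuse.
    void release(View* view) { view->camera.nodeMask = 0x0u; }

    std::size_t size() const { return views_.size(); }

    std::size_t activeCount() const {
        std::size_t count = 0;
        for (const auto& view : views_) {
            if (view->enabled()) ++count;
        }
        return count;
    }

private:
    std::vector<std::unique_ptr<View>> views_;
};
```

Releasing a view and acquiring again returns the same object, so nothing is created or destroyed on the common path.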

Note: the slave osg::Cameras aren't direct children of the View's master osg::Camera, but they have their view and projection matrices updated from the master camera.

Note II: osgViewer only threads Cameras that are in the viewer, not ones enclosed in the scene graph, so your Camera in Camera won't thread.[50]

- The right way to remove a view is outside of frame(), not from an event handler within the view. Removing it from a handler will crash, as you'll be deleting the object you are doing the work from.[51]

- If you want to keep the view around for future use then perhaps the easiest way to do it would be to disable the View's camera by setting its NodeMask to 0x0 i.e. view->getCamera()->setNodeMask(0x0); [52]
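One way to respect the "remove outside of frame()" rule from the list above is to let handlers only queue a removal request, and to process the queue between frames. The sketch below is a toy model: `Viewer`, `View`, `requestRemoval` and `flushRemovals` are hypothetical names, not osgViewer API.

```cpp
#include <algorithm>
#include <cstddef>
#include <functional>
#include <memory>
#include <vector>

// Toy stand-ins for illustration only.
struct View {
    int id = 0;
    std::function<void()> onEvent;  // event handler run during frame()
};

class Viewer {
public:
    View* addView(int id) {
        views_.push_back(std::make_unique<View>());
        views_.back()->id = id;
        return views_.back().get();
    }

    // Safe to call from inside a handler: it only records the request.
    void requestRemoval(View* view) { pendingRemovals_.push_back(view); }

    // Runs every view's handler; never destroys a view.
    void frame() {
        for (auto& view : views_) {
            if (view->onEvent) view->onEvent();
        }
    }

    // Call between frames: this is where views are actually destroyed.
    void flushRemovals() {
        auto isPending = [this](const std::unique_ptr<View>& view) {
            return std::find(pendingRemovals_.begin(), pendingRemovals_.end(),
                             view.get()) != pendingRemovals_.end();
        };
        views_.erase(std::remove_if(views_.begin(), views_.end(), isPending),
                     views_.end());
        pendingRemovals_.clear();
    }

    std::size_t viewCount() const { return views_.size(); }

private:
    std::vector<std::unique_ptr<View>> views_;
    std::vector<View*> pendingRemovals_;
};
```

A main loop would then call `frame()` followed by `flushRemovals()`, so a view is never deleted while its own handler is still on the call stack.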

In terms of closing a View, does this View have its own GraphicsWindow(s)? If not, then just setting the View's Camera's NodeMask to 0 will switch it off, and setting it to 0xffffffff will switch it back on again. If the View does have its own GraphicsWindow, then you'll need to close the window and switch off the rendering via the NodeMask [...] The other approach is to simply remove the View from the CompositeViewer and add a new one back in when you need it. The NodeMask route is the lightest-weight route though, and is what I'd do if it's possible. [53]

DatabasePager

The OSG has the osgDB::DatabasePager, whose sole purpose is to do multi-threaded paging of databases. The osgViewer::Viewer and CompositeViewer both support it out of the box, as did osgProducer::Viewer before them. There is nothing you need to do in your app other than load a paged database. Paged databases in the OSG are ones that contain osg::PagedLOD nodes or are loaded via the TXP plugin.[54]

- osgViewer is set up so that the Scene object manages the scene graph and the database pager associated with that scene graph. There is one Scene object per scene graph, and multiple views should share the same Scene instance if they share the same scene graph. By virtue of this sharing, the Scene shouldn't go out of scope while at least one View still references it, and neither should its associated DatabasePager.[55]

- osgViewer::Viewer/CompositeViewer all have the DatabasePager built into them, and will automatically run the database pager thread on demand and take care of all the operations required to manage a paged database.[56]

- it might just be far more productive to use the OSG's built-in database paging support. All you need to do is decorate your subgraphs with an osg::PagedLOD or osg::ProxyNode, with osg::PagedLOD being the method of choice as it will do load balancing for you, both loading new tiles on demand and deleting ones that are no longer required, all done automatically by osgDB::DatabasePager/osgViewer. [57]

- The OSG has a database paging class called osgDB::DatabasePager that is built into the osgViewer::Viewer/CompositeViewer and will automatically load databases and merge them with the main scene graph during the update traversal. This is built around the osg::PagedLOD class, but you can also use the osg::ProxyNode.[58]

- Normally one should never need to worry about constructing or destructing the DatabasePager; it should happen behind the scenes, managed by osgViewer. osgViewer::Scene is used internally by osgViewer to manage one DatabasePager per scene graph. The Scene object will be shared automatically between Views if you assign the same Node pointer when you call View::setSceneData. When a Scene object is destructed, its DatabasePager will be destructed if no other references to it exist. If you have multiple Views, then you should be using CompositeViewer, not multiple Viewers. If you are creating and destroying views regularly, then you are probably best off enabling/disabling them by setting the View's master Camera's NodeMask to 0x0 and back to 0xffffffff, as this will switch off rendering but keep the backend around, ready to be re-enabled.[59]

- osgViewer automatically shares a single DatabasePager between Views when the Views share the same scene graph. This is required to avoid inconsistencies in the scene graph causing errors. Sharing a single DatabasePager doesn't prevent that pager from handling multiple viewpoints at the same time; in fact it knows nothing about viewpoints and only handles database requests for tiles, so it is totally agnostic to how you manage your viewpoints. Everything should basically just work out of the box without any need for specific settings from yourself.[60]
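The sharing rule described in the list above (one Scene, and therefore one pager, per scene graph root, shared by every View that is given the same Node pointer) can be modelled roughly as below. This is a sketch of the idea only: `SceneRegistry`, `sceneFor` and the other types are hypothetical, and how osgViewer implements the lookup internally is not shown here.

```cpp
#include <cstddef>
#include <map>
#include <memory>

// Toy stand-ins for illustration only.
struct Node {};
struct Pager {};  // stands in for the per-scene database pager

struct Scene {
    std::shared_ptr<Node> root;
    Pager pager;  // exactly one pager per scene
};

class SceneRegistry {
public:
    // Returns the existing Scene for this root node, or creates one.
    std::shared_ptr<Scene> sceneFor(const std::shared_ptr<Node>& root) {
        std::weak_ptr<Scene>& slot = scenes_[root.get()];
        if (std::shared_ptr<Scene> existing = slot.lock()) {
            return existing;
        }
        auto scene = std::make_shared<Scene>();
        scene->root = root;
        slot = scene;  // the registry itself does not keep the scene alive
        return scene;
    }

    // Number of scenes that are still referenced by at least one view.
    std::size_t liveScenes() const {
        std::size_t count = 0;
        for (const auto& entry : scenes_) {
            if (!entry.second.expired()) ++count;
        }
        return count;
    }

private:
    std::map<const Node*, std::weak_ptr<Scene>> scenes_;
};
```

Because the registry holds only weak references, a scene and its pager disappear once the last view holding them lets go, which matches the lifetime behaviour described in the quotes.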

The existing DatabasePager functionality can page over the network, but this isn't an issue for the DatabasePager; it's purely a function of the net plugin that does the loading over HTTP. This means paging and reading across the network are completely orthogonal and can be mixed and matched at will.

The Viewers already have support for adding and removing subgraphs from the main scene graph via the DatabasePager. You needn't add your own code as long as the database is set up in a way that utilises the DatabasePager.

You can write your own DatabasePager functionality, but it is likely to be a lot less work just to use the built-in paging support; this way the only task for you is how to build your database. Teaching you how to reimplement existing functionality really is way beyond the level of support I can provide for free.[61]

Crashing

SingleThreaded means that frame() doesn't return until the draw threads have completed. At that time, you are free to make any changes you want. In other threading models, the draw threads might still be active after frame() returns, but you are guaranteed that objects with dynamic data variance have been processed (only static or unspecified data variance is left for the draw threads to work on).

The update NodeVisitor is for convenience only and isn't required for any modifications.

If your instability (crash?) goes away in SingleThreaded mode, then the issue is either improper use of data variance, or you have a thread safety issue in one of your callbacks. If you are seeing a crash when running single-threaded, then DataVariance won't be the issue and you'll need to look elsewhere. Unfortunately there is too little information about the exact problem you are seeing on your system to be able to guess at what the problem might be.

A general note about DataVariance: in the OSG there are internal Nodes of the scene graph, such as Group, Transform, Geode etc. Setting DataVariance on these is only a hint to code that might optimize the scene graph about what is safe to replace and change and what isn't. Unless you are calling the osgUtil::Optimizer, it is pretty likely that setting DataVariance on a Node in the scene graph will have no effect on the end result, as the update, cull and draw traversals all ignore the Node's DataVariance settings.

The only time that DataVariance is used is when the viewer is run multi-threaded with the DrawThreadPerContext or CullThreadPerCameraDrawThreadPerContext threading models, and then it's only the draw traversal that uses the DataVariance. Since the draw traversal only has references to the Drawable and StateSet leaves of the scene graph, it's only the DataVariance on these that is checked. This means the nodes and any state attributes attached to a StateSet are ignored.[62]

First, try the CullDrawThreadPerContext threading model to see if that works safely. If it does, then the issue is almost certainly down to some StateSet or Drawable in your scene graph whose contents you are modifying without having its DataVariance set to DYNAMIC.[63]

You only need to set the Node DataVariance as a hint to the osgUtil::Optimizer, which is typically only used after loading a new model; the Node DataVariance is not used during the update, event, cull or draw traversals.

However, when using DrawThreadPerContext or CullThreadPerCameraDrawThreadPerContext threading models the StateSet and Drawable DataVariance is used to prevent dynamic leaves of the scene graph being updated and rendered at the same time - the draw traversal holds back the main thread till all the dynamic objects have been dispatched.[64]

The DrawThreadPerContext and CullThreadPerCameraDrawThreadPerContext threading models use the DataVariance of StateSets and Drawables to decide when it's safe to let the next frame advance.

It's only the contents of StateSets and Drawables that you need to mark as DYNAMIC if their contents are changing. If only their ownership is changing, then you don't need to set them to DYNAMIC. Even if you remove the StateSet or Drawable from the scene graph it's still safe, as the rendering back end takes a reference to the StateSets and Drawables that it's about to render.

Nodes do not need to be set to DYNAMIC for the purposes of the update, event, cull and draw traversals. The only part of the OSG that checks the DataVariance of Nodes is the osgUtil::Optimizer. [65]

Changing the scene graph during the event traversal shouldn't be a problem and shouldn't require you to set the Drawable and StateSet to DYNAMIC unless you are modifying the contents of these objects. The only area that might be worth looking at is whether the draw traversal is accessing the parent lists of the Drawable and/or StateSet while these are being modified by the event traversal.[66]

Data Variance

The OSG is built for multi-threading of osgViewer Views in various ways. It's not general multi-threading support; it's designed specifically for a scene graph, so as to keep the overheads of that multi-threading to a minimum.

The multi-threading that osgViewer provides is managed by osgViewer itself so it can marshal all the tasks and synchronization correctly. [67]

OpenGL only supports thread per context, so each context can only be driven by a single thread at any time. The OSG's viewer threading is built around this constraint. Principally the rendering threads (cull and draw) don't write to the scene graph, they just read from it. There are a limited number of exceptions where nodes are view dependent and cache state within the node. This means it should be safe to run an intersection traversal at the same time as viewer.renderingTraversals() is running.

The OSG by default will use separate OpenGL objects and associated buffers for each graphics context. If you enable sharing of GL objects between contexts, then you'll need to run the application single-threaded to avoid these shared GL objects and associated buffers being contended.

You can't use DrawThreadPerContext when sharing contexts except when your scene graph uses no OpenGL objects whatsoever: no display lists, no VBOs, no textures etc. Basically, if you want shared contexts you have to use SingleThreaded so the different threads don't contend for the same resources.[68]

In the most general and conservative sense, you should set dynamic data variance on anything you need to change.

But in reality, you only need to do this for objects that are referenced by the draw list / render graph. Unfortunately, there is no (header file doxygen) documentation that tells you what goes in the render graph and what doesn't. So you can either take the conservative approach, or you can dig into the source to determine whether you can get away with not marking something dynamic, even though you know you're going to modify it.

For me, this boils down to a 5 second code change (to mark it dynamic) versus possibly hours of digging into the code. So I usually take the conservative approach.

Specifically regarding MatrixTransform, marking it as dynamic (as I did in the Quick Start Guide) isn't necessary, as OSG's render graph is composed of copies / concatenations of the matrix itself, and doesn't hold references to the MatrixTransform node per se.

Note also that data variance is used by the osgUtil::Optimizer. Other code in OSG might also reference data variance for various purposes.

The DataVariance property of osg::Object is used in a couple of ways. For internal nodes of the scene graph it is used as a hint to operations such as the osgUtil::Optimizer that parts of the scene graph are static or dynamic, and as a guide to what can be optimized and in what ways; generally it means leaving DYNAMIC nodes alone. The DataVariance property on Nodes isn't used during the update, cull and draw traversals, so it's effectively ignored.

For the leaves of the scene graph, the osg::StateSet and osg::Drawable (and its subclasses), the DataVariance is only used with the DrawThreadPerContext and CullThreadPerCameraDrawThreadPerContext threading models, where it is used during the draw traversal to prevent the next frame from commencing until all DYNAMIC StateSets and Drawables have been dispatched. For the SingleThreaded and CullDrawThreadPerContext threading models the DataVariance is not used, as the next frame doesn't commence until the whole draw dispatch is completed.

The DataVariance of the StateAttribute subclasses is not used in the update, cull or draw traversals and, as with nodes, is only used by specialist traversals like some of the ones contained in the Optimizer. So if you want to dynamically update a Uniform, the way to make sure that draw doesn't overlap the update of it is to set the DataVariance of the StateSet that encloses it to DYNAMIC. There isn't an automatic scheme to check the DataVariance of StateAttributes, as it would be prohibitively expensive to do during the draw traversal.[69]

In all of the OSG 2.x series the DataVariance is used during the draw traversal to monitor when all DYNAMIC StateSets and Drawables have been dispatched, as once they have been, the next frame can be started in parallel while the remaining STATIC objects are rendered (in the DrawThreadPerContext and CullThreadPerCameraDrawThreadPerContext threading models).

The rule of thumb is that the DataVariance should be set to DYNAMIC on instances that change once they have been added to the scene graph. The short reason is that this allows the cull and draw threads to correctly handle and stage any changes that may be made to the instances in the app threads. [70]

With the DrawThreadPerContext and CullThreadPerCameraDrawThreadPerContext threading models the static part of the rendering can be done in parallel with the next frame. You guessed this correctly.

The one thing I'd add is that the OSG itself doesn't attempt to sort DYNAMIC objects so that they are drawn first. You can set up your StateSet::RenderBinDetails to force the dynamic objects to be drawn first, but you can only do this for objects that don't affect the rendering of other objects and aren't affected by what is in the frame buffer already.

StateSets and Drawables must be marked as DYNAMIC if you plan to change them. That's because they are used by the rendering stage, which can overlap the next frame's update.
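The hold-back rule described in the paragraphs above can be made concrete with a small model. This is a simplification for illustration, not OSG code: the enumerator names mirror the threading models and data variance values named in the text, while `entriesBeforeNextFrame` is a hypothetical helper that treats the draw traversal as a flat, ordered list of leaves.

```cpp
#include <cstddef>
#include <vector>

// Names mirror the terms used in the text; this is a model, not OSG code.
enum class DataVariance { Static, Dynamic, Unspecified };

enum class ThreadingModel {
    SingleThreaded,
    CullDrawThreadPerContext,
    DrawThreadPerContext,
    CullThreadPerCameraDrawThreadPerContext
};

// Returns how many entries of the draw list must be dispatched before the
// next frame is allowed to start. The list holds the data variance of each
// StateSet/Drawable in dispatch order.
std::size_t entriesBeforeNextFrame(const std::vector<DataVariance>& drawList,
                                   ThreadingModel model) {
    const bool drawOverlapsNextFrame =
        model == ThreadingModel::DrawThreadPerContext ||
        model == ThreadingModel::CullThreadPerCameraDrawThreadPerContext;
    if (!drawOverlapsNextFrame) {
        // The whole draw dispatch completes before the next frame begins.
        return drawList.size();
    }
    // Otherwise the next frame waits only for the last DYNAMIC entry.
    std::size_t waitCount = 0;
    for (std::size_t i = 0; i < drawList.size(); ++i) {
        if (drawList[i] == DataVariance::Dynamic) {
            waitCount = i + 1;
        }
    }
    return waitCount;
}
```

In this model a single DYNAMIC leaf near the end of the list removes almost all of the overlap, which is why the position of dynamic objects in the draw order matters and why a fully STATIC scene lets the next frame start immediately.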

Everything else (scene graph structure, etc.) is safe to change during the Update traversal/callbacks.[71]

Threading

In single threaded mode, you can safely have a single threaded viewer and still have the DatabasePager working with multi-threading in the background.[72]

As a general note, there isn't a big difference between single-threaded and multi-threaded usage of the OSG in terms of OpenGL dispatch. With two separate windows there should be two separate graphics contexts, and it should make no difference whether you drive these from one thread or two; the state for each context should be kept local to each one.[73]

Running multiple windows multi-threaded will give you the best performance; the OSG is designed for this usage model, and it is most easily set up using the native windowing support that the OSG provides.[74]

As long as you run the viewer multi-threaded, the OSG will use a barrier so that each graphics thread waits at the end of draw dispatch; once all the threads have joined this barrier, they all move on together and call swap buffers. This is done to try to achieve synchronized swapping. However, it's not a foolproof scheme, as it doesn't use any low-level driver and hardware synchronization.

Extensions to some OpenGL drivers exist to enable the low level synchronisation, such as swap groups, swap ready and gen lock.[75]

- osgViewer::CompositeViewer is designed for applications that have multiple Views. The only thing to be careful of is that when you are adding and removing Views from the CompositeViewer, you should call stopThreading() on the viewer prior to adding or removing views, then call startThreading() afterwards. If you are running SingleThreaded or CullDrawThreadPerContext you won't need to worry about stopping and starting threads.[76]

- osgViewer::CompositeViewer runs all of the views synchronously - one frame() call dispatches update, event, cull and draw traversals for all the views. [77]

- OpenGL doesn't support multi-threading within a single graphics context, so you are constrained to doing the rendering for each context in a single thread. The threading models that the OSG provides reflect this, enabling threading of the update, event and cull traversals in parallel with the draw thread. [78]

- if any code executed by the cull or draw threads (such as your own callbacks or custom nodes) isn't thread safe, then you must use SingleThreaded. [79]

- As long as you have two GPUs, the most efficient way to drive them should be multi-threaded. There is a caveat though: hardware and drivers aren't always up to scratch, and even though they should be able to manage multiple threads and multiple GPUs seamlessly, they sometimes fail to.[80]

- Cull and draw can only run in parallel once all the dynamic geometry has been dispatched; otherwise the draw would be dispatching data that is being modified by the next frame's update and cull traversals. Perhaps you have some dynamic geometry or StateSets that are holding back the next frame. [81]

- If you are using a single graphics card, then for best performance one usually tries to use a single graphics window and have two or more cameras share this context.[82]

The ThreadPerCamera is just shorthand for CullThreadPerCameraDrawThreadPerContext, which will explain a bit more what's actually happening - it's meant to allow the draw thread to progress in parallel with the next frame.

There is a mechanism built into the backend to hold back the next frame if there are any Drawables or StateSets with their DataVariance marked as DYNAMIC; however, if your whole scene is STATIC then this will allow the next frame to advance. There isn't any default additional mechanism for holding back the next frame. There are mechanisms for doing a swap ready check for multi-context systems.[83]

As for general notes about threading: if you are working on a single graphics context, as you are, then all the draw dispatch and the GPU draw work can only be done by a single graphics thread, so there is little opportunity to make it more parallel without using another graphics card/graphics context and interleaving of frames.

Cull and draw can only run in parallel once all the dynamic geometry has been dispatched; otherwise the draw would be dispatching data that is being modified by the next frame's update and cull traversals. Perhaps you have some dynamic geometry or StateSets that are holding back the next frame.[84]

There are limits to the type of threading you can do with cameras that share the same graphics context: the draw traversal has to be single-threaded for each camera's rendering. This applies to FBOs as well as normal rendering to a graphics window.

You should still be able to run multi-threaded if you have multiple graphics contexts, but the osgdistortion example just uses one graphics context and multiple FBOs. [85]

You can have a slave Camera in the Viewer that is used as a prerendering camera, or a Camera in the scene graph that is used as a prerender camera. Cameras in the scene graph are treated almost exactly the same way as slave Cameras; it's just high level things like threading and how the view and projection matrices are assigned per frame that differ. [86]

If you want to create and chain RenderStages then using an osg::Camera in the scene graph is often one of the best ways to do this, and use the Camera::setRenderOrder(..) to control the order. You can also use a custom cull traversal callback to create RenderStage/RenderBin and assign these to the rendering backend, but this does require a greater knowledge of the internals of the rendering backend.[87]

Scene Modification

You should only update a stateset, drawable, or the scene graph structure from the update traversal, update callback, or update operation. Are you modifying anything at runtime?

Outside of the Optimizer, DataVariance has no effect in SingleThreaded mode. It also has no effect on Nodes (only Drawables and StateSets).

Modifying the scene graph outside of the frame call is safe in the SingleThreaded and CullDrawThreadPerContext threading models, as they don't leave any threads active after the end of the renderingTraversals() method (called from frame()).

With DrawThreadPerContext and CullThreadPerCameraDrawThreadPerContext the draw threads will still be active on completion of renderingTraversals(), so if you modify drawables and state that the thread is still reading from in the draw traversal you will end up with problems, and potential crashes. There is a standard mechanism to deal with this issue: the renderingTraversals() method blocks until all dynamic objects in the draw traversals have been dispatched. The way you tell the draw traversal that a drawable or stateset will be modified dynamically is to set its data variance to DYNAMIC.[88]

drawable->setDataVariance(osg::Object::DYNAMIC);

stateset->setDataVariance(osg::Object::DYNAMIC);
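
To make the blocking rule concrete, here is a minimal sketch that does not use OSG at all. The local `DataVariance` enum and the function `itemsBlockingNextFrame` are hypothetical stand-ins, not OSG types. The sketch assumes, as described above, that the next frame is held back until the last DYNAMIC object in draw order has been dispatched.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical stand-in for osg::Object::DataVariance (not the OSG type).
enum class DataVariance { Static, Dynamic };

// Given the data variance of each object in draw-dispatch order, return how
// many objects must be dispatched before the next frame's update and cull
// may begin. 0 means the next frame is not held back at all.
inline std::size_t itemsBlockingNextFrame(const std::vector<DataVariance>& drawOrder) {
    std::size_t blocking = 0;
    for (std::size_t i = 0; i < drawOrder.size(); ++i) {
        if (drawOrder[i] == DataVariance::Dynamic) {
            blocking = i + 1; // everything up to and including this object blocks
        }
    }
    return blocking;
}
```

In this model a fully STATIC scene gives 0 (full overlap), while a single DYNAMIC object at the very end of the draw order blocks for the whole draw dispatch.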

If you are modifying the stateset then you'll need to set its data variance to DYNAMIC. For a StateSet that decorates the whole scene graph you'll end up holding back the frame until the whole scene graph is completed, so it won't have any performance advantage over CullDrawThreadPerContext. You can double buffer objects to allow you to retain the STATIC data variance and keep the threads overlapping. To do this you do:

osg::Camera* cam = getViewer()->getView(i)->getCamera();

// Assign a new StateSet here rather than modifying the previous one
cam->setStateSet(new osg::StateSet);

cam->getOrCreateStateSet()->setGlobalDefaults();

// ... and some other changes ...

The draw traversal takes a reference to the StateSets and Drawables, so it's safe to go and remove them from the scene graph outside the frame() call. This isn't something that makes them dynamic, so you won't need to set their data variance to DYNAMIC.

Sharing scenes

The osgViewer has a mechanism for avoiding multiple traversals of shared scene graphs if multiple Views share the same root node of the scene graph. If the shared component isn't the topmost node then the OSG has no straightforward way to know whether a subgraph has already been traversed that frame. One could implement a mechanism to avoid visiting a node multiple times in one frame, but it would be really costly to do: an expense that would only benefit a very small number of users, but would slow performance for everyone else.

If you have a shared subgraph that you don't want traversed multiple times per frame, then use an UpdateCallback with a frameNumber member variable that keeps track of the frame number of the last traversal (taken from the FrameStamp returned by NodeVisitor::getFrameStamp()). When a traversal calls the update callback, you only traverse the subgraph if the frame number is different, and then set frameNumber to the present frame; if the frame number is the same you just return immediately. You'd place this custom UpdateCallback as high as you can in your scene graph to make sure the traversal stops as soon as possible.

Another approach is to move this frameNumber tracking into your existing update callbacks, and simply return right away if the frameNumber is the same. This requires a small tweak to the callbacks, but it is such a small change that it's generally pretty easy to integrate.[89]
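
The frame-number check described above can be sketched in isolation. This is a minimal model that does not use OSG, under stated assumptions: `OncePerFrameGuard` and `simulateViews` are hypothetical names, and in a real update callback the frame number would come from `NodeVisitor::getFrameStamp()->getFrameNumber()` rather than being passed in by hand.

```cpp
// Hypothetical model of a once-per-frame update guard (no OSG types used).
class OncePerFrameGuard {
public:
    // Returns true only the first time it is called for a given frame number.
    bool shouldTraverse(unsigned int frameNumber) {
        if (_seenAnyFrame && frameNumber == _lastFrameNumber) {
            return false; // already traversed this frame: return immediately
        }
        _seenAnyFrame = true;
        _lastFrameNumber = frameNumber;
        return true;
    }

private:
    bool _seenAnyFrame = false;
    unsigned int _lastFrameNumber = 0;
};

// Simulates numViews update traversals reaching the same shared subgraph in
// one frame, and returns how many of them actually updated the subgraph.
inline int simulateViews(OncePerFrameGuard& guard, unsigned int frameNumber, int numViews) {
    int updates = 0;
    for (int view = 0; view < numViews; ++view) {
        if (guard.shouldTraverse(frameNumber)) {
            ++updates;
        }
    }
    return updates;
}
```

However many views reach the guarded subgraph in a frame, only the first one does the work; the rest return straight away.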

- Each View has one scene graph, and can share its scene graph with other instances of View. The View can also share the same GraphicsWindow, or have its own GraphicsWindow. The View also has a master Camera and an optional list of slave Cameras, so you can scale from simple views up to complete distortion correction or multiple display output setups. Each View has its own event handlers and camera handlers. It's extremely flexible and configurable. [90]

- Sharing a scene between Views is OK within one CompositeViewer, as Views on the same scene will share the same FrameStamp, i.e. they will all be at the same point in time. Sharing one scene between multiple Viewers will hit up against the problem that in one set of traversals the scene graph is at one time and then the traversals from the other viewer will try to change the time back, which is likely to cause a mess. This timing issue isn't likely to cause problems with high level rendering though - it should just mess up things like particle systems and sequences.[91]

- When sharing a scene graph the osgViewer library automatically assigns an osgViewer::Scene for each unique scene graph, while sharing the Scene if you assign that scene graph to multiple View's. The Scene holds the DatabasePager for that scene graph so there isn't any overlap with multiple pagers trying to load stuff for the scene graph in an uncoordinated way.[92]

- One way that could break this mechanism is sharing portions of the scene graph and assigning the subgraph to each View, as the Scene won't pick up on the fact that it's the same overall scene graph. If you have this situation, then share the same complete scene graph and use a combination of NodeMask and TraversalMask for each View to make sure only the part you want visible in each View is seen.[93]

- The best thing we could do would be to create a single GraphicsWindow and then share this between all our Views. We then won't have any problems with rendering order and sharing of textures or FBOs, as it'll all be on one graphics context. See the osgcompositeviewer example for how to set up the Views/Camera & GraphicsWindow.[94]

- Sharing a single window between multiple views is demonstrated in the osgcompositeviewer example - you simply assign the same GraphicsWindow to the Cameras in each of the Views. To change views you can stop the viewer threads, add/remove the views you need, and then restart the threading; this will drop a few frames though, due to the stopping/starting of threads. The other way is to switch off the rendering of a view by setting its Camera's NodeMask to 0x0 to disable it.[95]
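
The NodeMask/TraversalMask combination mentioned in the list above comes down to a bitwise test. The sketch below is a simplified model that does not use OSG: it ignores the node mask override that the real traversal also takes into account, and the mask bit names (`COCKPIT_BIT`, `TOWER_BIT`) are hypothetical. In an OSG application the per-view side of this test would typically be set on the View's Camera (for example via its cull mask), which is an assumption here rather than something the text above states.

```cpp
#include <cstdint>

typedef std::uint32_t Mask;

// Hypothetical mask bits, one per kind of view.
const Mask COCKPIT_BIT = 0x1;
const Mask TOWER_BIT   = 0x2;
const Mask ALL_BITS    = 0xffffffff;

// Simplified traversal test: a node is visited only if the view's traversal
// mask and the node's own mask have at least one bit in common.
inline bool isTraversed(Mask traversalMask, Mask nodeMask) {
    return (traversalMask & nodeMask) != 0;
}
```

A node tagged with `COCKPIT_BIT` is skipped by a view whose traversal mask is `TOWER_BIT`, and a node mask of 0x0 is skipped by every view, which is why setting a Camera's NodeMask to 0x0 disables it.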

Context sharing

The OSG allows you to create a graphics context, make the context current, and then dispatch rendering and do swap buffers yourself, but this requires you to set everything up individually yourself.

What osgViewer does is provide all the basic functionality that 99% of users need out of the box, including handling context creation, multiple contexts, handling of database threading, viewer threading, event handling etc. It makes what would be a complicated task trivial, but with encapsulating all this functionality it has to make some assumptions about the way it's used.[96]

Sharing OpenGL contexts doesn't mean actually sharing the context; it's just sharing some data between contexts. You don't have a "common OpenGL context", you have two separate OpenGL contexts that are sharing display lists/texture objects etc.

Each GraphicsWindow "is a" GraphicsContext which maps directly to a single OpenGL graphics context. Each OpenGL graphics context has its own state machine which is mapped by a single osg::State object - which you'll find on the GraphicsContext.

Sharing of display lists/texture objects between contexts in the OSG just requires you to set the State::ContextID to the same value. If the GraphicsWindow implementation is set up correctly then it'll automatically assign the same ContextID for each of the separate osg::State objects.[97]

The scene graph is shared between the two slave Cameras. If the slave Camera have different graphics contexts then you can have separate OpenGL objects per context, but the OSG will automatically manage this all for you, it also offers the opportunity for threading the graphics contexts.[98]

- It's possible to share contexts in the OSG [...] As for the general desirability of sharing GL objects between contexts: yes, it can reduce memory usage, but it forces you to use the OSG single-threaded, otherwise two contexts will be contending for the same resources, which deliberately aren't mutex locked for performance reasons. There is also only a limited set of cases where drivers/hardware will actually share OpenGL contexts. [99]

- Neither the OSG nor OpenGL can provide thread-safe sharing of GL objects when sharing contexts. If you want to run multiple contexts with multiple threads you will have to keep these contexts independent. [100]

- If all your views share the same graphics context then it's only possible to single thread the draw dispatch. With this usage you'll be able to use DrawThreadPerContext, which will allow the update and cull traversals to overlap the previous frame's draw traversal, but the overlap will only start once the last dynamic object in the draw traversal has been dispatched. If you have a large static scene then the overlap can be the whole frame; if you have lots of StateSets and Geometry with a DataVariance of DYNAMIC then the scope for threading is reduced, and in the worst case the frame will essentially be serialized and equivalent to SingleThreaded. Things that affect the draw traversals, such as adding views to a CompositeViewer or changing the graphics context on a camera, sometimes need draw threads to be stopped completely. It's pretty rare that you need to do this. It's also pretty costly, because stopThreading() will only return once the draw threads have been stopped and deleted, and startThreading() only returns once new draw threads have been created and started.[101]

- an OpenGL context is tied to a single window or pixel buffer. [102]

- Sharing contexts is also something that forces a few limits on how you use the graphics contexts, such as it only really being safe to use them single-threaded. [103]

- If you are creating new graphics contexts and applying an old scene graph to them then you can't use the Texture::setUnRefImageDataAfterApply(true) feature of osg::Texture, as this will discard the imagery once it has been applied to all the graphics contexts that it knows about. [104] The typical problem is that the scene graph has been set up to unref texture images after apply, so when it comes to reloading the texture images there aren't any to download. [105]

Scene View Buffering

Both DrawThreadPerContext and CullThreadPerCameraDrawThreadPerContext modes use osgViewer::Renderer thread with double buffered SceneViews.

SingleThreaded and CullDrawThreadPerContext use a single SceneView for rendering. (CullDrawThreadPerContext also uses Renderer but only with one SceneView; see the osgViewer::Renderer::cull_draw method in comparison to osgViewer::Renderer::draw & osgViewer::Renderer::cull.)

Double buffered SceneViews means that there are two interleaved SceneViews performing cull and draw operations for subsequent odd/even frames. These two SceneViews share some resources but may also create some separate resources. For example, if a texture is attached to an RTT camera, the two SceneViews will create two separate FBOs for this camera, but these FBOs will share the camera texture. But when you attach an image to an RTT camera, each of these FBOs will create a separate render buffer and will read pixels to the camera image from that buffer.[106]

Swap Buffers

OpenGL drivers have a FIFO: your app fills the FIFO with tokens and data, and at the end of the frame you send in a swap buffers token, which goes into the FIFO with everything else. Normally the swap buffers call itself doesn't block (although some implementations do this), but the FIFO can only be cleared at the rate of one swap buffers call per frame, so it will fill up, and once full it will effectively block until the previous frame has begun dispatching. The driver may allow several frames' worth of data in the FIFO before blocking; this is driver dependent, and also depends on just how much data you have to pass to OpenGL. If you have massive models, the CPU will block on the FIFO within the same frame, rather than more than one frame being backed up in the FIFO.

The end result of this is simpler though - put vsync on, and your frame loop will block and should idle while it's waiting for the FIFO to begin accepting new data. [107]

The driver will be queuing up multiple frames in the FIFO, something it does to help improve the framerate, but increases frame latency.

We aren't powerless in this though: modern drivers and hardware support putting fences into the pipeline and waiting on these to be completed on the GPU. In the svn/trunk version of the OSG you'll find a SyncSwapBuffersCallback swap buffers implementation that does this for you. You can enable this via the env var OSG_SYNC_SWAP_BUFFERS=ON, or --sync on the command line for examples like osgviewer.[108]

There is an OpenGL extension that supports synchronizing swap buffers across multiple graphics contexts by allowing you to assign contexts to swap groups.[109]

Some graphics drivers will do swap buffers in sequence if you have multiple windows being rendered to, with each swap doing a vsync, which ends up with each window blocking until the end of each screen refresh. Use of the swap groups extension would be one way around this issue.[110]

GraphicsContext::swapBuffers() is normally what does the swap buffers and then calls GraphicsContext::clear(). With GraphicsWindowEmbedded::swapBuffers() it's a no-op, because there is no way it can do a swap buffers as it doesn't actually know about a real graphics context.[111]

Image Sharing

Sharing of images is possible by using a frame buffer copy to osg::Image, or just having multiple FBO's all within one graphics context. [112]

If you want to use the result in a separate window/display you'll need to copy the result back to an osg::Image and then use this image on another texture in the other windows. osg::Camera supports attaching an osg::Image to it and will automatically copy the frame buffer (or FBO) to the image, and the osg::Texture* classes are all able to detect an update to an osg::Image and so will automatically download the result. It's therefore possible to do this wiring up relatively easily, but performance won't be great as it requires a round trip to the CPU/main memory.[113]

The best way to deal with the high cost of these operations is to avoid them completely. Try to use algorithms that can use render to texture using FBOs and read these textures directly in other shaders. Never try to copy the results back to the CPU/main memory. This does force you to do more work on the GPU and rely on more complex shaders, but in the end it means that you don't have to force a round trip back to the CPU. It's the flushing of the FIFO that is the problem; that's why it's so slow, not the data transfer itself. Once you flush the FIFO you lose the parallelism between the CPU and GPU.

The only way to hide this is to use PBOs to do the read back and do the actual read back on the next frame rather than in the current frame. In your case you might be able to get away with this; a frame's latency might not be a big issue if you can keep to a solid 60Hz and the values you are reading back aren't changing drastically between frames. osgscreencapture uses a frame of latency when it double buffers the PBOs. It doesn't matter whether it's a frame buffer or FBO; the PBO is only related to memory management.[114]
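
The one-frame-latency read back can be modelled without any OpenGL. In this minimal sketch the class `DelayedReadback` and the struct `ReadbackResult` are hypothetical, the two slots stand in for a pair of PBOs, and a plain `int` stands in for the pixel data. It only illustrates the double-buffering order (start a read into one slot, collect the other slot filled on the previous frame), not how osgscreencapture is actually implemented.

```cpp
// Hypothetical result type: 'valid' is false until a previous frame exists.
struct ReadbackResult {
    bool valid;
    int value;
};

// Two-slot model of double-buffered read back with one frame of latency.
class DelayedReadback {
public:
    DelayedReadback() : _writeIndex(0) {
        _slots[0].valid = false; _slots[0].value = 0;
        _slots[1].valid = false; _slots[1].value = 0;
    }

    // Call once per frame with the value rendered this frame.
    // Returns the value that was submitted on the previous frame, if any.
    ReadbackResult frame(int renderedValue) {
        const int readIndex = 1 - _writeIndex;
        ReadbackResult ready = _slots[readIndex]; // filled one frame ago
        _slots[_writeIndex].valid = true;         // start this frame's "transfer"
        _slots[_writeIndex].value = renderedValue;
        _writeIndex = readIndex;                  // swap roles for the next frame
        return ready;
    }

private:
    ReadbackResult _slots[2];
    int _writeIndex;
};
```

The first call returns nothing usable; every later call returns the previous frame's value, so the CPU never waits on the frame that is currently being drawn.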

- Sharing of images is possible by using a frame buffer copy to osg::Image, or just having multiple FBOs all within one graphics context. If you can have the frames all done synchronously then perhaps you could have one frame loop and just disable the cameras that you don't need updating on each frame via camera->setNodeMask(0x0); i.e. the main viewer runs at 60 Hz and the other RTT cameras run at 20 Hz, so they get updated on one frame in three.[115]

- ImageStream is updated by a background thread. The xine-lib and QuickTime plugins both subclass from ImageStream and OpenThreads::Thread to provide a class that automatically runs updates on itself - this thread updates the image data on the ImageStream and then calls dirty to tell the rendering thread that it needs to download the data to any associated texture.[116]

- It's better to update the data stored in the Image directly and call dirty, or to allocate the image memory separately and disable the deletion of the data on the image so the osg::Image(Stream) never calls delete on the data.[117]
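
The frame-skipping idea from the first item in the list above (RTT cameras updated on one frame in three) can be sketched as a small helper that picks the node mask for a camera each frame. This is a model that does not use OSG; `cameraMaskForFrame`, `CAMERA_ENABLED` and `CAMERA_DISABLED` are hypothetical names. In an application the returned value would be passed to `camera->setNodeMask(...)` from the update phase, before the rendering traversals.

```cpp
#include <cstdint>

typedef std::uint32_t CameraMask;

const CameraMask CAMERA_ENABLED  = 0xffffffff;
const CameraMask CAMERA_DISABLED = 0x0;

// Returns the node mask a secondary camera should use on this frame so that
// it renders only once every 'interval' frames. An interval of 0 is treated
// as "never render".
inline CameraMask cameraMaskForFrame(unsigned int frameNumber, unsigned int interval) {
    if (interval == 0) {
        return CAMERA_DISABLED;
    }
    return (frameNumber % interval == 0) ? CAMERA_ENABLED : CAMERA_DISABLED;
}
```

With a 60 Hz main loop and an interval of 3, the secondary camera is enabled on frames 0, 3, 6 and so on, which gives the 20 Hz update rate from the example.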

Offscreen rendering

The two options for off-screen rendering are a PixelBuffer context or a FrameBufferObject. If you have an existing on-screen window in your application then using a FrameBufferObject becomes preferable. The way to do it would be to set up your viewer's off-screen Camera with FBO settings and a custom final draw callback to do the read to main memory. The read will be more efficient if you use a pair of PixelBufferObjects - the osgscreencapture example illustrates this in action.

If all you ever want is to render offscreen, then go with the other suggestion of creating a PBuffer (pixel buffer) context instead of a normal graphics context. The default contexts will spawn a window, the PBuffer will not.

If you are already using CompositeViewer the most natural thing to do would be to have a dedicated View with its master Camera as the offscreen camera. This way you can control the Camera's view matrix in a straightforward manner, in the same way as for the rest of the Cameras. Also, during debugging, having the option of making this Camera an onscreen one would give you a means to visually QA things as you go along. [118]

FBOs aren't a direct replacement for PixelBuffer objects, so you can't just mimic an osg::PixelBufferX11 by using an FBO.

FBOs are GL objects, while PixelBuffers are graphics contexts. To create an FBO you need a graphics context. A PixelBuffer is a graphics context that has its own frame buffer that isn't assigned to the display, unlike a GraphicsWindow graphics context.

In the past OpenGL didn't have the ability to render to anything other than a graphics context's frame buffer, so PixelBuffers were the standard way of doing multi-pass rendering. With the advent of FrameBufferObject, OpenGL now has the ability to render to a frame buffer other than the one assigned to the graphics context for the purpose of display on the screen. As the FBO is an OpenGL object within a graphics context, it's more efficient than using two graphics contexts to do render to texture, so it is very much the preferred way of doing multi-pass techniques like Render to Texture used in shadowing, lighting etc.

These days the need for PixelBuffers is much diminished as FBOs work really well for most applications. However, there are times when PixelBuffers are still useful: if you want to render completely offscreen without any on-screen window then a PixelBuffer is still the appropriate tool for the job.[119]

Multiple Viewers

One possible solution would be to have two separate viewers, each running their own frame() when required - you can't mix scene graphs or graphics contexts in this case though.[120]

If you don't want the main rendering loop to wait for the rendering of all these extra views then you'll need to use a separate viewer (or CompositeViewer) with its own threading. You'll need to manage your own frame loops in the secondary viewer.

The only reason to copy data is if the data is being modified by the different threads. [121]

This should probably be possible given separate threads for each of the viewers' frame loops, i.e. run them all in background threads.[122]

You could easily just create an entirely separate viewer for doing the screenshots. You can have this run in the background with no need to affect the main viewer's threading/graphic contexts.[123]

What you will be able to do is use two separate Viewers. You are likely to want to run two threads, one for each of the viewers' frame loops, as well. To get the render to image result to the second viewer, all you need to do is assign an osg::Image to the first viewer's Camera for it to copy to, and then attach the same osg::Image to a texture in the scene of the second viewer. The OSG should automatically do the glReadPixels to the image data and dirty the Image, and then the texture will automatically update in the second viewer. You could potentially optimize things by using a PBO, but the off-the-shelf osg::PixelBufferObject isn't suitable for reading in this way, so you'll need to roll your own support for this.[124]

For viewers to share the same node group, you need to take care of explicitly syncing the FrameStamp between each traversal, as otherwise the state of the scene graph can get thrashed between different times.[125]

Related

Docs

- http://www.openscenegraph.org/index.php/documentation/guides/programming-guides/93-viewer-vs-compositeviewer

- http://www.openscenegraph.org/documentation/OpenSceneGraphReferenceDocs/a00108.html

- osg::FrameStamp and osg::Timer in OSG

Discussions

- http://www.mail-archive.com/flightgear-devel@lists.sourceforge.net/msg17001.html

- http://www.mail-archive.com/flightgear-devel@lists.sourceforge.net/msg17263.html

- http://www.mail-archive.com/flightgear-devel@lists.sourceforge.net/msg27134.html

- http://www.mail-archive.com/flightgear-devel@lists.sourceforge.net/msg28869.html

- http://forum.flightgear.org/viewtopic.php?f=17&t=18813

- http://forum.flightgear.org/viewtopic.php?f=4&t=18785&p=199916#p199916

Search

- https://www.mail-archive.com/search?q=compositeviewer&l=osg-users%40lists.openscenegraph.org

- https://www.mail-archive.com/search?q=pagedlod&l=osg-users%40lists.openscenegraph.org

- http://www.mail-archive.com/search?q=compositeviewer&l=flightgear-devel%40lists.sourceforge.net

- http://www.mail-archive.com/search?l=flightgear-devel%40lists.sourceforge.net&q=PagedLOD+

- https://sourceforge.net/p/flightgear/mailman/search/?q=%22CompositeViewer%22

- https://sourceforge.net/p/flightgear/mailman/search/?q=%22PagedLOD%22

- https://forum.flightgear.org/search.php?st=0&sk=t&sd=d&sr=posts&keywords=CompositeViewer