Cppunit effort

| Started in | 02/2017 |

|---|---|

| Description | foo |

| Contributor(s) | Bugman |

| Status | Under active development as of 04/2018 |

| Folders | foo |

| Changelog | foo |


Status

Edward recently found some time to work on his CppUnit test suite branches for flightgear/simgear, rebasing them onto the latest code. [1]

For related/recent devel list postings, see: https://sourceforge.net/p/flightgear/mailman/search/?q=cppunit

Objective

The aim is to make it very easy for people to write unit or system/functional tests for fgfs (rather than simgear), which should make it much easier for new developers to dive into the flightgear sources. A long term goal might be to have good test coverage of the whole binary so that fgfs is harder to break and is more stable.[2]

The long-term assumption here is that one day we will have significant test coverage of both fgfs and fgdata, and that running a full set of tests would take a significant amount of time (say 30 min to 1 hour). The idea was that this could be run on a Jenkins VM, or a similar system, triggered in the same way Gene's Jenkins setup is currently run, i.e. "Started by an SCM change". If run on Jenkins, then it could either be run together with the flightgear build or as a separate project (with "flightgear" set as an upstream project). This would help identify who accidentally broke what.[3]

Motivation


Currently there are basically no tests of the fgfs binary or fgdata.[4]

The original testing approach was designed to make running individual tests quite lightweight and easy during development, while allowing the full test suite to be run during a Jenkins build. James would like to see both of those remain in any change to the testing setup. There are many ways to achieve that end result, of course, but some core developers rely very often on the ‘make changes, hit build-and-run, see tests fail or pass, repeat’ workflow, so it’s a priority for them that this workflow remains.[5]

Why CppUnit

The benefit of using an established and well-tested test suite framework, rather than writing and maintaining our own framework, is that you have a clear separation of the framework maintainer and the test writers. The complexity is solely at the feet of the framework maintainer. However, once CppUnit is included in the 3rdparty/ directory and the framework is established, the job of the framework maintainer is done. For my project relax, the complex code for the framework has essentially been untouched since 2006. Well, apart from some superficial refactoring and cleaning, and addition of the GUI test category in 2011.

For the test writer, the advantage is the simplicity of test writing. They do not need to worry about the complexity of the framework implementation. They only need to write a setUp() function, the test*() code, and a tearDown() function to clean up (setUp and tearDown are optional). No knowledge of CMake is required. And they don't have to chase down all the sources required to build the binary. The protected execution of the setUp() and test*() functions is also a major advantage.

I think this simplicity for test writers would make it easier for more potential developers to learn about the fgfs internals. They could start contributing to fgfs by writing unit or system/functional tests to learn about the fgfs internal design. If we had good test coverage, then you could say that all new developments need to come with one or more tests. That is how I do things. [6]
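To illustrate what "protected execution" of setUp(), test*() and tearDown() means for a test writer, here is a minimal sketch that uses only the C++ standard library. It is not the CppUnit API and not code from Edward's branches: Fixture, AssertionFailure, Outcome, check(), runProtected() and CounterTests are all hypothetical names made up for this example.

```cpp
#include <cassert>
#include <stdexcept>
#include <string>

// Hypothetical names throughout; this is NOT the CppUnit API.
struct AssertionFailure : std::runtime_error {
    using std::runtime_error::runtime_error;
};

enum class Outcome { Pass, Fail, Error };

// What a test writer derives from: setUp() and tearDown() are optional.
struct Fixture {
    virtual ~Fixture() = default;
    virtual void setUp() {}
    virtual void tearDown() {}
};

// Helper a test body calls to signal a failed check.
inline void check(bool condition, const std::string& message) {
    if (!condition) throw AssertionFailure(message);
}

// Protected execution: an exception in setUp() or the test body is turned
// into a result instead of aborting the run, and tearDown() is still called.
template <typename F>
Outcome runProtected(F& fixture, void (F::*test)()) {
    Outcome outcome = Outcome::Pass;
    try {
        fixture.setUp();
        (fixture.*test)();
    } catch (const AssertionFailure&) {
        outcome = Outcome::Fail;   // a check did not hold
    } catch (...) {
        outcome = Outcome::Error;  // anything unexpected
    }
    try {
        fixture.tearDown();
    } catch (...) {
        outcome = Outcome::Error;
    }
    return outcome;
}

// Example fixture; the int stands in for a freshly created subsystem.
struct CounterTests : Fixture {
    int* counter = nullptr;
    void setUp() override { counter = new int(0); }
    void tearDown() override { delete counter; counter = nullptr; }

    void testIncrement() { ++*counter; check(*counter == 1, "counter should be 1"); }
    void testBroken()    { check(*counter == 5, "deliberate failure"); }
    void testThrows()    { throw std::logic_error("unexpected exception"); }
};
```

With a CounterTests instance f, runProtected(f, &CounterTests::testIncrement) gives Outcome::Pass, testBroken gives Outcome::Fail, and testThrows gives Outcome::Error. In each case tearDown() has run, so f.counter is back to nullptr.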

Background

Edward developed this as part of his FGPythonSys experiments to have full system test coverage. The test suite is based on the venerable, if old, CppUnit framework. Edward has spun this out into its own standalone branches rebased to 2016.4.0 and made independent of embedded Python.

The test suite is an almost direct Python->C++ translation of my own comprehensive test suite for one of my own software projects [4]. The benefits of such a framework, assuming good test coverage, include:

- Enabling quick and brutal refactorisation of the entire code base - ensuring that the end result works perfectly well.

- Interest in independence and modularisation of all FlightGear components to simplify tests.

- If all subsystems are tested for standalone creation, destruction, init(), reinit(), shutdown(), etc., then repetitive resetting of FlightGear should work flawlessly.

- Allow valgrind and other heavy tools to be run on the absolute minimal code paths, by running individual tests.

The functionality of the test suite can be very much expanded in the future. For example there are CppUnit classes for XML output, rather than text, so this test suite is ideal for automated testing and presenting the results through web pages (e.g. Jenkins).

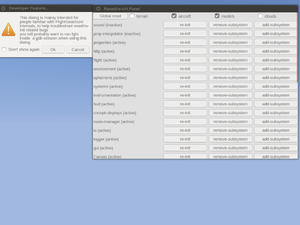

As a demonstration there are now 4 initial categories of tests:

- System/functional tests (currently 0 tests).

- Unit tests (currently 2 tests).

- GUI tests (currently 0 tests).

- Simgear unit tests (currently 2 tests).

There are an additional 242 unit tests in the FGPythonSys branches. One of the unit tests and one of the simgear unit tests are the old tests/test-mktime.cxx and tests/test-up.cxx programs respectively (the rest of the tests/ directory might be better suited for FGMeta). The test suite features are:

- Run 'make test_suite' to build the test suite as the $BUILD_DIR/test_suite/run_test_suite standalone binary (with full independence from fgfs) and to run the test suite.

- The absence of CppUnit causes 'CppUnit not found' to be printed by CMake, then nothing happens.

- No CMake installation target, so it remains in the build directory.

- A setUp() function called before every test that can be customised for each grouping or suite of tests (for FGPythonSys, set up a new subsystem instance, and inject an empty property tree).

- A tearDown() function per suite, unconditionally called after every test (for FGPythonSys, delete the subsystem).

- Minimal output of '.', 'F', and 'E' per test for a pass, fail, or error state [6].

- Comprehensive simgear logstream and STDOUT+STDERR capture and reporting only for failed tests [7] (printf() function calls cannot be captured).

- A final synopsis of all tests.

For full details, see the branches. The framework is powerful enough to bring in all types of tests under one roof, including all those with main() functions and currently scattered all over the place.
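The "capture and report only for failed tests" feature listed above can be sketched as follows. This is an assumption about one common way to do it in C++, namely swapping the stream buffers of std::cout and std::cerr. It is not taken from the branches, and StreamCapture and runCaptured() are hypothetical names. If the real test suite works along similar lines, that would also explain why printf() output is not captured: printf() writes to the C stdio stream and never passes through the C++ stream buffers.

```cpp
#include <cassert>
#include <functional>
#include <iostream>
#include <sstream>
#include <string>

// Hypothetical helper, not part of CppUnit or SimGear: redirects std::cout
// and std::cerr into string buffers for the lifetime of the object.
class StreamCapture {
public:
    StreamCapture()
        : oldOut_(std::cout.rdbuf(out_.rdbuf())),
          oldErr_(std::cerr.rdbuf(err_.rdbuf())) {}
    ~StreamCapture() {
        // Always restore the original buffers.
        std::cout.rdbuf(oldOut_);
        std::cerr.rdbuf(oldErr_);
    }
    StreamCapture(const StreamCapture&) = delete;
    StreamCapture& operator=(const StreamCapture&) = delete;

    std::string out() const { return out_.str(); }
    std::string err() const { return err_.str(); }

private:
    std::ostringstream out_;
    std::ostringstream err_;
    std::streambuf* oldOut_;
    std::streambuf* oldErr_;
};

// Runs one test with capture. Returns the text to report: empty for a pass,
// the captured output for a failure (a thrown exception counts as a failure).
std::string runCaptured(const std::string& name, const std::function<bool()>& test) {
    bool passed = false;
    std::string out, err;
    {
        StreamCapture capture;
        try {
            passed = test();
        } catch (...) {
            passed = false;
        }
        out = capture.out();
        err = capture.err();
    }
    if (passed) return "";
    return "FAILED " + name + "\nSTDOUT:\n" + out + "STDERR:\n" + err;
}
```

A passing test that prints to std::cout produces an empty report, so the console only shows the single status character. A failing test gets its captured STDOUT and STDERR text back in the report.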

Challenges

The hardest one would be to set up and run only a small subset of subsystems and other infrastructure. But it is possible to write unit tests now. Writing a system/functional test is much more difficult but, with a bit of work, it should be possible.[8]

Roadmap

The idea is to make it possible to set up a single subsystem and all its dependencies in a few lines of test code. Then it should be easy to write a test. This is where the dependency arrays, the subsystem manager/factory fusion, and the dynamic dependency resolution algorithm discussed in another thread come in. Edward is currently working on some ideas, as well as on subsystem robustness testing for the test suite. This will make it much easier to work with isolated subsystems or sets of subsystems, as many subsystems are quite brittle: if you run them in a way that differs from how they are run in fgfs, you see segfaults all over the place (see for example Howto:Reset/re-init Troubleshooting).[9]

Structure

We could encourage beginners to add code where they wish in the test suite directories. Keeping it all in a separate directory keeps the codebase much cleaner. In addition, the test suite directories end up having a lot of test data added. Probably, such a chaotic collection of cross-subsystem tests, different test categories, and piles of test data should be isolated from the flightgear src/ directory. This could encourage potential developers to learn about fgfs internals without having to worry about any conventions or stepping on anyone's toes.[10]

Note that for system/functional tests that look at the interaction between code in different directories in src/, my cppunit design is that the test code is located in the new test_suite/{gui,system,unit}_tests/*/ directories. There, standardisation of the naming convention is not so important. Because the framework covers a lot more than just basic unit tests, in my branches I also placed the unit tests within this directory structure. I feel that this would encourage non-core developers to contribute random code here, as a learning tool, as long as their tests pass on all OSes. The code in these directories does not need any real organisation.[11]

Another point is that one new test category currently being considered is a FGData Nasal script category, where the FGNasalSys subsystem is repeatedly initialised and the FGData code is then extensively tested. These tests should probably not be in $FG_ROOT/Nasal/. [12]

Getting involved

Note: this section contains out-of-date information.

We would recommend copying existing tests in SimGear or more recently, FlightGear - we have a few.

You don’t need to learn much CMake, just copy the test helper macro James wrote.

The framework we have is very simple, just some macros in SimGear. If you want to integrate CppUnit, we have a proposal to do that from another contribution; I don’t mind, but we would need to add it to the Windows dependency list. So I’d suggest starting with CrappySimgearUnit and if you decide you would like CppUnit, shout and I can add it.

Test code can either go next to the sources it’s testing, or in the case of flightgear, in a subdir of tests/ (again, see the code for examples).

For Yasim, an FDM/Yasim/tests dir might make sense. [13]

The SimGear test macros are in [14]

https://sourceforge.net/p/flightgear/simgear/ci/next/tree/simgear/misc/test_macros.hxx

For usage example, just look at one of the many *_test.cxx modules in SimGear (except those still using boost test macros), for instance:

- https://sourceforge.net/p/flightgear/simgear/ci/next/tree/simgear/misc/strutils_test.cxx

- https://sourceforge.net/p/flightgear/simgear/ci/next/tree/simgear/misc/argparse_test.cxx

- https://sourceforge.net/p/flightgear/simgear/ci/next/tree/simgear/io/iostreams/zlibstream_test.cxx

For the FG testing framework: it has only very recently been integrated (and I believe it is currently disabled on Jenkins because the tests failed there for a still-unknown reason). I haven't used it myself, but Edward wrote a description of his work here:

https://sourceforge.net/p/flightgear/mailman/message/35661026/

New SubSystem Design

For the new testing framework Edward would like to discuss ideas for refining the design of the subsystems, the subsystem manager, and the subsystem factory.

These ideas were touched on in other threads on the devel list. Currently, the subsystem dependency order is hardcoded into the fgCreateSubsystems() function of fg_init.cxx.

But for the test suite we need far more flexibility. What Edward would really like is two new access points. These would require converting the basic subsystem manager to be far more "intelligent", probably requiring the merging of the subsystem factory ideas into the manager itself, as well as adding a dynamic dependency resolution algorithm to the manager.

- The first access point would be to initialise a subsystem manager instance at the start of a test, then ask the manager for subsystem X to be started along with all its dependencies (essential ones, that is, and maybe with a flag for optional dependencies as well). For this, each subsystem could return a vector of dependency names. The manager would then instantiate each of the subsystems (if not already done) and then ask them for their dependencies. The dependency chain could then be built up and stored, and the dependency resolution code could order the subsystems. This order could then be used for ordering all of the subsystem API calls (init, shutdown, and reinit being of interest).

- The second access point would be to initialise a subsystem manager and then ask it for subsystems X, Y, and Z without dependencies.
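The dependency resolution step of the first access point could look something like the sketch below. It is only an illustration under stated assumptions: it uses a plain depth-first topological sort over a name-to-dependencies map, which is one standard way to order such a graph and not necessarily what would end up in the subsystem manager. DependencyMap, visitSubsystem() and resolveInitOrder() are hypothetical names, and X, Y and Z are the placeholder subsystems from the text.

```cpp
#include <cassert>
#include <map>
#include <set>
#include <stdexcept>
#include <string>
#include <vector>

// Hypothetical: maps a subsystem name to the names it requires.
using DependencyMap = std::map<std::string, std::vector<std::string>>;

// Depth-first visit: all dependencies are appended to 'order' before the
// subsystem itself, so 'order' ends up as a valid initialisation order.
void visitSubsystem(const std::string& name, const DependencyMap& deps,
                    std::set<std::string>& done,
                    std::set<std::string>& inProgress,
                    std::vector<std::string>& order) {
    if (done.count(name)) return;
    if (!inProgress.insert(name).second)
        throw std::runtime_error("dependency cycle at " + name);
    auto it = deps.find(name);
    if (it != deps.end())
        for (const auto& dep : it->second)
            visitSubsystem(dep, deps, done, inProgress, order);
    inProgress.erase(name);
    done.insert(name);
    order.push_back(name);
}

// Returns the requested subsystem and everything it depends on, ordered so
// that each subsystem comes after its dependencies.
std::vector<std::string> resolveInitOrder(const std::string& requested,
                                          const DependencyMap& deps) {
    std::set<std::string> done, inProgress;
    std::vector<std::string> order;
    visitSubsystem(requested, deps, done, inProgress, order);
    return order;
}
```

For example, if X requires Y and Z, and Z requires Y, asking for X gives the order Y, Z, X. Iterating that order forwards would suit init(), and iterating it backwards would suit shutdown(). A circular dependency is reported as an exception rather than recursing forever.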

On top of this, the subsystem manager add() function would be used to pre-insert instantiated subsystems or dummy subsystems set up as desired for the test. These ideas could easily be implemented side-by-side with the current code, gradually merging in the subsystem factory code. Would such ideas fit into the current architecture at all? [15]

One thing to be aware of: we do rely on the subsystem groups to control the order of processing with the update() call, and this is important for reducing latency in some situations (e.g. FDM is processed before Nasal). There's probably no problem here, but in the past when the (hard-coded) subsystem creation code got changed, we did get some odd bugs due to a subsystem running before another, when previously it had run after. Hopefully, all the cases of that were fixed at the time, but there are probably a few more lurking. [16]

However, if a new type of "update dependency" was also added, then it wouldn't be a problem. The subsystem manager could then build the dependency tree by asking for all required, optional, and update dependencies.[17]

For now, the idea is writing an initial example on a testing branch just as a first experiment. But we could do this on next. We should develop this in combination with basic tests to avoid problems in unrelated tests. For the subsystem supplied dependencies, the following SGSubsystem base class public virtual functions are under consideration:

- dependenciesRequired()

- dependenciesOptional()

- dependenciesUpdate()

- dependenciesNonSubsystem()

The last would be useful in the test suite with subsystem manager flags tracking the initialisation status of non-subsystem components, e.g. FGRenderer and SGSky. Parsing this for all subsystems might make an interesting subsystem conversion todo list ;) The functions could return empty std::vector<std::string> objects for leaf nodes and non-implemented subsystems. For the rest, we will need to experiment a little. A nice initial isolated subsystem set would be useful to find.[18]
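As a rough sketch of how the four functions under consideration might look, here is a stand-in base class with empty defaults, so that leaf nodes and not-yet-converted subsystems need no changes. Only the four function names and the std::vector<std::string> return type come from the text above. SubsystemSketch (standing in for SGSubsystem), the const qualifiers, ExampleSubsystem, its dependency names and collectSubsystemDependencies() are assumptions made for illustration.

```cpp
#include <algorithm>
#include <cassert>
#include <string>
#include <vector>

using NameList = std::vector<std::string>;

// Stand-in for SGSubsystem; only the proposed dependency queries are shown.
class SubsystemSketch {
public:
    virtual ~SubsystemSketch() = default;
    // Defaults return empty lists, which covers leaf nodes and subsystems
    // that have not been converted yet.
    virtual NameList dependenciesRequired() const { return {}; }
    virtual NameList dependenciesOptional() const { return {}; }
    virtual NameList dependenciesUpdate() const { return {}; }
    virtual NameList dependenciesNonSubsystem() const { return {}; }
};

// A leaf subsystem needs no overrides at all.
class LeafSubsystem : public SubsystemSketch {};

// Hypothetical subsystem; the dependency names are placeholders.
class ExampleSubsystem : public SubsystemSketch {
public:
    NameList dependenciesRequired() const override { return {"subsystem-a", "subsystem-b"}; }
    NameList dependenciesUpdate() const override { return {"subsystem-a"}; }
    NameList dependenciesNonSubsystem() const override { return {"FGRenderer"}; }
};

// What a manager might do: gather the subsystem dependencies once each,
// with a flag deciding whether optional ones are included.
NameList collectSubsystemDependencies(const SubsystemSketch& s, bool includeOptional) {
    NameList all = s.dependenciesRequired();
    auto append = [&all](const NameList& names) {
        for (const auto& n : names)
            if (std::find(all.begin(), all.end(), n) == all.end())
                all.push_back(n);
    };
    if (includeOptional) append(s.dependenciesOptional());
    append(s.dependenciesUpdate());
    return all;
}
```

Non-subsystem dependencies are kept out of the collected list on purpose, since the text suggests tracking them with separate manager flags.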