Canvas development

Revision as of 21:09, 30 May 2014

| This article is a stub. You can help the wiki by expanding it. |

| The FlightGear forum has a subforum related to: Canvas |

| Note This article is primarily of interest to people who are familiar with Building FlightGear from source and want to extend the Canvas system in SimGear. Readers are assumed to be familiar with C++ and OSG, the Property Tree, and basic FlightGear APIs such as SGPropertyNode, Property Objects, SGSubsystem and SGPropertyChangeListener. The Canvas code itself makes extensive use of the STL and Boost. The latest Canvas/Doxygen docs can be found here.

There are two main ways to extend FlightGear's Canvas system:

Whenever all existing elements (group, map, text, image, path) should benefit from an addition, such as adding effects/shader support, it makes sense to extend the underlying base class itself, i.e. Canvas::Element. In addition, the map element (a subclass of group) can be extended to support additional map projections. People who just want to add a new layer to an existing dialog or instrument will probably want to refer to Canvas MapStructure instead. |

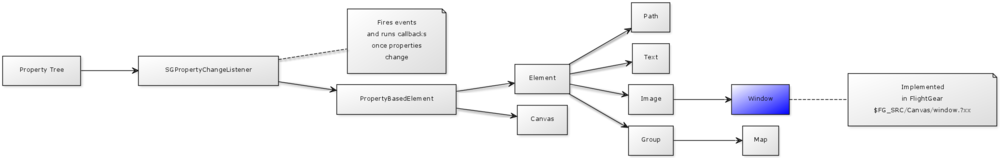

The canvas system is a property-driven FlightGear subsystem that allows creating, rendering and updating dynamic OpenGL textures at runtime by setting properties in the FlightGear Property Tree.

The property tree is the sole interfacing mechanism used by the Canvas system. A so-called listener-based subsystem (via SGPropertyChangeListener) watches the canvas subtree in the main property tree for "events" (i.e. properties being set or modified) and then updates each associated texture accordingly, e.g. by adding a requested vector or raster image, drawing a map, placing symbols or placing text labels with custom fonts.
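The listener mechanism described above can be illustrated with a small, self-contained sketch. It does not use SimGear: `ToyPropertyTree` and `ToyCanvas` are hypothetical stand-ins for the property tree and a canvas texture, and the real interface is SGPropertyNode together with SGPropertyChangeListener. The sketch only shows the idea that writing a property is the single way to reach the canvas, and that the canvas reacts by recording what has to be redrawn.

```cpp
#include <cstddef>
#include <functional>
#include <map>
#include <string>
#include <utility>
#include <vector>

// Hypothetical stand-in for the property tree: path -> value plus listeners.
// The real interface is SGPropertyNode / SGPropertyChangeListener.
class ToyPropertyTree
{
public:
  using Listener = std::function<void(const std::string& path,
                                      const std::string& value)>;

  // Register a callback for every write below the given path prefix.
  void addListener(const std::string& prefix, Listener cb)
  {
    _listeners.push_back({prefix, std::move(cb)});
  }

  // Store the value, then notify every listener whose prefix matches.
  void set(const std::string& path, const std::string& value)
  {
    _values[path] = value;
    for (const auto& entry : _listeners)
      if (path.compare(0, entry.prefix.size(), entry.prefix) == 0)
        entry.callback(path, value);
  }

private:
  struct Entry { std::string prefix; Listener callback; };
  std::map<std::string, std::string> _values;
  std::vector<Entry> _listeners;
};

// Hypothetical canvas: records which properties changed so that the next
// update pass knows what to redraw. It captures `this`, so it must not be
// copied or moved after construction.
class ToyCanvas
{
public:
  explicit ToyCanvas(ToyPropertyTree& tree)
  {
    tree.addListener("/canvas/", [this](const std::string& path,
                                        const std::string&)
    {
      _dirty.push_back(path);
    });
  }

  // Returns the number of pending changes and clears them.
  std::size_t update()
  {
    const std::size_t n = _dirty.size();
    _dirty.clear();
    return n;
  }

private:
  std::vector<std::string> _dirty;
};
```

In this sketch, a write such as `tree.set("/canvas/by-index/texture[0]/text/text", "Hello")` reaches the canvas, while a write outside the `/canvas/` prefix is ignored. The property path is only an example.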

Elements can be nested and added to groups which support showing/hiding and clipping of segments. Vector drawing is handled via ShivaVG.

All property updates result in native C++/OSG data structures being updated (typically using OSG/STL/Boost containers). The property tree and scripting are used solely to send update events, which keeps Canvas-based systems fast enough in practice, often delivering frame rates beyond roughly 40-60 fps.

Animations are currently not directly supported. Instead, they can be implemented by using separate canvas groups and hiding/showing them as needed, or simply by changing the size/color/styling attributes of a canvas group using Nasal timers. Another option for updating a canvas without relying on Nasal timers (e.g. due to GC considerations) is to use so-called "Property Rules", which are not yet exposed to Nasal but can be used wherever scripting overhead should be minimal.

The Canvas fully supports recursion, by allowing other canvases (and sub-regions of them via texture-mapping) to be referenced and used as raster images, so that multiple canvases can be chained together, but also through the notion of "groups", which are containers for other canvas elements, including child groups or elements referencing other canvases.

This can be particularly useful for projects requiring multi-texturing and other multi-pass texturing stages. This mechanism is also one of the main building blocks used by the MapStructure charting framework to implement caching support via texture maps, without needing any changes on the C++ side.

The canvas itself is developed with a focus on primarily being an enabling technology. In other words, the canvas is not about implementing individual features like a PFD, ND, EFIS, EICAS or other MFD instruments like a moving map or a GUI library. Rather, the Canvas system is all about coming up with a flexible and efficient system that allows end-users (aircraft developers and other base package contributors) to develop such features themselves in user space (i.e. the base package) via scripting - without having to be proficient C++ programmers, without having to rebuild FlightGear from source, and without having to be familiar with OpenSceneGraph, OpenGL or other technologies that typically involve a steep learning curve (i.e. STL/Boost).

This approach has several major advantages - in particular, it frees core developers from having to develop and maintain end-user features like a wxradar, groundradar or Navigational Display/PFD by empowering content (base package) developers to handle the implementation of such features themselves.

Thus, development of content is moved to user space (i.e. the base package). Recently, there has been a shift in this direction: rather than implementing end-user feature requests directly, more and more core developers implement the building blocks and infrastructure needed to delegate the implementation of these features to user space.

Besides, core developers are generally overstretched, and there are not enough core developers to handle all core development related tasks:

| Unfortunately, most of the active FG developers are currently very overstretched in terms of the areas that they have ownership of, which is affecting how much can actually be done. Fundamentally we need more core devs. [1] — Stuart Buchanan

|

- ↑ Stuart Buchanan (Thu, 25 Apr 2013 07:28:28 -0700). Atmospheric Light Scattering.

The only way to deal with this is to occasionally shift the core development focus from developing complex high-level end-user features (such as an ND, TCAS or WXRADAR) that take years to develop, to just providing lower-level APIs (like a navdb API or a 2D drawing API like Canvas) that enable base package developers to develop those high-level features themselves.

This is the route that seemed to work out fairly well for the local weather system, which was prototyped and implemented by a single base package developer in scripting space, who just asked for certain scripting hooks to be provided at some point.

For example, when Stuart, Torsten or Erik implemented LW-specific core extensions, these were about providing new hooks to be used by Thorsten. They didn't commit to implementing a weather system, they just enabled somebody else to continue his work. So this strategy is as much about delegation, as it is about organizing core development.

Core developers cannot possibly implement all the ideas and feature requests that end users may have, but they can at least provide a toolbox for base package developers to implement such features. Without doubt, implementing a WXRADAR, TCAS, AGRADAR or even a full ND/MFD is incredibly complex and time-consuming.

Exposing a 2D drawing API or a navdb API to base package developers would have been much simpler and less time-consuming, at the cost of possibly not providing certain instruments/features directly - while still providing the building blocks for skilled base package contributors to implement such instruments eventually within the base package, rather than within the C++ source code where evolution and maintenance of such instruments is inherently limited by the availability of C++ developers.

Given the progress we've seen in Canvas-related contributions boosted by having a 2D API, this is a very worthwhile route for developing MFD-style instruments or other end-user features without being limited by our shortage of core developers.

Furthermore, the amount of specialized code in the main FlightGear code base is significantly reduced and increasingly unified. One major aspect of adopting the Canvas system was Unifying the 2D rendering backend via canvas, so that more and more of the old/legacy code can be incrementally re-implemented and modernized through corresponding wrappers. This includes scripting-space frameworks for existing features like the HUD system, our existing PLIB/PUI-based GUI, the old 2D panels code and the Map dialog.

Many of these features are currently using legacy code that hasn't been maintained in years, causing issues when it comes to making use of certain OSG optimizations.

In addition, widgets and instruments will no longer be hard-coded, but rather "compiled" into hardware-accelerated Canvas data structures while being initialized, and will typically be animated using timers or listeners (via scripting or property rules). Because previously hard-coded widgets and instruments are now fully implemented in scripting space, deploying updates no longer necessarily requires manually installing binaries.

This is analogous to how more and more software programs, such as browsers like Firefox/Chrome, are using an increasingly scripted approach towards implementing functionality, i.e. using JavaScript/XUL to move the implementation of certain features out of native code.

Finally, an increasingly unified 2D rendering back-end also makes porting/re-targeting FlightGear more feasible, whether to mobile gaming platforms, mobile phones (e.g. Android) or embedded hardware like a Raspberry Pi. Without a unified 2D rendering back-end, every subsystem doing 2D rendering (HUD, cockpit, instruments, GUI etc.) would need to be ported manually.

A unified 2D rendering back-end using the Canvas system ensures instead that all Canvas-based features will remain functional, as long as the Canvas itself is able to run on the corresponding platform/hardware, because there's really just a single subsystem that handles all 2D rendering via different user-space wrappers, and that would need porting (e.g. to support OpenGL ES).

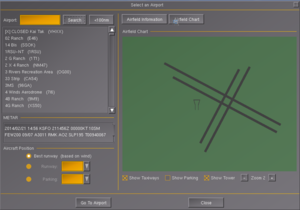

Also, GUI dialogs and instruments can make use of other Canvas-based features, e.g. for showing a GUI dialog on an instrument, or instruments in dialogs.

The property-tree-centric implementation approach of the Canvas also means that all Canvas-based frameworks could technically work in a standalone FGCanvas/FGPanel mode, but also in multi-instance (master/slave) setups such as those common for FSWeekend/LinuxTag. This is yet another novelty, because most existing hard-coded instruments cannot easily be modified to work in such multi-instance setups. The Canvas system, however, being based on the property tree, could retrieve property updates from external instances, e.g. via telnet/UDP or HLA, without requiring major re-architecting.

Frameworks

Obviously, the Canvas APIs themselves are not intended for specific end-user features like developing a PFD, ND or EICAS. Therefore, you will typically see wrappers implemented in scripting space for certain needs, i.e. Canvas frameworks intended to help with the development of certain types of instruments. Frameworks usually use the Canvas scripting-space API directly while providing a more concrete, use-case-specific API on top.

- Canvas EFIS Framework (2020/02): jsb

- Canvas MapStructure (2013/2014: Philosopher & Hooray)

- NavDisplay (2013/2014: Gijs, Hyde)

- Canvas GUI (2013-2015: TheTom)

- Canvas MCDU Framework (2012: TheTom)

Elements

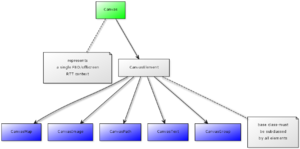

All new canvas elements need to implement the Canvas::Element interface (new elements can also sub-class existing elements, e.g. see the implementation of the Map and Window ($FG_SRC/Canvas) elements). The canvas system currently supports the following primitives (see $SG_SRC/canvas/elements):

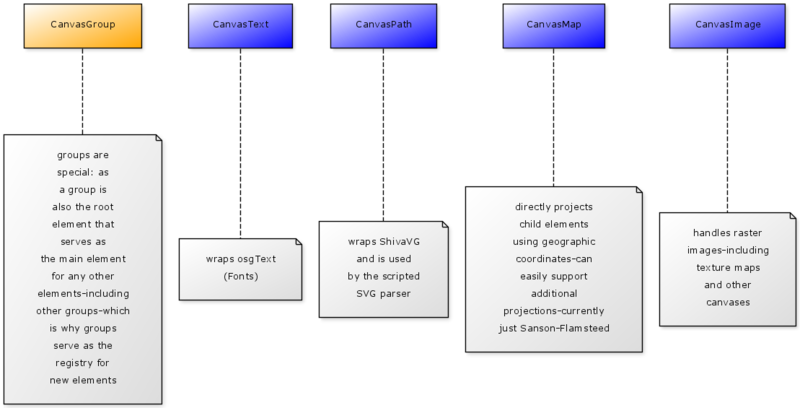

- CanvasGroup - main element: for grouping (a group of arbitrary canvas primitives, including other groups)

- CanvasText - for rendering texts (mapped to osgText)

- CanvasPath - for rendering vector graphics (mapped to OpenVG, currently also used to render SVGs into groups)

- CanvasMap - for rendering maps (automatic projection of geographic coordinates to screen coordinates, subclass of group)

- CanvasImage - for rendering raster images (mapped to osg::Image)

- CanvasWindow - this is part of $FG_SRC/Canvas/Window.?xx, it's a subclass of CanvasImage, used to implement windows (as of 05/2014 also to be found in simgear)

Most end-user features can be decomposed into lower-level components that need to be available in order to implement the corresponding feature in user-space.

Thus, the canvas system is based on a handful of rendering modes, each supporting different primitives. Each of those modes is implemented as a so-called "Canvas Element": a property-tree-controlled subtree of a canvas texture, using a certain name, that supports specific events and notifications.

According to the development philosophy outlined above, you obviously won't see new canvas elements that are highly use-case specific, such as a "night vision" or FLIR element. Instead, what is more likely to be supported are the lower-level building blocks that enable end users to create such features, i.e. by adding support for running custom effects/shaders and by rendering scenery views to canvas textures.

Adding a new Element

You will want to add a new Canvas::Element whenever you want to add support for features which cannot be currently expressed easily (or efficiently) using existing means (i.e. via existing elements and scripting space frameworks). For example, this may involve projects requiring camera support, rendering scenery views to a texture, rendering 3D models to a texture or doing a complete moving map with terrain elevations/height maps (even though the latter could be implemented by sub-classing Canvas::Image to some degree).

To add a new element, these are the main steps:

- Navigate to $SG_SRC/canvas/elements

- Create a new set of files myElement.cxx myElement.hxx

- add them to $SG_SRC/canvas/elements/CMakeLists.txt (as per Developing using CMake)

    diff --git a/simgear/canvas/elements/CMakeLists.txt b/simgear/canvas/elements/CMakeLists.txt
    index bd21c13..9fdd48d 100644
    --- a/simgear/canvas/elements/CMakeLists.txt
    +++ b/simgear/canvas/elements/CMakeLists.txt
    @@ -1,6 +1,7 @@
     include (SimGearComponent)
     set(HEADERS
    +  myElement.hxx
       CanvasElement.hxx
       CanvasGroup.hxx
       CanvasImage.hxx
    @@ -14,6 +15,7 @@ set(DETAIL_HEADERS
     )
     set(SOURCES
    +  myElement.cxx
       CanvasElement.cxx
       CanvasGroup.cxx
       CanvasImage.cxx
    @@ -23,4 +25,4 @@ set(SOURCES
     )

Next, open the header file and add a new Element class:

    #ifndef CANVAS_MYELEMENT_HXX_
    #define CANVAS_MYELEMENT_HXX_

    #include <simgear/props/propsfwd.hxx>
    #include "CanvasElement.hxx"

    #include <string>

    namespace simgear
    {
    namespace canvas
    {

      class myElement : public Element
      {
        public:
          // name of the property node that creates this element
          static const std::string TYPE_NAME;
          static void staticInit();

          myElement( const CanvasWeakPtr& canvas,
                     const SGPropertyNode_ptr& node,
                     const Style& parent_style = Style(),
                     Element* parent = 0 );
          virtual ~myElement();

        protected:
          virtual void update(double dt);

        private:
          // not copyable
          myElement(const myElement&) /* = delete */;
          myElement& operator=(const myElement&) /* = delete */;
      };

    } // namespace canvas
    } // namespace simgear

    #endif /* CANVAS_MYELEMENT_HXX_ */

Next, add the source file implementing the new myElement class:

    #include "myElement.hxx"

    #include <simgear/debug/logstream.hxx> // SG_LOG
    #include <simgear/props/props.hxx>
    #include <simgear/misc/sg_path.hxx>

    namespace simgear
    {
    namespace canvas
    {
      const std::string myElement::TYPE_NAME = "myelement";

      //----------------------------------------------------------------------------
      void myElement::staticInit()
      {
        if( isInit<myElement>() )
          return;
        // one-time setup for this element type goes here
      }

      //----------------------------------------------------------------------------
      myElement::myElement( const CanvasWeakPtr& canvas,
                            const SGPropertyNode_ptr& node,
                            const Style& parent_style,
                            Element* parent ):
        Element(canvas, node, parent_style, parent)
      {
        SG_LOG(SG_GENERAL, SG_ALERT, "New Canvas::myElement element added!");
      }

      //----------------------------------------------------------------------------
      myElement::~myElement()
      {
        SG_LOG(SG_GENERAL, SG_ALERT, "Canvas::myElement element destroyed!");
      }

      //----------------------------------------------------------------------------
      void myElement::update(double dt)
      {
        // empty for now; per-frame work goes here
      }

    } // namespace canvas
    } // namespace simgear

Next, edit CanvasGroup.cxx to register your new element (each canvas has a top-level root group, so that's how elements show up), navigate to Group::staticInit() and add your new element type there (don't forget to add your new header):

    diff --git a/simgear/canvas/elements/CanvasGroup.cxx b/simgear/canvas/elements/CanvasGroup.cxx
    index 51523f4..24e19d3 100644
    --- a/simgear/canvas/elements/CanvasGroup.cxx
    +++ b/simgear/canvas/elements/CanvasGroup.cxx
    @@ -21,6 +21,7 @@
     #include "CanvasMap.hxx"
     #include "CanvasPath.hxx"
     #include "CanvasText.hxx"
    +#include "myElement.hxx"
     #include <simgear/canvas/CanvasEventVisitor.hxx>
     #include <simgear/canvas/MouseEvent.hxx>
    @@ -60,6 +61,7 @@ namespace canvas
           return;
         add<Group>(_child_factories);
    +    add<myElement>(_child_factories);
         add<Image>(_child_factories);
         add<Map >(_child_factories);
         add<Path >(_child_factories);
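The registration step above boils down to a lookup table that maps an element's type name to a function creating it. The following self-contained sketch illustrates that idea only. It is not the SimGear implementation: `ToyElement`, `ToyImage`, `ToyMyElement`, `addFactory` and `createChild` are hypothetical names, and the real `add<T>()` helper and `_child_factories` member may differ in detail.

```cpp
#include <functional>
#include <map>
#include <memory>
#include <string>

// Hypothetical, simplified element hierarchy (not the SimGear classes).
struct ToyElement
{
  virtual ~ToyElement() = default;
  virtual std::string typeName() const = 0;
};

struct ToyImage : ToyElement
{
  static const std::string TYPE_NAME;
  std::string typeName() const override { return TYPE_NAME; }
};
const std::string ToyImage::TYPE_NAME = "image";

struct ToyMyElement : ToyElement
{
  static const std::string TYPE_NAME;
  std::string typeName() const override { return TYPE_NAME; }
};
const std::string ToyMyElement::TYPE_NAME = "myelement";

using ElementPtr = std::unique_ptr<ToyElement>;
using FactoryMap = std::map<std::string, std::function<ElementPtr()>>;

// Key the factory by the element's TYPE_NAME, so that the name of a newly
// added property node can select the class to instantiate.
template<class T>
void addFactory(FactoryMap& factories)
{
  factories[T::TYPE_NAME] = [] { return ElementPtr(new T); };
}

// Look up the node name; unknown names yield a null pointer.
inline ElementPtr createChild(const FactoryMap& factories,
                              const std::string& name)
{
  const auto it = factories.find(name);
  if (it == factories.end())
    return nullptr;
  return it->second();
}
```

With both types registered, `createChild(factories, "myelement")` returns a `ToyMyElement`. This is why the string in `TYPE_NAME` has to match the child name used from scripting space.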

Next, navigate to $FG_ROOT/Nasal/canvas/api.nas and extend the module to add support for your new element:

    diff --git a/Nasal/canvas/api.nas b/Nasal/canvas/api.nas
    index 85f336a..81c0fa0 100644
    --- a/Nasal/canvas/api.nas
    +++ b/Nasal/canvas/api.nas
    @@ -314,6 +314,18 @@ var Element = {
       }
     };
    +# myElement
    +# ==============================================================================
    +# Class for a myElement element on a canvas
    +#
    +var myElement = {
    +# public:
    +  new: func(ghost)
    +  {
    +    return { parents: [myElement, Element.new(ghost)] };
    +  },
    +};
    +
     # Group
     # ==============================================================================
     # Class for a group element on a canvas
    @@ -958,7 +970,8 @@ Group._element_factories = {
       "map": Map.new,
       "text": Text.new,
       "path": Path.new,
    -  "image": Image.new
    +  "image": Image.new,
    +  "myelement": myElement.new,
     };

Next, rebuild SG/FG and open the Nasal Console and run a simple demo to test your new element:

    var CanvasApplication = {
      ##
      # constructor
      new: func(x=300,y=200) {
        var m = { parents: [CanvasApplication] };
        m.dlg = canvas.Window.new([x,y],"dialog");
        m.canvas = m.dlg.createCanvas().setColorBackground(1,1,1,1);
        m.root = m.canvas.createGroup();
        ##
        # creates a new element
        m.myElement = m.root.createChild("myelement");
        m.init();
        return m;
      }, # new

      init: func() {
        var filename = "Textures/Splash1.png";
        # create an image child for the texture
        var child = me.root.createChild("image")
                           .setFile( filename )
                           .setTranslation(25,25)
                           .setSize(250,250);
      }, # init
    }; # end of CanvasApplication

    var splash = CanvasApplication.new(x:300, y:300);
    print("Script parsed");

You may also want to check out $FG_SRC/Scripting/NasalCanvas.?xx to learn more about exposing custom elements to scripting space via Nasal/CppBind. Next, you'll want to implement the update() method and the various notification methods supported by CanvasElement:

- childAdded

- childRemoved

- childChanged

- valueChanged

For event handling purposes, you'll also want to check out the following virtual CanvasElement methods:

- accept()

- ascend()

- traverse()

- handleEvent()

- hitBound()
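How the notification methods and update() typically work together can be sketched as follows. This is a self-contained illustration under stated assumptions, not SimGear code: `ToyNode`, `ToyElementBase` and `ToyCircle` are hypothetical, and the handler signatures are simplified (the real ones are declared in CanvasElement.hxx and take SGPropertyNode pointers). The pattern shown is to cache the new value and set a dirty flag in the notification handler, and to do the expensive work at most once per frame in update().

```cpp
#include <string>

// Hypothetical stand-in for a property node (name plus numeric value).
struct ToyNode
{
  std::string name;
  double value;
};

// Hypothetical base class that only mirrors the method names listed above.
class ToyElementBase
{
public:
  virtual ~ToyElementBase() = default;
  virtual void childAdded(const ToyNode&) {}
  virtual void childRemoved(const ToyNode&) {}
  virtual void childChanged(const ToyNode&) {}
  virtual void valueChanged(const ToyNode&) {}
  virtual void update(double dt) = 0;
};

// Example element: caches a "radius" property and defers the expensive
// work to update(), which is meant to run once per frame.
class ToyCircle : public ToyElementBase
{
public:
  void childChanged(const ToyNode& child) override
  {
    if (child.name == "radius")
    {
      _radius = child.value;
      _dirty = true;          // remember that the geometry must be rebuilt
    }
  }

  void update(double /*dt*/) override
  {
    if (!_dirty)
      return;                 // nothing changed since the last frame
    ++_rebuilds;              // placeholder for rebuilding the geometry
    _dirty = false;
  }

  double radius() const { return _radius; }
  int rebuilds() const { return _rebuilds; }

private:
  double _radius = 0.0;
  bool _dirty = false;
  int _rebuilds = 0;
};
```

Several property writes within one frame then cost only a single rebuild, which fits the goal of keeping scripting-driven updates cheap.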

Discussed Enhancements

| Note The features described in the following section aren't currently supported or being worked on, but they've seen lots of community discussion over the years, so that this serves as a rough overview. |

An integrated Canvas based launcher

Also see ticket #1295, Reset & re-init#Benefits and [1]

| people have been working on making external launchers obsolete for most use-cases in future versions, which is likely to materialize around FG 4.2+ according to recent discussions and commits.[1] — Hooray

|

| An integrated GUI launcher is something that we'd all like to see, and which we are working towards - it will probably happen within the next 12-18 months (just a guess), not because it's such a high priority, but because, by then, we should have all the pieces for it in place - and the 3-4 main core developers (main, in terms of activity) agree that this is the way forward, so from a middleware (Nasal/XML, Canvas) standpoint, we're going to help with creating a framework to parse our existing GUI dialogs and turn them into canvas-driven GUI widgets, which will need to be developed first of all though - that's the kind of stuff that Philosopher has been doing in his REPL interpreter: custom GUI work.

The chances are pretty good that the upcoming GUI will look much less 1940s style than the existing GUI, i.e. fairly modern actually. But the good news for you is that styling/skinnig is intended to be supported eventually. Tom has in fact added quite a bit of code to make that fast and flexible, not just Nasal, but also C++ - so he seems to consider that a priority, too.[2]— Hooray

|

| The main thing here is "run-time reinitialization" - i.e. having code that doesn't need to be shut down to change settings, but that simply fetches the new settings and applies them.

Zakalawe has been working towards this as part of the reset/re-init effort - mid-term, the idea is to make GUI launchers obsolete for most people, by providing an in-sim GUI launcher where such settings can be directly changed, including changing aircraft, but also saving/loading flights. For these reasons, most of us are not too eager to extend external GUI launchers. Canvas-wise, there isn't much missing to provide an integrated GUI launcher - what would be useful though, is having a new element that renders arbitrary 3D models/camera views to a texture, i.e. for aircraft/scenery previews. But otherwise, this is mostly a matter of having scripting (Nasal) and Canvas available earlier, so that a GUI launcher can created using ~200-300 lines of Nasal. This is also where we could provide a pulldown menu with settings for a handful of startup/run-time profiles. And of course there are still a handful of subsystems that cannot currently be re-intialized without shutting down the whole thing. For non programmers, it's helpful to imagine it like exchanging your car radio, seats, brakes or even engine, while going 100 mph - not impossible to pull off, but quite a few - especially because the "car" (FG) was never designed with this requirement in mind.[3]— Hooray

|

| I made some experiments with creating a GUI using Canvas, but it’s not an area I especially enjoy or feel comfortable working in, so progress was slow, and recently, non-existant. I have considered making a placeholder GUI using PUI widgets, which would only take a couple of days for something basic, but again hacking the PUI code is far from enjoyable.[4] — James Turner

|

Supporting Cameras

Note People interested in working on this may want to check out the following pointers:

|

Background:

- Howto:Use a Camera View in an Instrument

- $FG_ROOT/Docs/README.multiscreen

- http://docs.freeflightsim.org/flightgear/classflightgear_1_1CameraGroup.html

- https://gitorious.org/fg/zans-flightgear/commits/09832d8076a985a329500c027c1ed4f9b72bb1a9

- http://trac.openscenegraph.org/projects/osg/wiki/Support/ProgrammingGuide/ViewerVSCompositeViewer

Also see The FlightGear Rendering Pipeline

| I'm looking to replicate a camera with a fixed viewpoint from the aircraft. For example looking directly down.

Is there a way I can use some scripting method to call a new window displayed in the bottom right hand side of the screen showing a fixed camera view, without having to edit the preferences for my machine? I'd like it to be easily distributable. [5] — Avionyx

|

| I was wondering if it were possible to restrict the camera output to only one half of the running FG window? I'm hoping to do this so that I may have the map and route manager GUIs active in the other half, so that they aren't obscuring the camera view (and also have the entire HUD visible). So basically, half the window straight down the center - left half is just black, right half is the camera.

Although this would also be solved if there were an external FG dynamic navigational map program, that also displayed waypoints... (I don't think there is one, right?). Additionally, I would love to hear that this question can be answered with Nasal, as I really can't afford to edit the source code and recompile (it's for a project, and I have no admin rights on the laboratory machines).[6]— seabutler

|

| I'm trying to debug reflection shader I'm working on. I have a camera attached to a scene graph, which pre-renders (osg::Camera::PRE_RENDER) scene into offscreen surface (osg::Camera::FRAME_BUFFER_OBJECT); For a debugging purposes I have to see the result of that render pass.

I'm not very good yet in FG internal structure, so I'd like to ask - can this camera be somehow attached to FG camera views (v), or embedded as separate window ?[7] — Vladimir Karmisin

|

| I want to give access to every stage of the rendering to the effect system. The geometry pass outputs to render target, but the fog, the lights, the bloom need to have access to the textures of the buffer, and there is a separate one for each camera associated to windows or sub windows. [8] — Frederic Bouvier

|

| It would be nice if the Effects framework had a way to load arbitrary textures and make them available to effects. I don't know if there is a better way to create your texture offline than write C++ code in simgear. OSG will read a TIFF file with 32bits per component as a floating point texture... assuming you can create

such a thing.[9] — Tim Moore

|

| modify the Renderer class to separate from the scenegraph, terrain and models on one hand, the skydome and stars on the other, and finally the clouds.

These three elements are passed to the CameraGroup class in order to be treated separately in the new rendering engine (and put together in the current one).[10] — Frederic Bouvier

|

| I want to point out my work on my "newcameras" branch: https://gitorious.org/~zan/fg/zans-flightgear/commits/newcameras which allows user to define the rendering pipeline in preferences.xml. It does not (yet?) have everything Rembrandt's pipeline needs, but most likely is easily enhanced to support those things.

Basically this version adds support for multiple camera passes, texture targets, texture formats, passing textures from one pass to another etc, while preserving the standard rendering line if user wants that. I wish this work could be extended (or maybe even I can extend it ;) to handle the Rembrandt camera system. This will not solve all problems in the merge, but some of them.[11]— Lauri Peltonen

|

| I was not aware of your work. But given what you write here, this looks pretty promising. Fred mentioned your name in an offline mail. I would highly apprechiate that we do not lock out low end graphics boards by not having any fallback. May you both should combine forces?

From what I read, I think both are heading in the same global direction and both implementations have some benefits over the other?[12] — Mathias Fröhlich

|

| I would like to extend the format to avoid duplicating the stages when you have more than one viewport. What I see is to specify a pipeline as a template, with conditions like in effects, and have the current camera layout refer the pipeline that would be duplicated, resized and positioned for each declared viewport[13] — Frederic Bouvier

|

| Mapping cameras to different windows, which can be opened on arbitrary screens, will absolutely still be supported. I know that multi-GPU setups are

important for professional users and our demos.[14] — Tim Moore

|

| I believe that we need to distinguish between different render to texture cameras. Camera nodes must be accessible from within flightgear. That ones that will end in mfd displays or hud or whatever that is pinned into models. And one that are real application windows like what you describe - additional fly by view, and so on. And I believe that we should keep that separate and not intermix the code required for application level stuff with building of 3d models that do not need anything application level code to animate the models ... I think of some kind of separation that will also be good if we would do HLA between a viewer and an application computing physical models or controlling an additional view hooking into a federate ...[15] — Mathias Fröhlich

|

| I've done some work with setting up a model of a pan/tilt camera system that can point at a specific wgs84 point or along a specific NED vector

(i.e. nadir, or exactly at my shadow, etc.) This was [unfortunately] for a paid consulting project so that code doesn't live in the FlightGear tree. However, it's really easy to configure a view that stays locked on a specific lon/lat and I hacked a small bit of nasal to copy the point you click on over into the view target variables so you can click any where in the scene and the pan/tilt camera will hold center on that exact location. FlightGear offers a lot of flexibility and comparability in this arena.[16] — Curtis Olson

|

| Would it be possible to place the new "view" into a window instead of having a dedicated view? That would allow you to have an instrument panel with a blank cut-out that could hold this newscam/FLIR window.The easiest way to visualize the idea I have is to think about the view you'd see in one of the rear-view mirrors that most fighters have along the canopy bow (and the Spitfire has mounted on top of the canopy bow, outside the cockpit). You'd see your full screen view as usual, but you'd also have these "mirrors" showing the view behind you at the same time.[17] — Gene Buckle

|

| One thing we have to consider with rear view mirrors is that we don't currently have the ability to flip the display for the "mirror" affect.There's got to be a very simple view transform matrix that would invert the display in the horizontal direction. Probably the identity matrix with the appropriate axis negated (-1). It might be a relatively simple thing to add to the view transform pipeline at some point.[18] — Curtis Olson

|

| I had a look at the this idea a while back - the problem I came across was that the camera would show the view to the rear NOT the mirror image. I

couldn't see a way around that without a great deal of processing. At hat point I gave up.[19] — Vivian Meazza

|
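The horizontal flip discussed in the rear-view mirror quotes above amounts to an identity matrix with the X axis negated. The following self-contained sketch shows only the arithmetic. It uses plain C++ rather than OSG, and `Vec4`, `Mat4`, `horizontalFlip` and `transform` are hypothetical names. In OSG, the same matrix could presumably be built with `osg::Matrix::scale(-1, 1, 1)`; where in the view transform pipeline it would have to be applied is not settled by the discussion.

```cpp
#include <array>

using Vec4 = std::array<double, 4>;
using Mat4 = std::array<std::array<double, 4>, 4>;

// Identity matrix with the X axis negated: swaps left and right.
inline Mat4 horizontalFlip()
{
  Mat4 m{};                       // all elements start at zero
  m[0][0] = -1.0;
  m[1][1] =  1.0;
  m[2][2] =  1.0;
  m[3][3] =  1.0;
  return m;
}

// Multiply a column vector by the matrix (result = m * v).
inline Vec4 transform(const Mat4& m, const Vec4& v)
{
  Vec4 r{};
  for (int row = 0; row < 4; ++row)
    for (int col = 0; col < 4; ++col)
      r[row] += m[row][col] * v[col];
  return r;
}
```

Applying the flip twice returns the original point. Note that any reflection of this kind also reverses the winding order of triangles, so face culling would need to be adjusted for geometry rendered through it.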

- ↑ Hooray (Thu May 15, 2014 1:33 pm). Dev and test environment.

- ↑ Hooray (Thu May 15, 2014 1:33 pm). Dev and test environment.

- ↑ Hooray (Thu May 15, 2014 1:33 pm). Dev and test environment.

- ↑ James Turner (2014-05-24 09:33:20). 24 days from freeze: what happened to....

- ↑ Avionyx (Wed Mar 12, 2014 7:08 am). Sub window view.

- ↑ seabutler (Fri Jan 24, 2014 5:38 am). "Half" the FG window?.

- ↑ Vladimir Karmisin (Thu, 08 Jan 2009 05:17:07 -0800). FG - camera for debugging purposes..

- ↑ Frederic Bouvier (Sun, 01 Jan 2012 07:14:43 -0800). Announcing Project Rembrandt.

- ↑ Tim Moore (Tue, 24 Jul 2012 22:38:35 -0700). Functions to textures?.

- ↑ Frederic Bouvier (Wed, 07 Mar 2012 05:08:06 -0800). RFC: changes to views and cameras.

- ↑ Lauri Peltonen (Wed, 07 Mar 2012 04:58:44 -0800). Rembrandt the plan.

- ↑ Mathias Fröhlich (Wed, 07 Mar 2012 10:15:31 -0800). Rembrandt the plan.

- ↑ Frederic Bouvier (Wed, 07 Mar 2012 05:08:06 -0800). RFC: changes to views and cameras.

- ↑ Tim Moore (30 Jun 2008 22:46:34 -0700). RFC: changes to views and cameras.

- ↑ Mathias Fröhlich (30 Jun 2008 22:46:34 -0700). RFC: changes to views and cameras.

- ↑ Curtis Olson (Tue, 15 May 2012 14:19:34 -0700). LiDAR simulation in FG and powerline scenery.

- ↑ Gene Buckle (Thu, 23 Jul 2009 10:11:05 -0700). view manager "look at" mode.

- ↑ Curtis Olson (Thu, 23 Jul 2009 10:11:05 -0700). view manager "look at" mode.

- ↑ Vivian Meazza (Thu, 23 Jul 2009 10:11:05 -0700). view manager "look at" mode.

Effects / Shaders

| I'm currently experimenting with a 2D Canvas and rendering everything to a texture. For this I use FGODGauge to render to texture and FGODGauge::set_texture to replace a texture in the cockpit with the texture from the fbo. This works very well [...] I have just extended the ReplaceStaticTextureVisitor::apply(osg::Geode& node) method to also replace texture inside effects. It works now by using the same technique as for the SGMaterialAnimation where a group is placed in between the object whose texture should be changed and its parent. This group overrides the texture:

    virtual void apply(osg::Geode& node)
    {
      simgear::EffectGeode* eg =
        dynamic_cast<simgear::EffectGeode*>(&node);
      if( eg )
      {
        osg::StateSet* ss = eg->getEffect()->getDefaultStateSet();
        if( ss )
          changeStateSetTexture(ss);
      }
      else
        if( node.getStateSet() )
          changeStateSetTexture(node.getStateSet());
      int numDrawables = node.getNumDrawables();
      for (int i = 0; i < numDrawables; i++) {
        osg::Drawable* drawable = node.getDrawable(i);
        osg::StateSet* ss = drawable->getStateSet();
        if (ss)
          changeStateSetTexture(ss);
      }
      traverse(node);
    }

    stateSet->setTextureAttribute(0, _new_texture,
                                  osg::StateAttribute::OVERRIDE);
    stateSet->setTextureMode(0, GL_TEXTURE_2D, osg::StateAttribute::ON);

— Thomas Geymayer

|

| If you want to pass substantial amounts of data, I’d suggest to use a texture (with filtering disabled, probably) to pass the info. Since we don’t have much chance of using the ‘correct’ solution (UBOs) in the near future.

If you need help generating a suitable texture on the CPU side, let me know.[2] — James Turner

|
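As a rough illustration of the "pass the data in a texture" suggestion above, the CPU side could pack an array of floats into RGBA texels as sketched below. This assumes a floating-point RGBA texture format is available and that the shader samples it with filtering disabled (nearest neighbour); `DataTexture` and `packFloats` are hypothetical names, and uploading the buffer to the GPU is deliberately left out.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// CPU-side image holding arbitrary float data, four values per RGBA texel.
struct DataTexture {
    std::size_t width = 0;      // texels per row
    std::size_t height = 0;     // number of rows
    std::vector<float> texels;  // width * height * 4 floats
};

// Pack 'values' row by row into a texture that is at most 'maxWidth'
// texels wide. Unused components of the last texels are padded with zero.
DataTexture packFloats(const std::vector<float>& values, std::size_t maxWidth)
{
    DataTexture tex;
    if (values.empty() || maxWidth == 0)
        return tex;

    const std::size_t texelCount = (values.size() + 3) / 4;   // round up
    tex.width  = std::min(texelCount, maxWidth);
    tex.height = (texelCount + tex.width - 1) / tex.width;    // round up
    tex.texels.assign(tex.width * tex.height * 4, 0.0f);
    std::copy(values.begin(), values.end(), tex.texels.begin());
    return tex;
}
```

In the shader, value number i would then live in component i % 4 of texel i / 4, which is why linear filtering has to be off: interpolating between neighbouring texels would mix unrelated values.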

- ↑ Thomas Geymayer (Tue, 01 May 2012 15:34:41 -0700). Replace texture with RTT.

- ↑ James Turner (2014-03-07 10:27:40). Passing arrays to a shader.

At some point, the canvas system itself could probably benefit from being able to use the Effects/Shader framework, so that canvas textures can optionally be processed via effects and shaders before they get drawn. That should make all sorts of fancy effects possible, such as night vision cameras or thermal views, rendered to canvas textures/groups.

It is not yet clear how best to address this. The easiest option might be to specify via boolean properties whether effects or vertex/fragment shaders shall be invoked, along with their file names relative to $FG_ROOT.

That would then disable the default rendering pipeline for those canvas textures and use shaders instead.
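To make the property-based idea more concrete, here is a minimal sketch of how such settings might be read. Nothing like this exists in the Canvas code: the property names `shader/enabled`, `shader/vertex` and `shader/fragment` are invented for this example, and a plain `std::map` stands in for the property tree so that the snippet is self-contained.

```cpp
#include <map>
#include <string>

// Stand-in for a canvas' property subtree (hypothetical, not SGPropertyNode).
using Props = std::map<std::string, std::string>;

struct ShaderConfig {
    bool enabled = false;       // use shaders instead of the default pipeline
    std::string vertexPath;     // relative to $FG_ROOT, may be empty
    std::string fragmentPath;   // relative to $FG_ROOT
};

// Return the value stored under 'key', or an empty string if it is missing.
static std::string valueOrEmpty(const Props& props, const std::string& key)
{
    const auto it = props.find(key);
    return it == props.end() ? std::string() : it->second;
}

// Shaders are only enabled if the boolean property is set AND a fragment
// shader file name was given; otherwise the default pipeline stays active.
ShaderConfig readShaderConfig(const Props& props)
{
    ShaderConfig cfg;
    cfg.vertexPath   = valueOrEmpty(props, "shader/vertex");
    cfg.fragmentPath = valueOrEmpty(props, "shader/fragment");
    cfg.enabled = valueOrEmpty(props, "shader/enabled") == "true"
               && !cfg.fragmentPath.empty();
    return cfg;
}
```

Keeping the switch and the file names as separate properties would allow a shader to be toggled at runtime without losing its configuration.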

Basically, anything that's not directly possible via the core canvas system or via its Nasal wrappers would then be handled via effects/shaders, so we would gain lots of flexibility as well as performance benefits.

Ideally, there could be a simple interface class, so that these things can be customized via listeners, like the property-observer helper, just specific to enabling shaders for a canvas texture. People who want to create really fancy textures or camera views could then use effects/shaders, which would keep the design truly generic and ensure that no bloat is introduced into the main canvas system.

We did have some discussions about supporting per-canvas (actually per canvas::Element) effects and shaders via properties. TheTom even mentioned that he was interested in supporting this at some point, especially given the number of projects that could be realized like that (FLIR, night vision, thermal imaging etc). So far, however, quite a few other things are taking precedence, so, as far as I am aware, nobody is working on effects/shader support for canvas, even though I am sure that this would be highly appreciated.

At the time of writing (02/2014), the Canvas does not yet include any support for applying custom effects or shaders to canvas elements or the whole canvas itself. However, supporting this has been repeatedly discussed over time, so we're probably going to look into it eventually[2].

If the canvas can internally be referenced by a texture2D() call, then it should be easy - the fragment shader knows screen resolution pixel coordinates, so it's straightforward to look up the local pixel from the texture and then blur or recolor it, distort it or whatever you have in mind.

Menu lighting based on the light in the scene might be cool.

These shouldn't even be very complicated to do.

Assuming the canvas is internally a quad with a properly uv-mapped texture, then:

- the vertex shader can just pass everything through,

- uniform sampler2D myTexture; should make that texture available to the fragment shader (the sampler is named input_tex in the example below), and

- vec2 coords = gl_TexCoord[0].xy; should get the coordinates of the local pixel inside the texture.

    #version 120
    uniform sampler2D input_tex;

    void main() {
        // get the texture coords of the pixel
        vec2 coords = gl_TexCoord[0].xy;
        // look up the pixel color from the input texture
        vec4 color = texture2D(input_tex, coords);
        // and pass the pixel color through
        gl_FragColor = color;
    }

There are at least 2-3 people who can help with pointers, but we don't have time to implement this ourselves - so if anybody is interested, please get in touch via the canvas subforum.

The Effects framework is implemented in SimGear: http://gitorious.org/fg/simgear/trees/next/simgear/scene/material

    void main(void) {
        gl_FragColor = vec4(1.0, 0.0, 0.0, 1.0);
    }

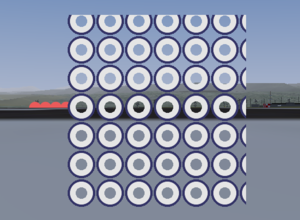

    // http://people.freedesktop.org/~idr/OpenGL_tutorials/03-fragment-intro.html
    void main(void)
    {
        vec2 pos = mod(gl_FragCoord.xy, vec2(50.0)) - vec2(25.0);
        float dist_squared = dot(pos, pos);
        if (dist_squared < 400.0)
            gl_FragColor = vec4(.90, .90, .90, 0.0);
        else
            gl_FragColor = vec4(.20, .20, .40, 0.8);
    }

Implementation-wise, supporting shaders per canvas seems straightforward, but it would probably be better to support shaders per element, where each element would render its own sub-texture if shaders/effects are specified, and apply the canvas' osg::StateSet otherwise. We could add an interface on top of SimGear's "Effects" framework which would be implemented by the Canvas itself, but also by Canvas::Element.

- Probably need to extend the Effects framework to support reloading effects/shaders from disk for testing purposes
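The per-element idea described above could be expressed with a small shared interface. The following is only a sketch of the structure: `EffectTarget`, `CanvasStub` and `ElementStub` are hypothetical stand-ins, not SimGear classes, and an effect is represented by nothing more than its file name.

```cpp
#include <string>

// Hypothetical interface for anything that can have an effect assigned.
class EffectTarget {
public:
    virtual ~EffectTarget() = default;

    void setEffect(const std::string& path) { _effect = path; }
    bool hasEffect() const { return !_effect.empty(); }
    const std::string& effect() const { return _effect; }

private:
    std::string _effect;   // effect file name, empty if none is set
};

// Stand-in for the canvas: it may carry a canvas-wide effect.
class CanvasStub : public EffectTarget {};

// Stand-in for a canvas element belonging to one canvas.
class ElementStub : public EffectTarget {
public:
    explicit ElementStub(const CanvasStub& canvas) : _canvas(canvas) {}

    // An element with its own effect would render into its own sub-texture.
    bool rendersOwnSubTexture() const { return hasEffect(); }

    // Without an effect of its own, the element falls back to the canvas.
    const std::string& activeEffect() const
    {
        return hasEffect() ? effect() : _canvas.effect();
    }

private:
    const CanvasStub& _canvas;
};
```

The fallback in `activeEffect()` mirrors the "apply the canvas' osg::StateSet otherwise" rule: elements only pay for a separate render pass when they actually request one.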

Also see:

- http://trac.openscenegraph.org/projects/osg/wiki/Support/Tutorials/ShadersIntroduction

- http://trac.openscenegraph.org/projects/osg/wiki/Support/Tutorials/ShadersUpdating

- http://en.wikipedia.org/wiki/OpenGL_Shading_Language

- http://www.cuboslocos.com/tutorials/OSG-Shader

- http://forum.flightgear.org/viewtopic.php?f=71&t=22166

- http://forum.flightgear.org/search.php?st=0&sk=t&sd=d&sr=posts&keywords=canvas+shader

- http://forum.flightgear.org/search.php?st=0&sk=t&sd=d&sr=posts&keywords=canvas+shaders

- http://forum.flightgear.org/search.php?st=0&sk=t&sd=d&sr=posts&keywords=canvas+effects

| References

|

Discussed new Elements

The previously mentioned primitives alone can already be used to create very sophisticated avionics and dialogs - however, depending on your needs, you may want to extend the canvas system to support additional primitives. Typically, you'll want to add new primitives in order to optimize performance or simplify the creation of more sophisticated avionics and/or dialogs (e.g. for mapping/charting purposes). If you are interested in adding new primitives, please take a look at the sources in $SG_SRC/canvas/elements.

For example, there's been talk about possibly adding the following additional primitives at some point. However, none of these are currently a priority or being worked on by anybody:

- support for a vertical mapping mode (e.g. to create Vertical Situation Displays or flight path evaluation dialogs); it would probably make sense to use PROJ4 for additional projection support

- support for rendering scenery views (e.g. for tail cameras or mirrors etc) [3] [4] ticket #1250

- support for ESRI shapefiles (instead of using shapelib, it would make sense to use GDAL/OGR here, or directly the OSG/ReaderWriterOGR plugin) [5] (FlightGear/osgEarth now depends on GDAL, so should be straightforward dependency-wise).

- support for GeoTIFF files or terrain height profiles using the tile cache

- rendering 3D objects

- support for orthographic moving map displays, e.g. using atlas [6] (ideally using CompositeViewer Support)

If you are interested in working on any of these, please get in touch via the canvas sub forum first.

There is already support for creating multiple osgviewer windows in FlightGear; this is commonly used in multiscreen setups. To support the creation and usage of osgviewer windows in Canvas, we would need to look into adding a new placement type to the canvas system, so that osgviewer/OS windows can be created and controlled via the canvas system and a handful of placement-specific properties [7].
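One way to picture a new placement type that is driven by "a handful of placement-specific properties" is a registry of factory functions keyed by the placement's type string. The sketch below is self-contained and does not use the real SimGear classes: `Placement`, `WindowPlacement`, `PlacementRegistry` and the property names `type`, `width` and `height` are all assumptions made for this example.

```cpp
#include <functional>
#include <map>
#include <memory>
#include <string>
#include <utility>

// Stand-in for the properties of one placement node.
using Props = std::map<std::string, std::string>;

struct Placement {
    virtual ~Placement() = default;
    virtual std::string describe() const = 0;
};

// Hypothetical placement that would show the canvas in its own OS window.
struct WindowPlacement : Placement {
    int width, height;
    WindowPlacement(int w, int h) : width(w), height(h) {}
    std::string describe() const override
    {
        return "window " + std::to_string(width) + "x" + std::to_string(height);
    }
};

using PlacementFactory = std::function<std::unique_ptr<Placement>(const Props&)>;

class PlacementRegistry {
public:
    void add(const std::string& type, PlacementFactory factory)
    {
        _factories[type] = std::move(factory);
    }

    // Returns nullptr if no "type" is given or the type is unknown.
    std::unique_ptr<Placement> create(const Props& props) const
    {
        const auto type = props.find("type");
        if (type == props.end())
            return nullptr;
        const auto factory = _factories.find(type->second);
        return factory == _factories.end() ? nullptr : factory->second(props);
    }

private:
    std::map<std::string, PlacementFactory> _factories;
};

// Read an integer property, falling back to a default if it is missing.
int intProp(const Props& props, const std::string& key, int fallback)
{
    const auto it = props.find(key);
    return it == props.end() ? fallback : std::stoi(it->second);
}

void registerWindowPlacement(PlacementRegistry& registry)
{
    registry.add("window", [](const Props& props) {
        return std::unique_ptr<Placement>(
            new WindowPlacement(intProp(props, "width", 640),
                                intProp(props, "height", 480)));
    });
}
```

With such a registry, adding a window placement would not require touching the code that handles the existing placement types; a real implementation would create and manage the osgviewer window inside the factory.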

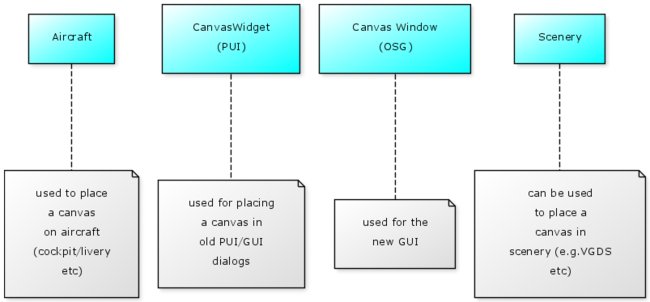

Placements

Obviously, users can use the canvas system for developing all sorts of features that may need to be accessible using different interfaces. For this reason, the canvas uses the concept of so-called placements, so that a canvas texture can be shown inside GUI windows, GUI dialogs, cockpits, aircraft textures (liveries), and also as part of the scenery (e.g. for a VGDS).

| Note The features described in the following section aren't currently supported or being worked on, but they've seen lots of community discussion over the years, so that this serves as a rough overview. |

Scenery Overlays

Also see Photoscenery via Canvas? and A project to create a source of free geo-referenced instrument charts

| Texture overlays - FG scenery engine does the chopping and texture co-ord generation. [1] — Paul Surgeon

|

| I am looking for a method for adding a graphical overlay channel to Flightgear. This overlay would consist of a dynamic texture that can be modified in real time. I've used other OpenGL based systems with this feature but don't know where to start with implementing it in Flightgear.[2] — Noah Brickman

|

| Once the frame is converted to an opengl texture, then it would be a very simple matter of displaying it on the screen with a textured rectangle drawn in immediate mode ... possibly with some level of transparency, or not ...

I'm involved in some UAV research where we are using FlightGear to render a synthetic view from the perspective of a live flying uav. Would be really cool to super impose the live video over the top of the FlightGear synthetic view. Or super impose an F-16 style HUD on top of the live video ... I have lots of fun ideas for someone with a fast frame grabber and a bit of time [...] Then do whatever bit fiddling is needed to scale/convert the raster image to an opengl texture. Then draw this texture on a quad that is aligned correctly relative to the camera. It might be possible to get fancy and alpha blend the edges a bit. Given an image and the location and orientation of the camera, it would be possible to locate world coordinates across a grid on that image. That would allow a quick/crude orthorectification where the image could be rubber sheeted onto the terrain. This would take some offline processing, but you could end up building up a near real time 3d view of the world than could then be viewed from a variety of perspectives. The offline tools could update the master images based on resolution or currency ... that's probably a phd project for someone, but many of the pieces are already in place and the results could be extremely nice and extremely useful (think managing the effort to fight a dynamic forest fire, or other emergency/disaster management, traffic monitoring, construction sites, city/county management & planning, etc.) I could even imagine some distrubuted use of this so that if you have several uav's out flying over an area, they could send their imagery back to a central location to update a master database ... then the individual operators could see near real time 3d views of places that another uav has already overflown. If we started building up more functionality in this area, there are a lot of different directions we could take it, all of which could be extremely cool.[3]— Curtis Olson

|

| Could we generate the texture on the fly? Based on landclass and road data? I could see a number of advantages/disadvantages here as compared to our current, generic textures: [...][4] — Thomas Albrecht

|

| A very interesting idea - so interesting I thought of it and discussed it with some people last year :) The summary answer is, it should be possible, it would have pretty much the benefits and drawbacks you mention (especially the VRAM consumption), and it would allow nice LoD and solve some other issues. Especially it avoids the nasty clipping issues we have with surface data in TerraGear, since you just paint into the texture, no need to clip all the linear data.[5] — James Turner

|

What we could do is identify which hooks are needed to make this work and provide those via the Canvas system: Canvas textures can already be placed in the scenery, so there should be very little needed in terms of placement-specific attributes, and the corresponding code should be available in SimGear/FlightGear already.

The patch required to modify FlightGear obviously already uses shaders and effects, and it's mostly about exposing additional parameters to the shaders.

- ↑ Paul Surgeon. Scenery engine features..

- ↑ Noah Brickman. Overlay Plane.

- ↑ Curtis Olson (Fri, 25 Jan 2008 07:51:41 -0800). Replace fg visualization with streaming video. http://www.mail-archive.com/flightgear-devel@lists.sourceforge.net/msg15459.html

- ↑ Thomas Albrecht. Generating ground textures on the fly?.

- ↑ James Turner. Generating ground textures on the fly?.

Native Windows

| Note People interested in working on this may want to check out the following files: |

Currently, all placements are within the main FlightGear window. However, there's been talk about providing support for additional Canvas placements, such as osgviewer placements, to help generalize our window management routines, so that a canvas can be rendered inside a dedicated OS window:

| Support multiple views/windows: Currently the GUI can only be placed inside one view/window (see Docs/README.multiscreen) but it would be nice to be able to move windows between views.[1] — Thomas Geymayer

|

| I have just been trying out the multiple screen feature in FG. I found that the GUI camera (including the menu bar, hud and 2D panel) appears in only one of the windows. Is there any way I can make the GUI to appear in all the windows? Actually I want to be able to view the hud and 2D panel in all the windows.[2] — Kavya Meyyappan

|

| there's a limitation in Plib that forces the GUI to be drawn on one window.[3] — Tim Moore

|

| I think you have just summarized all the limitations of the FlightGear multi-camera/view/display system. I know that in the case of menus, hud, 2d instrument panels, there would need to be some significant code restructuring to allow these to be displayed on other windows.[4] — Curtis Olson

|

| Good thing to have!!! Just still support graphics context on different screens/displays too ...[5] — Mathias Fröhlich

|

| it can be solved by using multiple osg windows to contain whatever GUI solution we go with - canvas, osgWidget or PUI-port.

Or to put it another way - the actual hard part is running the widgets in the main OpenGL window - which *is* a requirement for full-screen apps and multi-monitor setups. (Some people have claimed otherwise, but I believe we need the option of 'in-window' UI for many cases). So, this is a desirable feature, but doesn't dictate the choice of GUI technology. And can be done as a separate step from replacing PLIB.[6]— James Turner

|

- ↑ Thomas Geymayer (07-30-2012). Switching from PUI to osgWidget.

- ↑ Kavya Meyyappan (Fri, 19 Mar 2010 03:31:50 -0700). [Flightgear-devel] Help needed with multi-screen.

- ↑ Tim Moore (Sat, 20 Mar 2010 01:42:31 -0700). Re: [Flightgear-devel] Help needed with multi-screen.

- ↑ Curtis Olson (Fri, 19 Mar 2010 08:36:22 -0700). Re: [Flightgear-devel] Help needed with multi-screen.

- ↑ Mathias Fröhlich (Sat, 28 Jun 2008 00:05:19 -0700). Re: [Flightgear-devel] RFC: changes to views and cameras.

- ↑ James Turner (Wed, 25 Jul 2012 02:28:42 -0700). Switching from PUI to osgWidget.

Placement/Element for Streaming: Computer Vision

| Note There seem to be two main use-cases discussed by contributors: 1) The UAV guys want to view/use external live video inside FlightGear as an instrument/texture (which would require a new Canvas::Element to render an external video stream to a canvas) and 2) the computer vision (OpenCV) guys want to stream FlightGear live video itself to another application for image processing purposes - the latter would require streaming FlightGear's main window view to an external program (i.e. by using FlightGear's CameraGroup code), possibly by using a corresponding "virtual Placement" that opens a socket to provide a live stream of the FlightGear main window via background thread. |

People interested in doing UAV work that involves computer vision (e.g. using OpenCV, see ticket #924, [8], [9]) will probably also want to look into using a dedicated Canvas placement for this, in combination with adding a dedicated Canvas::Element to render scenery views to a texture using CompositeViewer Support - these two features would provide a straightforward mechanism to export a live video stream of FlightGear via a dedicated port.

| Note There were several early attempts at bringing streaming capabilities to FlightGear in the pre-OSG days that are meanwhile unmaintained, e.g.: |

| I'm new to FlightGear, and am trying to use it as an image generator for a simulator I'm developing...I've got it configured to take inputs from a UDP port to fly, but I want to disable a lot of features so that all FlightGear does is draw scenery.[1] — Drew

|

| I would like to use FlightGear to generate the scene observed by a UAV's onboard camera. Basically, this would translate to feeding FlightGear the FDM data and visualizing the image generated by FlightGear in another computer, across a network, using for example streaming video. I suppose this is a bit of a far-fetched idea, but is there any sort of support for this (or something similar) already implemented?[2] — Antonio Almeida

|

| I am interested in using it as a visualization tool for UAV's. I would like to replace the fg scenery with images captured from a camera onboard an aircraft. I was wondering if there is any way to import images into flightgear on the fly. The basic goal would be to show live video where available and fall over to flight gear visuals when the feed is lost (using a custom view from the camera perspective).[3] — STEPHEN THISTLE

|

| I'm hooking up a lumenera Camera for a live video feed from a UAV, so that the video gets handed to Flightgear, which then draws its HUD over the video stream. In order to do this, I need to be able to communicate with the window controls. My camera can display the video in a new window, but I want it to draw to the video screen that Flightgear is already using.[4] — Bruce-Lockhart

|

| I don't think there's any current way to do this. However, I think what is needed is to link in some video capture library to do frame grabs from your video camera as quickly as possible. Then do whatever bit fiddling is needed to scale/convert the raster image to an opengl texture. Then draw this texture on a quad that is aligned correctly relative to the camera. It might be possible to get fancy and alpha blend the edges a bit.

Given an image and the location and orientation of the camera, it would be possible to locate world coordinates across a grid on that image. That would allow a quick/crude orthorectification where the image could be rubber sheeted onto the terrain. This would take some offline processing, but you could end up building up a near real time 3d view of the world than could then be viewed from a variety of perspectives. The offline tools could update the master images based on resolution or currency ... that's probably a phd project for someone, but many of the pieces are already in place and the results could be extremely nice and extremely useful (think managing the effort to fight a dynamic forest fire, or other emergency/disaster management, traffic monitoring, construction sites, city/county management & planning, etc.) I could even imagine some distrubuted use of this so that if you have several uav's out flying over an area, they could send their imagery back to a central location to update a master database ... then the individual operators could see near real time 3d views of places that another uav has already overflown. If we started building up more functionality in this area, there are a lot of different directions we could take it, all of which could be extremely cool.[5]— Curtis Olson

|

| Getting live video onto a texture is pretty standard stuff in the OpenSceneGraph community[6] — Tim Moore

|

| I imagined embedding some minimal routine that talks to the camera and grabs an image frame. Then usually you can directly map this into an opengl texture if you figure out the pixel format of your frame grab and pass the right flags to the opengl texture create call. Then you should be able to draw this texture on top of any surface just like any other texture ... you could map it to a rectangular area of the screen, you could map it to a rotating cube, map it to the earth surface, etc. That's about as far as far as I've gone with thinking through the problem.[7] — Curtis Olson

|

| I want to draw something in the front face of the FlightGear view, but I don't want to recompile / modify any code, so, if FlightGear could give me an interface to draw something myself through a DLL, that would be perfect.[8] — CHIV

|
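The "bit fiddling" step mentioned in the quotes above, converting a grabbed frame into something that can be used as an OpenGL texture, might look like the following sketch. It assumes a hypothetical camera that delivers tightly packed 8-bit BGR frames with the first row at the top, and converts them to RGBA with the first row at the bottom, which is how OpenGL addresses texture rows. The function name `bgrToRgbaFlipped` is made up for this example, and grabbing the frame and uploading the result are separate steps not shown here.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Convert a top-down BGR frame into a bottom-up, fully opaque RGBA buffer.
// Returns an empty vector if the input is too small for the given size.
std::vector<std::uint8_t> bgrToRgbaFlipped(const std::vector<std::uint8_t>& bgr,
                                           std::size_t width,
                                           std::size_t height)
{
    if (bgr.size() < width * height * 3)
        return {};

    std::vector<std::uint8_t> rgba(width * height * 4);
    for (std::size_t y = 0; y < height; ++y) {
        const std::size_t dstRow = height - 1 - y;    // flip vertically
        for (std::size_t x = 0; x < width; ++x) {
            const std::size_t src = (y * width + x) * 3;
            const std::size_t dst = (dstRow * width + x) * 4;
            rgba[dst + 0] = bgr[src + 2];   // red
            rgba[dst + 1] = bgr[src + 1];   // green
            rgba[dst + 2] = bgr[src + 0];   // blue
            rgba[dst + 3] = 255;            // opaque alpha
        }
    }
    return rgba;
}
```

Depending on the camera, the conversion may be unnecessary: if the texture upload call accepts the frame's native pixel format, as suggested in the discussion, the frame can be passed on unchanged.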

- ↑ Drew (Tue, 25 Jan 2005 09:24:30 -0800). Disabling functionality.

- ↑ Antonio Almeida (Tue, 22 May 2007 10:14:46 -0700). Flightgear visualization as streaming video.

- ↑ STEPHEN THISTLE (Fri, 25 Jan 2008 06:32:03 -0800). Replace fg visualization with streaming video.

- ↑ cullam Bruce-Lockhart (Tue, 29 Jul 2008 09:23:54 -0700). Window controls.

- ↑ Curtis Olson (Fri, 25 Jan 2008 07:51:41 -0800). Replace fg visualization with streaming video. http://www.mail-archive.com/flightgear-devel@lists.sourceforge.net/msg15459.html

- ↑ Tim Moore (Fri, 25 Jan 2008 08:31:40 -0800). Replace fg visualization with streaming video.

- ↑ Curtis Olson. Window controls.

- ↑ CHIV (Thu May 08, 2014 3:03 am). One suggestion: FlightGear wolud support plugins like this!.

Adding new Placements

Projections

Also see ticket #550 and http://docs.freeflightsim.org/simgear/classsimgear_1_1canvas_1_1Projection.html

Adding new Projections

| Note Discuss base class that needs to be implemented |
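Until this section is written, the following sketch may help to illustrate the general shape of a projection: a base class with a single "world to screen" conversion, and one concrete projection derived from it. The interface shown is a simplified stand-in and not the SimGear base class (see the Doxygen link above for the real one); `ProjectionSketch`, `SinusoidalSketch` and the spherical earth radius are assumptions made for this example.

```cpp
#include <cmath>

const double kPi = 3.14159265358979323846;
const double kDegToRad = kPi / 180.0;
const double kEarthRadiusM = 6371000.0;   // mean radius, spherical model

struct ScreenPos {
    double x;   // metres east of the reference point
    double y;   // metres north of the reference point
};

// Simplified stand-in for a projection base class.
class ProjectionSketch {
public:
    virtual ~ProjectionSketch() = default;
    virtual ScreenPos worldToScreen(double latDeg, double lonDeg) const = 0;
};

// Sinusoidal projection centred on a reference position: the north-south
// distance is kept as-is, the east-west distance shrinks with cos(latitude).
class SinusoidalSketch : public ProjectionSketch {
public:
    SinusoidalSketch(double refLatDeg, double refLonDeg)
        : _refLat(refLatDeg), _refLon(refLonDeg) {}

    ScreenPos worldToScreen(double latDeg, double lonDeg) const override
    {
        const double lat  = latDeg * kDegToRad;
        const double dLat = (latDeg - _refLat) * kDegToRad;
        const double dLon = (lonDeg - _refLon) * kDegToRad;
        return { kEarthRadiusM * dLon * std::cos(lat),
                 kEarthRadiusM * dLat };
    }

private:
    double _refLat;   // degrees
    double _refLon;   // degrees
};
```

The sketch ignores wrap-around at the antimeridian and any rotation or scaling to screen pixels, both of which a usable projection would have to handle.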

Event Handling

| Note Discuss CanvasEventManager, CanvasEvent, CanvasEventVisitor |

Canvas Integration

| Note Discuss FGCanvasSystemAdapter |