Talk:Resource Tracking for FlightGear: Difference between revisions

Revision as of 15:07, 23 August 2015

Nasal

A while ago, I played with tracking the duration of Nasal callback execution, to get a list of scripts that tend to trigger the GC more frequently (i.e. cause higher "GC pressure"). It's quite obvious that some scripts and functions have a higher probability of triggering the Nasal GC than others. This includes some of your LW/AW loops: some of them trigger the GC very rarely, while others trigger it regularly. It shouldn't be too hard to adapt that code and expose additional GC internals to the property tree, so that we know how much memory is consumed per memory pool, and possibly even per loaded script or submodule.
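The bookkeeping described above could be sketched roughly as follows. This is a standalone illustration, not the code that was actually used: `ScriptStats`, `GCPressureTracker` and `timeCallbackMs` are hypothetical names, and the sketch assumes the caller can tell whether the GC ran during a given callback (for example by comparing a GC counter before and after the call).

```cpp
#include <chrono>
#include <cstddef>
#include <map>
#include <string>

// Hypothetical per-script statistics; none of these names exist in FlightGear.
struct ScriptStats {
    std::size_t calls;       // number of callback invocations
    std::size_t gcTriggers;  // invocations during which the GC ran
    double totalMs;          // accumulated execution time in milliseconds
    ScriptStats() : calls(0), gcTriggers(0), totalMs(0.0) {}
};

class GCPressureTracker {
public:
    // Record one callback run for the given script.
    void record(const std::string& script, double elapsedMs, bool gcRan) {
        ScriptStats& s = _stats[script];
        ++s.calls;
        s.totalMs += elapsedMs;
        if (gcRan) ++s.gcTriggers;
    }

    // Fraction of invocations that triggered the GC (0.0 for unknown scripts).
    double gcPressure(const std::string& script) const {
        std::map<std::string, ScriptStats>::const_iterator it = _stats.find(script);
        if (it == _stats.end() || it->second.calls == 0) return 0.0;
        return static_cast<double>(it->second.gcTriggers) / it->second.calls;
    }

private:
    std::map<std::string, ScriptStats> _stats;
};

// Run a callback and return its wall-clock duration in milliseconds.
template <typename Callback>
double timeCallbackMs(Callback cb) {
    std::chrono::steady_clock::time_point start = std::chrono::steady_clock::now();
    cb();
    std::chrono::duration<double, std::milli> elapsed =
        std::chrono::steady_clock::now() - start;
    return elapsed.count();
}
```

The per-script ratio returned by `gcPressure()` is the kind of value that could then be written to the property tree, one node per script.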

VRAM tracking (pm2wiki)

Looking at the wiki, the patch you've updated should now be fairly easy to extend to report VRAM utilization, too. Are you on NVIDIA or ATI/AMD hardware?

Here are code snippets for AMD/ATI and NVIDIA, which can probably be copied directly into the ::update() method of the LinuxMemoryInterface class: http://www.geeks3d.com/20100531/program ... in-opengl/

This would give you the amount of VRAM that is available/used, which should also be useful for troubleshooting/feature-scaling purposes.

The "OOP way" of doing this would be to come up with a "GPUInfo" base class and derive 2-3 classes from it for ATI/AMD, NVIDIA and Intel, i.e. stubs to be filled in for each vendor:

class GPUInfo {
public:
    // virtual destructor, so that deleting through a GPUInfo* is well-defined
    virtual ~GPUInfo() {}
    virtual int getVRAMUsageInKB() = 0;
};

class NVIDIA_GPU : public GPUInfo {
public:
    virtual int getVRAMUsageInKB() {
        SG_LOG(SG_GENERAL, SG_ALERT, "NVIDIA function not yet implemented!");
        return 100; // placeholder
    }
};

class ATI_GPU : public GPUInfo {
public:
    virtual int getVRAMUsageInKB() {
        SG_LOG(SG_GENERAL, SG_ALERT, "ATI function not yet implemented!");
        return 200; // placeholder
    }
};

class INTEL_GPU : public GPUInfo {
public:
    virtual int getVRAMUsageInKB() {
        SG_LOG(SG_GENERAL, SG_ALERT, "Intel function not yet implemented!");
        return 500; // placeholder
    }
};

(The placeholder return values 100, 200 and 500 would be replaced with the corresponding vendor-specific code.)
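As a rough idea of what that vendor-specific code might look like, here is a minimal sketch based on the GL_NVX_gpu_memory_info and GL_ATI_meminfo extensions. The enum values are taken from the extension specifications; the `GetIntegervFn` indirection and the function names are hypothetical and only exist so that the snippet compiles without OpenGL headers. In real code one would pass `glGetIntegerv` with a current GL context, after checking that the extension is actually advertised.

```cpp
// Enum values from the GL_NVX_gpu_memory_info and GL_ATI_meminfo specs.
const unsigned int GPU_MEMORY_INFO_TOTAL_AVAILABLE_MEMORY_NVX   = 0x9048;
const unsigned int GPU_MEMORY_INFO_CURRENT_AVAILABLE_VIDMEM_NVX = 0x9049;
const unsigned int TEXTURE_FREE_MEMORY_ATI                      = 0x87FC;

// Hypothetical indirection with the same shape as glGetIntegerv(GLenum, GLint*).
typedef void (*GetIntegervFn)(unsigned int pname, int* params);

// NVIDIA: both values are reported in KB, so usage = total - currently free.
int nvidiaVRAMUsageInKB(GetIntegervFn getIntegerv) {
    int totalKB = 0;
    int freeKB = 0;
    getIntegerv(GPU_MEMORY_INFO_TOTAL_AVAILABLE_MEMORY_NVX, &totalKB);
    getIntegerv(GPU_MEMORY_INFO_CURRENT_AVAILABLE_VIDMEM_NVX, &freeKB);
    return totalKB - freeKB;
}

// ATI/AMD: the query fills four ints; the first one is the total free
// memory in the texture pool, in KB. The extension reports free memory
// only, so usage cannot be derived from this value alone.
int atiFreeTextureMemoryInKB(GetIntegervFn getIntegerv) {
    int info[4] = {0, 0, 0, 0};
    getIntegerv(TEXTURE_FREE_MEMORY_ATI, info);
    return info[0];
}
```

Note that the ATI/AMD path yields free rather than used memory, so an `ATI_GPU::getVRAMUsageInKB()` built on it would need the total from somewhere else, or the interface would have to report free memory instead.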

The LinuxMemoryInterface::LinuxMemoryInterface() constructor would need to read the gl-vendor property (as shown in the help/about dialog) and instantiate the matching class. For that, the base class needs a pointer member that holds the object:

 class MemoryInterface {
+public:
+  // start with no GPU backend, so that _gpu is never left uninitialized
+  MemoryInterface() : _gpu(NULL), _total_size(0), _swap_size(0) {}
+  typedef std::map<const char*, double> RamMap;
+//protected:
+  virtual void update() = 0;
+
+  double getTotalSize() const {return _total_size;}
+  //virtual void setTotalSize(double t) {_total_size=t;}
+
+  double getSwapSize() const {return _swap_size;}
+  //virtual void setSwapSize(double s) {_swap_size=s;}
+protected:
+  RamMap _size;
+  std::string _path;
+  std::stringstream _pid;
+  GPUInfo* _gpu;
+
+  double _total_size;
+  double _swap_size;
+};

Next, we would need to change the constructor there to read the GL-vendor property and dynamically select the correct GPU class (ATI/AMD, NVIDIA or Intel):

+ MemoryInterface() {

+ SG_LOG(SG_GENERAL, SG_ALERT, "TODO: Check for GPU vendor here, and dynamically allocate correct class");

+ }

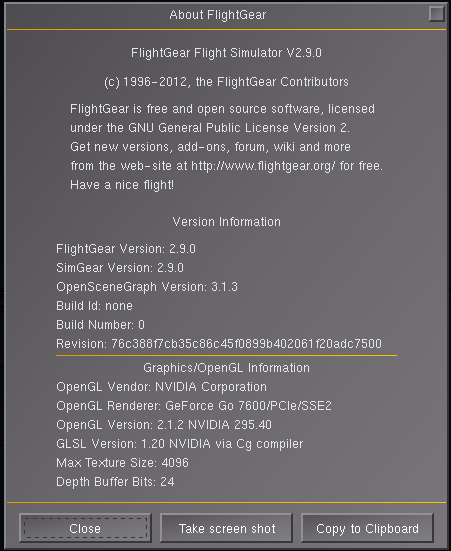

For that, we need to know the correct property to check, for instance, here's the help/about dialog showing the gl-vendor property:

The dialog is defined in $FG_ROOT/gui/dialogs/about.xml; the exact line (and thus the property) is: http://sourceforge.net/p/flightgear/fgdata/ci/next/tree/gui/dialogs/about.xml#l196

So, a corresponding check would have to do a case-insensitive search for the "NVIDIA" token as a substring of the property /sim/rendering/gl-vendor, which can be read using the fgGetString() API: http://wiki.flightgear.org/Howto:Work_with_the_Property_Tree_API#Strings

#include <algorithm>
#include <cctype>
#include <string>

MemoryInterface() : _gpu(NULL) {
    // default value, used if the property is not set
    std::string fallback = "NVIDIA";
    // get the actual GL vendor string from the property tree, using the fallback
    std::string glvendor = fgGetString("/sim/rendering/gl-vendor", fallback.c_str());
    // make it upper case: http://stackoverflow.com/questions/735204/convert-a-string-in-c-to-upper-case
    std::transform(glvendor.begin(), glvendor.end(), glvendor.begin(), ::toupper);
    // look for the "NVIDIA" substring: http://www.cplusplus.com/reference/string/string/find/
    std::size_t found = glvendor.find("NVIDIA");
    if (found != std::string::npos) {
        SG_LOG(SG_GENERAL, SG_ALERT, "Supported GPU found: NVIDIA");
        _gpu = new NVIDIA_GPU;
    }
    else {
        // _gpu stays NULL for unsupported vendors
        SG_LOG(SG_GENERAL, SG_ALERT, "Unsupported GPU vendor: " << glvendor);
    }
}

// the destructor would need to be changed to delete the _gpu pointer:
LinuxMemoryInterface::~LinuxMemoryInterface() {
    delete _gpu;
}
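The vendor check shown earlier only handles NVIDIA. A minimal sketch of how it might be extended to all three vendors is given below. The `GPUVendor` enum and `detectVendor()` are hypothetical helpers, and the token list is an assumption: real gl-vendor strings vary between drivers (Mesa-based drivers in particular may report something else entirely), so it would need checking against actual hardware.

```cpp
#include <algorithm>
#include <cctype>
#include <string>

// Hypothetical vendor IDs, used to pick the matching GPUInfo subclass.
enum GPUVendor { VENDOR_UNKNOWN, VENDOR_NVIDIA, VENDOR_ATI, VENDOR_INTEL };

// The unsigned char cast avoids undefined behaviour for negative char values.
static char toUpperChar(char c) {
    return static_cast<char>(std::toupper(static_cast<unsigned char>(c)));
}

// Map a GL vendor string to a vendor ID, ignoring case.
GPUVendor detectVendor(std::string glvendor) {
    std::transform(glvendor.begin(), glvendor.end(), glvendor.begin(), toUpperChar);

    if (glvendor.find("NVIDIA") != std::string::npos) return VENDOR_NVIDIA;
    if (glvendor.find("INTEL") != std::string::npos) return VENDOR_INTEL;

    // "ATI" is only matched as a prefix: as a plain substring it would also
    // match the "ATI" inside "CORPORATION" (e.g. "NVIDIA CORPORATION").
    if (glvendor.compare(0, 3, "ATI") == 0 ||
        glvendor.find("AMD") != std::string::npos ||
        glvendor.find("ADVANCED MICRO DEVICES") != std::string::npos) {
        return VENDOR_ATI;
    }
    return VENDOR_UNKNOWN;
}
```

The constructor could then switch on the returned value to allocate NVIDIA_GPU, ATI_GPU or INTEL_GPU, and leave `_gpu` unset (null) for `VENDOR_UNKNOWN`.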

Next, we need to change the update method to also call _gpu->getVRAMUsageInKB():

// or log the whole thing to the property tree
// (skip the call if no GPU backend was allocated)
if (_gpu) {
    SG_LOG(SG_GENERAL, SG_ALERT, "VRAM usage: " << _gpu->getVRAMUsageInKB());
}