Canvas development


Revision as of 13:49, 15 May 2016

The FlightGear forum has a subforum related to: Canvas

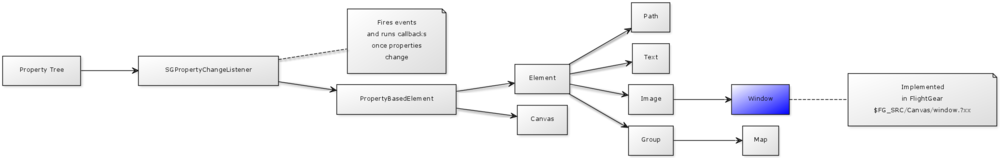

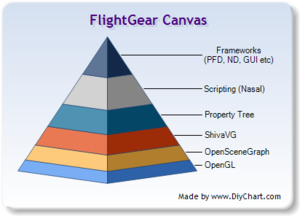

Note: This article is primarily of interest to people familiar with building FlightGear from source who want to extend the Canvas 2D rendering system in SimGear ($SG_SRC/canvas). Readers are assumed to be familiar with C++ and OSG, the Property Tree and fundamental FlightGear APIs like SGPropertyNode (doxygen), Property Objects, SGSubsystem and SGPropertyChangeListener (the latter being wrapped via simgear::PropertyBasedElement). The Canvas code itself makes extensive use of the STL and Boost. The latest Canvas/Doxygen docs can be found here.

There are two main ways to extend FlightGear's Canvas system:

- Whenever all existing Canvas elements (group, map, text, image, path) should benefit from an addition, such as effects/shader support, it makes sense to extend the underlying base class itself, i.e. Canvas::Element.
- The map element (a subclass of group) can be extended to support additional map projections (see simgear/simgear/canvas/elements/map/projection.hxx).

People who just want to add a new layer to an existing dialog or instrument will probably want to refer to Canvas MapStructure instead.
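To make the projection idea a bit more concrete, here is a standalone sketch of what a projection boils down to: mapping latitude/longitude to Canvas screen units around a reference point. The `Projection` and `EquirectangularProjection` classes are hypothetical and do not reproduce the interface declared in projection.hxx; the sketch assumes a y-down screen coordinate system and the usual approximation of 60 nm per degree of latitude.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical projection interface for illustration only; this is NOT the
// class hierarchy declared in simgear/canvas/elements/map/projection.hxx.
struct ScreenPos {
  double x;
  double y;
};

class Projection {
public:
  virtual ~Projection() = default;
  // Convert geographic coordinates (degrees) to screen units.
  virtual ScreenPos worldToScreen(double lat_deg, double lon_deg) const = 0;
};

// Equirectangular projection centred on a reference point:
// 'range_nm' nautical miles map to 'screen_range' screen units.
class EquirectangularProjection : public Projection {
public:
  EquirectangularProjection(double ref_lat, double ref_lon,
                            double range_nm, double screen_range)
    : _ref_lat(ref_lat), _ref_lon(ref_lon), _scale(screen_range / range_nm) {
    assert(range_nm > 0.0);
  }

  ScreenPos worldToScreen(double lat_deg, double lon_deg) const override {
    const double kDegToRad = 3.14159265358979323846 / 180.0;
    const double kNmPerDeg = 60.0;  // 1 arc-minute of latitude ~ 1 nm
    // East-west distances shrink with the cosine of the reference latitude.
    double dx_nm = (lon_deg - _ref_lon) * kNmPerDeg * std::cos(_ref_lat * kDegToRad);
    double dy_nm = (lat_deg - _ref_lat) * kNmPerDeg;
    // Assumed y-down screen coordinates: north maps to negative y.
    return ScreenPos{dx_nm * _scale, -dy_nm * _scale};
  }

private:
  double _ref_lat;
  double _ref_lon;
  double _scale;
};
```

A new projection would only need to supply a different `worldToScreen()`; everything that places symbols can stay unchanged.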

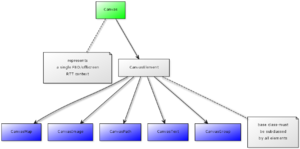

The canvas system is a property-driven FlightGear subsystem that allows creating, rendering and updating dynamic OpenGL textures at runtime by setting properties in the main FlightGear Property Tree.

The Property Tree is the sole interfacing mechanism used by the Canvas system. A so-called listener-based subsystem (via SGPropertyChangeListener) watches the canvas subtree in the main property tree for supported "events" (i.e. properties being set, written to or modified), and then updates each associated texture accordingly, e.g. by adding a requested vector or raster image, drawing a map/item, placing symbols or placing text labels with custom fonts.
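The listener pattern described above can be sketched in plain C++. `MiniTree` and `TextElement` are hypothetical stand-ins for SGPropertyNode/SGPropertyChangeListener and a Canvas element; the sketch only shows that writes to a watched subtree are turned into updates of native state, while writes elsewhere are ignored.

```cpp
#include <cassert>
#include <functional>
#include <map>
#include <string>
#include <utility>
#include <vector>

// Minimal stand-in for a property tree with change listeners (hypothetical).
class MiniTree {
public:
  using Listener = std::function<void(const std::string& path,
                                      const std::string& value)>;

  // Register a listener for every path below 'prefix'.
  void addListener(const std::string& prefix, Listener cb) {
    _listeners.push_back({prefix, std::move(cb)});
  }

  // Writing a property notifies only listeners whose prefix matches the path.
  void setValue(const std::string& path, const std::string& value) {
    _values[path] = value;
    for (const auto& l : _listeners)
      if (path.compare(0, l.first.size(), l.first) == 0)
        l.second(path, value);
  }

  std::string getValue(const std::string& path) const {
    auto it = _values.find(path);
    return it == _values.end() ? std::string() : it->second;
  }

private:
  std::map<std::string, std::string> _values;
  std::vector<std::pair<std::string, Listener>> _listeners;
};

// Toy "element" mirroring its text property into native state, the way a
// Canvas element updates C++/OSG data when its subtree changes.
struct TextElement {
  std::string rendered;
  int updates = 0;
};
```

Scripts only write properties; the per-frame rendering work stays in native code.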

Elements can be nested and added to groups which support showing/hiding and clipping of segments. Vector drawing is handled via ShivaVG (OpenVG).

All property updates result in native C++/OSG data structures being updated (typically using OSG/STL/Boost containers), so the property tree and scripting are solely used to send update events. This ensures that Canvas-based systems are typically fast enough, often delivering frame rates beyond ~40-60 fps.

Animations are currently not directly supported; instead, they can be implemented by using separate canvas groups and hiding/showing them as needed, or simply by changing the size/color/styling attributes of a canvas group using Nasal timers/listeners. Another option for updating a canvas without relying on Nasal timers (e.g. due to garbage collection considerations) is using so-called "Property Rules", which are not yet exposed to Nasal, but which can be used wherever scripting overhead should be minimal. Sooner or later, we're probably going to come up with a scripting space wrapper for encapsulating most animation needs, so that existing Canvas frameworks can use a single back-end, which can be customized and optimized over time, possibly by adding native support for animations and/or by allowing animations to be handled without going through scripting space.
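A minimal sketch of the "separate groups, show one at a time" approach, written as a C++ state machine driven by an `update(dt)` call (the role a Nasal timer or a property rule would play). `Group` and `FlipBook` are hypothetical names; only the visibility toggling mirrors what a Canvas-based animation would do.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for a Canvas group: only visibility matters here.
struct Group {
  bool visible = false;
};

// Flip-book animation: exactly one group is visible at a time, and the
// visible frame advances every 'frame_time' seconds of accumulated dt.
class FlipBook {
public:
  FlipBook(std::vector<Group>& frames, double frame_time)
    : _frames(frames), _frame_time(frame_time) {
    assert(!_frames.empty() && frame_time > 0.0);
    show(0);
  }

  void update(double dt) {
    _elapsed += dt;
    while (_elapsed >= _frame_time) {
      _elapsed -= _frame_time;
      show((_current + 1) % _frames.size());
    }
  }

  std::size_t current() const { return _current; }

private:
  void show(std::size_t index) {
    for (auto& g : _frames) g.visible = false;  // hide every frame ...
    _frames[index].visible = true;              // ... then show one
    _current = index;
  }

  std::vector<Group>& _frames;
  double _frame_time;
  double _elapsed = 0.0;
  std::size_t _current = 0;
};
```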

The Canvas fully supports recursion, by allowing other canvases (and sub-regions of them via texture-mapping) to be referenced and used as raster images, so that multiple canvases can be chained together, but also through the notion of "groups", which are containers for other canvas elements, including child groups or elements referencing other canvases.

This can be particularly useful for projects requiring multi-texturing and other multi-pass texturing stages. This mechanism is also one of the main building blocks used by the MapStructure charting framework to implement caching support via texture maps, without needing any changes on the C++ side to handle symbol instancing.
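The caching idea can be illustrated by the bookkeeping it needs: each symbol variant is drawn once into a cell of a larger cache canvas, and map symbols later just reference that cell. The sketch below assumes a square cache texture divided into a regular grid, and that the referencing image element can be pointed at a sub-rectangle of its source; `SymbolAtlas` and `SourceRect` are hypothetical names, not MapStructure's actual implementation.

```cpp
#include <cassert>

// Source rectangle (in pixels) of one cached symbol inside the cache texture.
struct SourceRect {
  int left, top, right, bottom;
};

// Hypothetical symbol cache laid out as a regular grid of square cells.
class SymbolAtlas {
public:
  SymbolAtlas(int texture_size, int cell_size)
    : _cell(cell_size), _cols(texture_size / cell_size) {
    assert(cell_size > 0 && _cols > 0);
  }

  int capacity() const { return _cols * _cols; }

  // Cell for symbol variant 'index', filled row by row.
  SourceRect cellFor(int index) const {
    assert(index >= 0 && index < capacity());
    int col = index % _cols;
    int row = index / _cols;
    return SourceRect{col * _cell, row * _cell, (col + 1) * _cell, (row + 1) * _cell};
  }

private:
  int _cell;
  int _cols;
};
```

The expensive vector drawing happens once per variant; every further symbol on the map only costs an image element with a different source rectangle.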

The canvas itself is developed with a focus on primarily being an enabling technology. In other words, the canvas is not about implementing individual features like a PFD, ND, EFIS, EICAS or other MFD instruments like a moving map or a GUI library.

Rather, the Canvas system is all about coming up with a flexible and efficient system that allows end users (aircraft developers and other base package contributors) to develop such features themselves in user space (i.e. the base package) via scripting, without having to be proficient C++ programmers, without having to rebuild FlightGear from source, and without having to be familiar with OpenSceneGraph, OpenGL or other technologies that typically involve a steep learning curve (e.g. STL/Boost).

This approach has several major advantages - in particular, it frees core developers from having to develop and maintain end-user features like a wxradar, groundradar or Navigational Display/PFD by empowering content/base package developers to handle the implementation of such features themselves.

Thus, development of content is moved to user space (i.e. the base package). Recently, we've seen a shift in trend here: more and more core developers respond to end-user requests not by implementing those features themselves, but by implementing the building blocks and infrastructure that delegate the implementation of these features to user space.

Besides, core developers are generally overstretched, and there are not enough core developers to handle all core development related tasks:

Unfortunately, most of the active FG developers are currently very overstretched in terms of the areas that they have ownership of, which is affecting how much can actually be done. Fundamentally we need more core devs. [1] — Stuart Buchanan

- ↑ Stuart Buchanan (Thu, 25 Apr 2013 07:28:28 -0700). Atmospheric Light Scattering.

The only way to deal with this is to shift the core development focus from developing complex high-level end-user features (such as an ND, TCAS or WXRADAR) that take years to fully develop, to just providing lower-level APIs (like a navdb API or a 2D drawing API like Canvas) that enable base package developers to develop those high-level features themselves, without being affected by any core development "bottlenecks".

This is the route that seemed to work out fairly well for the local weather system, which was prototyped and implemented by a single base package developer in scripting space, who just asked for certain scripting hooks to be provided at some point.

For example, when Stuart, Torsten or Erik implemented LW-specific core extensions, these were about providing new hooks to be used by Thorsten. They didn't commit to implementing a weather system, they just enabled somebody else to continue his work. So this strategy is as much about delegation, as it is about organizing core development.

Core developers cannot possibly implement all the ideas and feature requests that aircraft developers and end users may have, but they can at least provide a toolbox for base package developers to implement such features. Now, without doubt, implementing a WXRADAR, TCAS, AGRADAR or even a full ND/MFD is incredibly complex and time-consuming, especially when taking into account the plethora of instrument variations in existence today.

Exposing a 2D drawing API or a navdb API to base package developers would have been much simpler and less time-consuming, at the cost of possibly not providing certain instruments/features directly - while still providing the building blocks for skilled base package contributors to implement such instruments eventually within the base package, rather than within the C++ source code where evolution and maintenance of such instruments is inherently limited by the availability of C++ developers.

Given the progress we've seen in Canvas-related contributions boosted by having a 2D API, this is a very worthwhile route for developing MFD-style instruments or other end-user features without being limited by our shortage of core developers.

Furthermore, the amount of specialized code in the main FlightGear code base is significantly reduced and increasingly unified: one major aspect of adopting the Canvas system was unifying the 2D rendering back-end via Canvas, so that more and more of the old/legacy code can be incrementally re-implemented and modernized through corresponding wrappers. This includes scripting-space frameworks for existing features like the HUD system, but also our existing PLIB/PUI-based GUI, the old 2D panels code and the Map dialog.

Many of these features are currently using legacy code that hasn't been maintained in years, causing issues when it comes to making use of certain OSG optimizations, or interoperability with new code.

In addition, widgets and instruments will no longer be hard-coded, but rather "compiled" into hardware-accelerated Canvas data structures during initialization, which will typically be animated using timers or listeners (via scripting or property rules). Because previously hard-coded widgets or instruments are now implemented entirely in scripting space, deploying updates no longer necessarily requires installing new binaries.

This is analogous to how more and more software programs, such as browsers like Firefox/Chrome, use an increasingly scripted approach to implementing functionality, e.g. using JavaScript/XUL (Chrome) to move the implementation of certain features out of native code.

Finally, an increasingly unified 2D rendering back-end also makes porting/re-targeting FlightGear increasingly feasible, whether to mobile gaming platforms, mobile phones (e.g. Android) or embedded hardware like a Raspberry Pi. Without a unified 2D rendering back-end, all other subsystems doing 2D rendering (HUD, cockpit, instruments, GUI, etc.) would need to be ported manually:

Right, not only is OpenVG natively supported in hardware, but there's even a vector font library available named "vgfont". This OSG discussion may also be of interest for anybody pursuing this venture: http://forum.openscenegraph.org/viewtop ... &view=next

A unified 2D rendering back-end using the Canvas system ensures instead that all Canvas-based features will remain functional, as long as the Canvas itself is able to run on the corresponding platform/hardware, because there's really just a single subsystem that handles all 2D rendering via different user-space wrappers, and that would need porting (e.g. to support OpenGL ES).

Also, GUI dialogs and instruments can make use of other Canvas-based features, e.g. for showing a GUI dialog on an instrument, or instruments in dialogs.

The property tree centric implementation approach of the Canvas also means that all Canvas-based frameworks could technically work in a standalone FGCanvas/FGPanel mode eventually, but also in multi-instance (master/slave) setups such as those common for FSWeekend/LinuxTag.

This is yet another novelty, because most existing hard-coded instruments cannot easily be modified to work in such multi-instance setups. The Canvas system, however, being based on the property tree, could retrieve property updates from external instances, e.g. via telnet/UDP or HLA, without requiring major re-architecting.
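As a rough illustration of how property updates from another instance could be applied, the sketch below mirrors `set <path> <value>` lines into a local property copy. The line format is loosely modelled on a telnet-style property interface and is an assumption here; `RemotePropertyMirror` is a hypothetical name, and a real setup would write into the property tree so that Canvas listeners fire as usual.

```cpp
#include <cassert>
#include <map>
#include <sstream>
#include <string>

// Applies "set <path> <value>" lines (assumed format) to a local copy of
// the remote instance's properties.
class RemotePropertyMirror {
public:
  // Returns true if the line was a well-formed "set" command.
  bool apply(const std::string& line) {
    std::istringstream in(line);
    std::string cmd, path, value;
    if (!(in >> cmd >> path) || cmd != "set")
      return false;
    std::getline(in >> std::ws, value);  // the value may contain spaces
    _props[path] = value;
    return true;
  }

  std::string get(const std::string& path) const {
    auto it = _props.find(path);
    return it == _props.end() ? std::string() : it->second;
  }

private:
  std::map<std::string, std::string> _props;
};
```

Because the display side only ever sees property writes, it does not matter whether they come from the local FDM or from a remote master instance.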

This also means that Canvas-based GUI dialogs could similarly be shown by a separate fgfs instance - for example, in order to provide an Instructor Station or to display a MapStructure-based moving map dialog/window.

Frameworks

Obviously, the Canvas APIs themselves are not intended for specific end-user features like a PFD, ND or EICAS. Therefore, you will typically see wrappers implemented in scripting space for certain needs, e.g. Canvas frameworks intended to help with the development of certain types of instruments. Frameworks will usually use the Canvas scripting space API directly, while providing a more concrete, use-case specific API on top.

- Canvas EFIS Framework (2020/02: jsb)

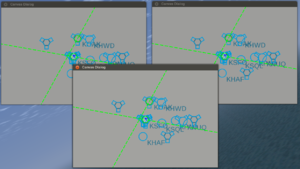

- Canvas MapStructure (2013/2014: Philosopher & Hooray)

- NavDisplay (2013/2014: Gijs, Hyde)

- Canvas GUI (2013-2015: TheTom)

- Canvas MCDU Framework (2012: TheTom)

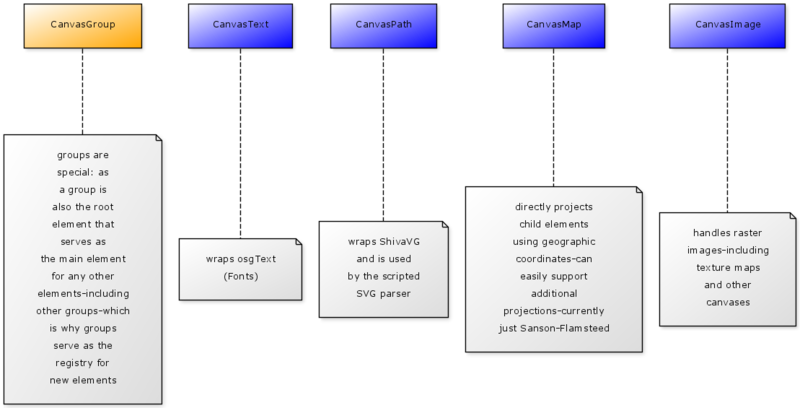

Elements

All new canvas elements need to implement the Canvas::Element interface (new elements can also sub-class existing elements, e.g. see the implementation of the Map and Window ($FG_SRC/Canvas) elements). The canvas system currently supports the following primitives (see $SG_SRC/canvas/elements):

- CanvasGroup - main element: for grouping (a group of arbitrary canvas primitives, including other groups)

- CanvasText - for rendering text (mapped to osgText)

- CanvasPath - for rendering vector graphics (mapped to OpenVG, currently also used to render SVGs into groups)

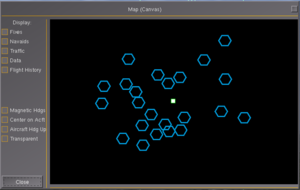

- CanvasMap - for rendering maps (automatic projection of geographic coordinates to screen coordinates, subclass of group)

- CanvasImage - for rendering raster images (mapped to osg::Image)

- CanvasWindow - this is part of $FG_SRC/Canvas/Window.?xx, it's a subclass of CanvasImage, used to implement windows (as of 05/2014 also to be found in simgear)

Most end-user features can be decomposed into lower-level components that need to be available in order to implement the corresponding feature in user-space.

Thus, the canvas system is based on a handful of rendering modes, each supporting different primitives. Each of those modes is implemented as a so-called "Canvas Element", which is a property-tree controlled subtree of a canvas texture using a certain name, and which supports specific events and notifications.

According to the development philosophy outlined above, you obviously won't see new canvas elements that are highly use-case specific, such as a "night vision" or FLIR element. Instead, what is more likely to be supported are the lower-level building blocks that enable end users to create such features, e.g. by adding support for running custom effects/shaders and by rendering scenery views to canvas textures.

Adding a new Element

You will want to add a new Canvas::Element whenever you want to add support for features which cannot be currently expressed easily (or efficiently) using existing means (i.e. via existing elements and scripting space frameworks). For example, this may involve projects requiring camera support, rendering scenery views to a texture, rendering 3D models to a texture or doing a complete moving map with terrain elevations/height maps (even though the latter could be implemented by sub-classing Canvas::Image to some degree).

Another good example for implementing new elements is rendering file formats like PDF, 3D models or ESRI shapefiles.

To add a new element, these are the main steps:

- Navigate to $SG_SRC/canvas/elements

- Create a new set of files: myElement.cxx and myElement.hxx

- Add them to $SG_SRC/canvas/elements/CMakeLists.txt (as per Developing using CMake)

```diff
diff --git a/simgear/canvas/elements/CMakeLists.txt b/simgear/canvas/elements/CMakeLists.txt
index bd21c13..9fdd48d 100644
--- a/simgear/canvas/elements/CMakeLists.txt
+++ b/simgear/canvas/elements/CMakeLists.txt
@@ -1,6 +1,7 @@
 include (SimGearComponent)
 
 set(HEADERS
+  myElement.hxx
   CanvasElement.hxx
   CanvasGroup.hxx
   CanvasImage.hxx
@@ -14,6 +15,7 @@ set(DETAIL_HEADERS
 )
 
 set(SOURCES
+  myElement.cxx
   CanvasElement.cxx
   CanvasGroup.cxx
   CanvasImage.cxx
@@ -23,4 +25,4 @@ set(SOURCES
 )
```

Next, open the header file and add a new Element class:

```cpp
#ifndef CANVAS_MYELEMENT_HXX_
#define CANVAS_MYELEMENT_HXX_

#include <simgear/props/propsfwd.hxx>
#include "CanvasElement.hxx"

namespace simgear
{
namespace canvas
{

  class myElement : public Element
  {
    public:
      static const std::string TYPE_NAME;
      static void staticInit();

      myElement( const CanvasWeakPtr& canvas,
                 const SGPropertyNode_ptr& node,
                 const Style& parent_style = Style(),
                 Element* parent = 0 );
      virtual ~myElement();

    protected:
      virtual void update(double dt);

    private:
      myElement(const myElement&) /* = delete */;
      myElement& operator=(const myElement&) /* = delete */;
  };

} // namespace canvas
} // namespace simgear

#endif /* CANVAS_MYELEMENT_HXX_ */
```

Next, add the source file implementing the new myElement class:

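```cpp
#include "myElement.hxx"

#include <simgear/debug/logstream.hxx>  // SG_LOG
#include <simgear/props/props.hxx>
#include <simgear/misc/sg_path.hxx>

namespace simgear
{
namespace canvas
{
  // Node name for this element type; must match the key used in api.nas
  const std::string myElement::TYPE_NAME = "myelement";

  //----------------------------------------------------------------------------
  void myElement::staticInit()
  {
    // Register styles/attributes only once per element type
    if( isInit<myElement>() )
      return;
  }

  //----------------------------------------------------------------------------
  myElement::myElement( const CanvasWeakPtr& canvas,
                        const SGPropertyNode_ptr& node,
                        const Style& parent_style,
                        Element* parent ):
    Element(canvas, node, parent_style, parent)
  {
    SG_LOG(SG_GENERAL, SG_ALERT, "New Canvas::myElement element added!");
  }

  //----------------------------------------------------------------------------
  myElement::~myElement()
  {
    SG_LOG(SG_GENERAL, SG_ALERT, "Canvas::myElement element destroyed!");
  }

  //----------------------------------------------------------------------------
  void myElement::update(double dt)
  {
    // Let the base class process pending transform/style changes first,
    // then add element-specific per-frame work here.
    Element::update(dt);
  }

} // namespace canvas
} // namespace simgear
```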

Next, edit CanvasGroup.cxx to register your new element: each canvas has a top-level root group, so that is how elements show up. Navigate to Group::staticInit() and add your new element type there (don't forget to include your new header):

```diff
diff --git a/simgear/canvas/elements/CanvasGroup.cxx b/simgear/canvas/elements/CanvasGroup.cxx
index 51523f4..24e19d3 100644
--- a/simgear/canvas/elements/CanvasGroup.cxx
+++ b/simgear/canvas/elements/CanvasGroup.cxx
@@ -21,6 +21,7 @@
 #include "CanvasMap.hxx"
 #include "CanvasPath.hxx"
 #include "CanvasText.hxx"
+#include "myElement.hxx"
 
 #include <simgear/canvas/CanvasEventVisitor.hxx>
 #include <simgear/canvas/MouseEvent.hxx>
@@ -60,6 +61,7 @@ namespace canvas
       return;
 
     add<Group>(_child_factories);
+    add<myElement>(_child_factories);
     add<Image>(_child_factories);
     add<Map >(_child_factories);
     add<Path >(_child_factories);
```
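Conceptually, `add<T>(_child_factories)` registers a factory under the element's type name, and a new child node below a group is turned into an element by looking up its node name. The standalone sketch below reproduces only that lookup; the names mimic the SimGear code, but the real factories also receive the canvas, property node, style and parent.

```cpp
#include <cassert>
#include <functional>
#include <map>
#include <memory>
#include <string>

// Hypothetical, stripped-down version of the "type name -> factory" idea
// behind Group::staticInit() and add<T>(_child_factories).
struct Element {
  virtual ~Element() = default;
  virtual std::string type() const = 0;
};

struct MyElement : Element {
  static constexpr const char* TYPE_NAME = "myelement";
  std::string type() const override { return TYPE_NAME; }
};

using Factory = std::function<std::unique_ptr<Element>()>;
using FactoryMap = std::map<std::string, Factory>;

// Register T under its TYPE_NAME.
template <typename T>
void add(FactoryMap& factories) {
  factories[std::string(T::TYPE_NAME)] = [] { return std::unique_ptr<Element>(new T()); };
}

// A new child node below a group is created by looking up its node name;
// unknown names yield no element.
std::unique_ptr<Element> createChild(const FactoryMap& factories,
                                     const std::string& name) {
  auto it = factories.find(name);
  if (it == factories.end())
    return nullptr;
  return it->second();
}
```

This is also why the TYPE_NAME string, the Nasal factory key and the name passed to createChild() have to agree.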

Next, navigate to $FG_ROOT/Nasal/canvas/api.nas and extend the module to add support for your new element:

```diff
diff --git a/Nasal/canvas/api.nas b/Nasal/canvas/api.nas
index 85f336a..81c0fa0 100644
--- a/Nasal/canvas/api.nas
+++ b/Nasal/canvas/api.nas
@@ -314,6 +314,18 @@ var Element = {
   }
 };
 
+# myElement
+# ==============================================================================
+# Class for a myElement element on a canvas
+#
+var myElement = {
+# public:
+  new: func(ghost)
+  {
+    return { parents: [myElement, Element.new(ghost)] };
+  },
+};
+
 # Group
 # ==============================================================================
 # Class for a group element on a canvas
@@ -958,7 +970,8 @@ Group._element_factories = {
   "map": Map.new,
   "text": Text.new,
   "path": Path.new,
-  "image": Image.new
+  "image": Image.new,
+  "myelement": myElement.new,
 };
```

Next, rebuild SimGear and FlightGear, open the Nasal Console and run a simple demo to test your new element:

```nasal
var CanvasApplication = {
  ##
  # constructor
  new: func(x=300,y=200) {
    var m = { parents: [CanvasApplication] };
    m.dlg = canvas.Window.new([x,y],"dialog");
    m.canvas = m.dlg.createCanvas().setColorBackground(1,1,1,1);
    m.root = m.canvas.createGroup();

    ##
    # creates a new element
    m.myElement = m.root.createChild("myelement");
    m.init();
    return m;
  }, # new

  init: func() {
    var filename = "Textures/Splash1.png";
    # create an image child for the texture
    var child = me.root.createChild("image")
                       .setFile( filename )
                       .setTranslation(25,25)
                       .setSize(250,250);
  }, # init
}; # end of CanvasApplication

var splash = CanvasApplication.new(x:300, y:300);
print("Script parsed");
```

You may also want to check out $FG_SRC/Scripting/NasalCanvas.?xx to learn more about exposing custom elements to scripting space via Nasal/CppBind. Next, you'll want to implement the update() method and the various notification methods supported by CanvasElement:

- childAdded

- childRemoved

- childChanged

- valueChanged

For event handling purposes, you'll also want to check out the following virtual CanvasElement methods:

- accept()

- ascend()

- traverse()

- handleEvent()

- hitBound()
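To give an idea of what the event-handling methods are for, here is a much-simplified hit-testing sketch: descend the element tree, test each element's bounds, and return the deepest element under the cursor, checking later (assumed top-most) children first. `Node`, `Box` and `pick()` are hypothetical; the sketch ignores per-element transforms and event propagation phases, which the real EventVisitor-based code has to handle.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <vector>

// Axis-aligned bounds; all nodes share one coordinate space in this sketch.
struct Box {
  double x0, y0, x1, y1;
};

struct Node {
  std::string name;
  Box bounds;
  std::vector<std::unique_ptr<Node>> children;

  bool hitBound(double x, double y) const {
    return x >= bounds.x0 && x <= bounds.x1 && y >= bounds.y0 && y <= bounds.y1;
  }

  // Returns the deepest node containing (x, y), or nullptr if none does.
  const Node* pick(double x, double y) const {
    if (!hitBound(x, y))
      return nullptr;
    // Later children are assumed to be drawn on top, so test them first.
    for (auto it = children.rbegin(); it != children.rend(); ++it)
      if (const Node* hit = (*it)->pick(x, y))
        return hit;
    return this;
  }
};
```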

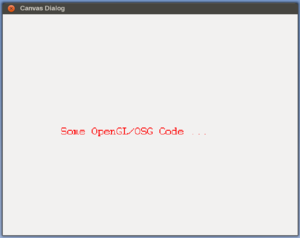

Integrating OSG/OpenGL Code

Once you have the basic boilerplate code in place, you can directly invoke pretty much arbitrary OpenGL/OSG code. For instance, the following snippet will render an osgText string to the Canvas element (added simply to the constructor here for clarity):

```cpp
// Required headers (at the top of myElement.cxx):
// #include <osg/Geode>
// #include <osg/Projection>
// #include <osgText/Text>

osg::Geode* geode = new osg::Geode();
osg::Projection* ProjectionMatrix = new osg::Projection;
ProjectionMatrix->setMatrix(osg::Matrix::ortho2D(0,1024,0,768));

std::string timesFont("fonts/arial.ttf");

// turn lighting off for the text and disable depth test to ensure it's always on top.
osg::StateSet* stateset = geode->getOrCreateStateSet();
stateset->setMode(GL_LIGHTING, osg::StateAttribute::OFF);
stateset->setMode(GL_DEPTH_TEST, osg::StateAttribute::OFF);

osgText::Text* text = new osgText::Text;
geode->addDrawable(text);
text->setFont(timesFont);

osg::Vec3 position(200.0f,350.0f,0.0f);
text->setPosition(position);
text->setText("Some OpenGL/OSG Code ...");
text->setColor(osg::Vec4(1.0f,0.0f,0.0f,1.0f));

// add the geode to the projection matrix
ProjectionMatrix->addChild(geode);

// add the projection matrix to the transform used by the Canvas element
_transform->addChild(ProjectionMatrix);
```

For testing purposes, you can use the following Nasal snippet (e.g. executed via the Nasal Console):

```nasal
var element_name = 'myelement';
var window = canvas.Window.new([640,480],"dialog");
var myCanvas = window.createCanvas().set("background", canvas.style.getColor("bg_color"));
var root = myCanvas.createGroup();
var osgemap = root.createChild(element_name);
```

Discussed Enhancements

Note: The features described in the following section aren't currently supported or being worked on, but they've seen lots of community discussion over the years, so this serves as a rough overview. However, this doesn't necessarily mean that work on these features is in any way prioritized or even endorsed by fellow contributors; often enough, such discussions may become outdated pretty quickly due to recent developments. So if in doubt, please do get in touch via the Canvas sub-forum before starting to work on anything related, to help coordinate things a little. Thank you!

AI/MP textures

It appears as though it is not possible for Canvas to locate a texture that is in a multiplayer aircraft model; this has also been seen in the efforts to get Canvas displays working on the B777.[1] In SimGear's Canvas::update() it appears to be using the factories to find the element, and this means that it can't find the named OSG node, which makes me think that maybe it is only looking in the ownship (which is a null model).[2]

Property I/O observations

The workload is certainly a function of the number of screens (canvas textures/FBOs), unless you can assume duplicate screens, in which case you can cut it by re-using a canvas or using a data provider. Creating the property structure by simply copying it turned out to be the largest drag in setting up a canvas display.[3]

If you have a page that displays 90 data values in text, you actually have to fetch all 90 of them. With 9 displays open, that's 810 properties to be fetched and then to be written so that Canvas can display them. If you try that per frame, you'll quickly see why it doesn't work.[4]

Of course there needs to be an information merging and representation stage, which the Shuttle doesn't have; but if you put this into the display code itself... see above. Fetching dozens of properties when all you need is four pre-computed ones is a bad idea.[5]

In an extreme case, the Shuttle needs to read (and Canvas later write) some 800 properties for one screen processing cycle. Some of those trigger unit conversions, mappings to strings, and so on; a small subset goes into translating, rotating and scaling line elements. Our experience is that property reading and writing is usually the most expensive part: with Advanced Weather (AW), Thorsten did not manage to make even a dent in the framerate or latency (not for lack of trying), even with complex cloud setup calls squeezed into a single frame, but property access does it as soon as you reach ~1000 per frame.[6]

500+ property updates (polling) would surely show up - especially given that a few years ago, that was pretty much the load caused by the whole simulator per frame. So it will be interesting to see if/how the complexity of these instruments is adding up (or not).
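The numbers quoted above are easy to reproduce: 90 values on each of 9 displays means 810 property reads per update cycle (before the matching writes), or 48,600 reads per second at 60 fps. The sketch below counts reads for two strategies, per-display fetching versus a shared data provider that fetches each value once and lets all displays consume the snapshot. `CountingTree` and both functions are hypothetical, and the shared variant only helps when the displays show the same data.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <string>
#include <vector>

// A plain map standing in for the property tree, counting every read.
struct CountingTree {
  std::map<std::string, double> values;
  mutable std::size_t reads = 0;

  double get(const std::string& path) const {
    ++reads;
    auto it = values.find(path);
    return it == values.end() ? 0.0 : it->second;
  }
};

// Strategy 1: every display fetches every value it shows, every cycle.
std::size_t perDisplayFetch(const CountingTree& tree,
                            const std::vector<std::string>& paths,
                            std::size_t displays) {
  std::size_t before = tree.reads;
  for (std::size_t d = 0; d < displays; ++d)
    for (const auto& p : paths) (void)tree.get(p);
  return tree.reads - before;
}

// Strategy 2: a shared provider fetches each value once per cycle and all
// displays read from that snapshot instead of the tree.
std::size_t sharedProviderFetch(const CountingTree& tree,
                                const std::vector<std::string>& paths,
                                std::size_t displays) {
  std::size_t before = tree.reads;
  std::vector<double> snapshot;
  snapshot.reserve(paths.size());
  for (const auto& p : paths) snapshot.push_back(tree.get(p));
  double checksum = 0.0;
  for (std::size_t d = 0; d < displays; ++d)
    for (double v : snapshot) checksum += v;  // displays consume the snapshot
  (void)checksum;
  return tree.reads - before;
}
```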

But all the sprintf/getprop-level overhead that accumulates through update() loops invoked via timers would be straightforward to reduce significantly (or even eliminate) by extending CanvasElement/CanvasText to support labels in the form of sprintf format strings populated from a property node (sub-tree). There would then be zero Nasal overhead for those labels/nodes that can be expressed using static format strings and a fixed set of dynamic properties.

All the polling could be prevented then, and updating would be moved to C++ space.

We ended up using a similar approach when we noticed that drawing taxiway layers created remarkable property overhead; we troubleshot the whole thing, at which point TheTom added helpers to further reduce system/Nasal load.[7]

Common coding constructs (such as the sprintf/getprop idiom) are put into a helper function, which can later be re-implemented/optimized without having to touch tons of files/functions.[8]

In the case of property-driven labels that are formatted using sprintf(), it would probably be easier to just introduce a helper function and delegate that to C++ code, as per the comments at: viewtopic.php?f=71&t=28160[9] It would be better to extend the Canvas system to directly support a new "property mode" using sprintf-style format strings that are assembled/updated in C++ space, i.e. without any Nasal overhead, which would benefit other efforts, too, including the PFD/ND efforts, re-implementing HUD/2D panels on top of Canvas, and even pui2canvas.[10]

It is all about updating properties and updating a label/text element accordingly. We could dramatically reduce the degree of Nasal overhead by allowing text to be specified using printf-style format strings that get their values from a sub-branch in the element's tree (one node for each %s, %d); that way, the whole thing could be processed in C++ space, and we would not need to use any Nasal for updating/building strings. If this could be supported, we could also provide two modes, polling and on-update, to ensure that there is no unnecessary C++ overhead. Complex dialogs with lots of dynamic labels could then be re-implemented much more easily, without having to register 5-10 callbacks per metric (or timers/listeners), even though a timer-based update mode may also be useful for the C++ change. Note that this would also be useful for the PUI parser itself, because that already supports values that may be provided by a property using printf-style formatting; there, it is limited to a single format string, whereas with Canvas we could support an arbitrary number of sub-nodes that are updated as needed. Ultimately, that would also help with HUD/2D panels, because taking values from properties and updating them using sprintf-style code is extremely common there, too, and we could avoid tons of Nasal overhead like that.
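A minimal sketch of the proposed "property mode" for text: a printf-style format string plus one value node per conversion, re-formatted in C++ either every frame (polling) or only after a value changed (on-update). `FormattedLabel` is hypothetical; to stay short it stores all values as doubles and only understands `%d`, `%i` and `%f` conversions (with flags, width and precision), whereas a real implementation would have to match each conversion to a node type, including `%s`.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdio>
#include <string>
#include <utility>
#include <vector>

class FormattedLabel {
public:
  enum class Mode { Polling, OnUpdate };

  FormattedLabel(std::string format, std::size_t nodes, Mode mode)
    : _format(std::move(format)), _values(nodes, 0.0), _mode(mode) {}

  // Would be called by a property listener when value node 'index' changes.
  void setValue(std::size_t index, double v) {
    assert(index < _values.size());
    if (_values[index] != v) {
      _values[index] = v;
      _dirty = true;
    }
  }

  // Called once per frame; returns true if the text was re-formatted.
  bool update() {
    if (_mode == Mode::OnUpdate && !_dirty)
      return false;  // nothing changed: skip the formatting work
    _text = render();
    _dirty = false;
    return true;
  }

  const std::string& text() const { return _text; }

private:
  std::string render() const {
    std::string out;
    std::size_t next = 0;  // value node used by the next conversion
    for (std::size_t i = 0; i < _format.size(); ++i) {
      if (_format[i] != '%') { out += _format[i]; continue; }
      if (i + 1 < _format.size() && _format[i + 1] == '%') { out += '%'; ++i; continue; }
      // Skip flags, width and precision, e.g. "%05d" or "%.1f".
      std::size_t end = _format.find_first_not_of("-+ #0123456789.", i + 1);
      if (end == std::string::npos ||
          std::string("dif").find(_format[end]) == std::string::npos ||
          next >= _values.size()) {
        out += _format.substr(i);  // unsupported/unmatched: copy verbatim
        break;
      }
      const std::string spec = _format.substr(i, end - i + 1);
      char buf[64];
      if (_format[end] == 'f')
        std::snprintf(buf, sizeof buf, spec.c_str(), _values[next]);
      else
        std::snprintf(buf, sizeof buf, spec.c_str(), static_cast<int>(_values[next]));
      out += buf;
      ++next;
      i = end;
    }
    return out;
  }

  std::string _format;
  std::vector<double> _values;
  Mode _mode;
  bool _dirty = true;
  std::string _text;
};
```

In on-update mode an unchanged label costs one flag check per frame, which is the point of offering both modes.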

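References

1. Hyde (Mar 15th, 2016). Re: Dual control for Boeing 777. https://forum.flightgear.org/viewtopic.php?p=279540#p279540
2. Richard Harrison (May 15th, 2016). [Flightgear-devel] Canvas in dynamically loaded scene models. https://sourceforge.net/p/flightgear/mailman/message/35089070/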

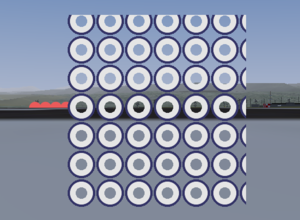

Instancing

way to easily instantiate a symbol/geometry/group multiple times, in a cached fashion, without eating up unnecessary memory for multiple independently-animated instances of a symbol

I'm currently not sure if we can share the canvas elements across displays, so I've made copies of everything for each display.

You are right, that would help reduce the OSG-level workload, i.e. scene graph-level instancing. But for the time being, Canvas does not support anything like that.

It's also lead me onto wonder if instancing could be generally useful (as we have a quite a lot of items in the scenery that are the same model); but to be honest I've not really got enough of a clue how the culling would work. — Richard (Dec 19th, 2015). Re: optimizing random trees: strong effect on performance ?.

this is one of the most common feature requests related to Canvas — Hooray (Dec 19th, 2015). Re: Canvas::Element Instancing at the OSG level.

The main reason for doing that is to ensure that you can easily adopt more native primitives if/when they become available - for instance, the lack of a dedicated animation-handling element at the Canvas::Element level is one of the most obvious issues, because it links rendering related OSG code to Nasal space callbacks that are running within the FlightGear main loop.

And one of the most logical optimizations would be to look up suitable OSG-level data structures and expose those as Canvas::Elements that we can then reuse to implement such animations/updates without necessarily going through Nasal space - there are quite a few osg classes that could help with that, some of which we are currently re-implementing via Nasal to animate PFD/ND logic. Looking specifically at some of the most complex Canvas-based avionics we have in FlightGear, things like Avidyne Entegra R9 will be difficult to update easily once such a dedicated element becomes available - but people can easily make that possible by using a single helper function/class that handles the update/animation semantics, and which isolates the remaining code from any internals - so that things like an animated bar can be easily delegated to OSG/C++ code as soon as the corresponding OSG classes are mapped to a dedicated Canvas element: Canvas Sandbox#CanvasAnimation

- http://learnopengl.com/#!Advanced-OpenGL/Instancing

- http://www.opengl-tutorial.org/intermediate-tutorials/billboards-particles/particles-instancing/

- http://forum.openscenegraph.org/viewtopic.php?t=5592

- http://android-developers.blogspot.com/2015/05/game-performance-geometry-instancing.html

- http://www.informit.com/articles/article.aspx?p=2033340&seqNum=5

- http://3dcgtutorials.blogspot.com/2013/08/instancing-with-openscenegraph.html

- http://3dcgtutorials.blogspot.com/2013/09/instancing-with-openscenegraph-part-ii.html

- http://http.developer.nvidia.com/GPUGems2/gpugems2_chapter03.html

- http://trac.openscenegraph.org/projects/osg//browser/OpenSceneGraph/trunk/examples/osgdrawinstanced/osgdrawinstanced.cpp

Canvas-based Splash Screens

Supporting Cameras

Note People interested in working on this may want to check out the following pointers:

|

Given how FlightGear has evolved over time, not just regarding effects/shaders, but also complementary efforts like deferred rendering (via Rembrandt), we'll probably see cameras (and maybe individual rendering stages) exposed as Canvases, so that there's a well-defined interface for hooking up custom effects/shaders to each stage in the pipeline. Zan's newcameras work demonstrates just how much flexibility can be accomplished this way: schemes like Rembrandt could then be maintained entirely in XML/effects and shader (fgdata) space. Even the fgviewer code base could be significantly unified by working in terms of canvases that deal with camera views, which would also simplify serialization for HLA.

Background:

- Rembrandt Status

- Talk:Project_Rembrandt

- CompositeViewer Support

- Howto:Use a Camera View in an Instrument

- $FG_ROOT/Docs/README.multiscreen

- http://api-docs.freeflightsim.org/flightgear/classflightgear_1_1CameraGroup.html

- https://gitorious.org/fg/zans-flightgear?p=fg:zans-flightgear.git;a=commit;h=09832d8076a985a329500c027c1ed4f9b72bb1a9

- http://trac.openscenegraph.org/projects/osg/wiki/Support/ProgrammingGuide/ViewerVSCompositeViewer

Also see The FlightGear Rendering Pipeline

| Taxi Camera on navigation display (as seen on FSX and X-Plane) |

| I have to create two master cameras to controls the two different views in two different scene rendering dynamically.

but flightgear only using viewer class . This is creating one master camera no.of slave cameras. but i need CompositeViewer class. how to use CompositeViewer class in flightgear and how to render through CompositeViewer class. — Divi (Tue Dec 23). How to create 2 master camera and 2 views in flightgear 3.0.

|

| any idea how loan to wait for this is add in canvas (with custom render options)? |

| I think we need soon add this to canvas for camera. |

| Anyway, I'm talking about rendering (terrain) camera view to texture using od_gauge. I know you can get terrain camera view and place it on the screen. Alright, it doesn't even have to be terrain, just normal camera view. It's rendered to screen every frame. The same way, can't we use the od_gauge instrument to render the view to texture? I just need some good info/doc on how we can do it.

— Merlion Aerosuperb (2012-03-21). [Flightgear-devel] Rendering Terrain Camera View to Texture.

|

| Back when the whole Canvas idea was originally discussed, none of the people involved in that discussion stepped up to actually prototype, let alone implement, the system - so it took a few years until the idea took shape, and the developer who prototyped and designed the system went quite a bit further than originally anticipated - but I think it's safe to say that not even Tom foresaw the increasing focus on GUI and MFD use-cases, or the increasing trend to use it for mapping/charting purposes.

So the original focus on 2D rendering is/was very valid, and the system is sufficiently flexible to allow it to be extended using custom elements for rendering camera/scenery views at some point. All the community support and momentum certainly is there, and I'm sure that TheTom will gladly review any contributions related to this. — Hooray (Sun Jan 04). Re: How to create 2 master camera and 2 views in flightgear.

|

| hi i need to create 2 window with different view. |

| To support this kind of thing via Canvas, we'd need to adapt the existing view manager code and render a slave camera to a Canvas group - i.e. by turning the whole thing into a CanvasElement sooner or later. That would allow cameras to be specified according to the existing syntax/properties.

|

| I think, but I'm not really sure, that FlightGear does not support two different views even if you have two windows.

|

| while we've had a number of discussions about possibly supporting camera views as Canvas elements, this isn't currently supported. At some point, this will probably be added, because it would simplify quite a bit of existing code (especially the view manager, and the way camera groups are set up) - however, the corresponding C++ code predates Canvas by many years, so it would involve a bit of work.

|

| We're waiting for the Canvas Properties 2D drawing API and Camera View so we can create the PFD.

|

| I'm looking to replicate a camera with a fixed viewpoint from the aircraft. For example looking directly down.

Is there a way I can use some scripting method to call a new window displayed in the bottom right hand side of the screen showing a fixed camera view, without having to edit the preferences for my machine? I'd like it to be easily distributable. [1] — Avionyx

|

| I was wondering if it were possible to restrict the camera output to only one half of the running FG window? I'm hoping to do this so that I may have the map and route manager GUIs active in the other half, so that they aren't obscuring the camera view (and also have the entire HUD visible). So basically, half the window straight down the center - left half is just black, right half is the camera.

Although this would also be solved if there were an external FG dynamic navigational map program, that also displayed waypoints... (I don't think there is one, right?). Additionally, I would love to hear that this question can be answered with Nasal, as I really can't afford to edit the source code and recompile (it's for a project, and I have no admin rights on the laboratory machines).[2]— seabutler

|

| I'm trying to debug reflection shader I'm working on. I have a camera attached to a scene graph, which pre-renders (osg::Camera::PRE_RENDER) scene into offscreen surface (osg::Camera::FRAME_BUFFER_OBJECT); For a debugging purposes I have to see the result of that render pass.

I'm not very good yet in FG internal structure, so I'd like to ask - can this camera be somehow attached to FG camera views (v), or embedded as separate window ?[3] — Vladimir Karmisin

|

| I want to give access to every stage of the rendering to the effect system. The geometry pass outputs to render target, but the fog, the lights, the bloom need to have access to the textures of the buffer, and there is a separate one for each camera associated to windows or sub windows. [4] — Frederic Bouvier

|

| It would be nice if the Effects framework had a way to load arbitrary textures and make them available to effects. I don't know if there is a better way to create your texture offline than write C++ code in simgear. OSG will read a TIFF file with 32bits per component as a floating point texture... assuming you can create such a thing.[5] — Tim Moore

|

| modify the Renderer class to separate from the scenegraph, terrain and models on one hand, the skydome and stars on the other, and finally the clouds.

These three elements are passed to the CameraGroup class in order to be treated separately in the new rendering engine (and put together in the current one).[6] — Frederic Bouvier

|

| I want to point out my work on my "newcameras" branch: https://gitorious.org/fg/zans-flightgear?p=fg:zans-flightgear.git;a=shortlog;h=refs/heads/newcameras which allows user to define the rendering pipeline in preferences.xml. It does not (yet?) have everything Rembrandt's pipeline needs, but most likely is easily enhanced to support those things.

Basically this version adds support for multiple camera passes, texture targets, texture formats, passing textures from one pass to another etc, while preserving the standard rendering line if user wants that. I wish this work could be extended (or maybe even I can extend it ;) to handle the Rembrandt camera system. This will not solve all problems in the merge, but some of them.[7]— Lauri Peltonen

|

| I was not aware of your work. But given what you write here, this looks pretty promising. Fred mentioned your name in an offline mail. I would highly appreciate that we do not lock out low end graphics boards by not having any fallback. May you both should combine forces?

From what I read, I think both are heading in the same global direction and both implementations have some benefits over the other?[8] — Mathias Fröhlich

|

| I would like to extend the format to avoid duplicating the stages when you have more than one viewport. What I see is to specify a pipeline as a template, with conditions like in effects, and have the current camera layout refer the pipeline that would be duplicated, resized and positioned for each declared viewport[9] — Frederic Bouvier

|
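Lauri's description (multiple camera passes, texture targets, textures passed from one pass to another) implies a simple consistency rule: a pass may only read texture targets that an earlier pass has written. A minimal sketch of that check follows; the `Pass` record and `firstUnresolvedPass()` are hypothetical and do not reflect the actual newcameras/preferences.xml format.

```cpp
#include <cassert>
#include <set>
#include <string>
#include <vector>

// Hypothetical description of one rendering pass (not the newcameras schema).
struct Pass {
    std::string name;
    std::vector<std::string> inputs;   // textures this pass reads
    std::vector<std::string> outputs;  // texture targets this pass writes
};

// Returns the name of the first pass that reads a texture no earlier pass
// has written, or an empty string if the pass order is valid.
std::string firstUnresolvedPass(const std::vector<Pass>& pipeline) {
    std::set<std::string> available;
    for (const Pass& pass : pipeline) {
        assert(!pass.name.empty());
        for (const std::string& input : pass.inputs)
            if (available.count(input) == 0)
                return pass.name;
        available.insert(pass.outputs.begin(), pass.outputs.end());
    }
    return "";
}
```

A loader could run such a check once when parsing a pipeline definition, before setting up any cameras.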

| Mapping cameras to different windows, which can be opened on arbitrary screens, will absolutely still be supported. I know that multi-GPU setups are important for professional users and our demos.[10] — Tim Moore

|

| I believe that we need to distinguish between different render to texture cameras. Camera nodes must be accessible from within flightgear. That ones that will end in mfd displays or hud or whatever that is pinned into models. And one that are real application windows like what you describe - additional fly by view, and so on. And I believe that we should keep that separate and not intermix the code required for application level stuff with building of 3d models that do not need anything application level code to animate the models ... I think of some kind of separation that will also be good if we would do HLA between a viewer and an application computing physical models or controlling an additional view hooking into a federate ...[11] — Mathias Fröhlich

|

| I've done some work with setting up a model of a pan/tilt camera system that can point at a specific wgs84 point or along a specific NED vector (i.e. nadir, or exactly at my shadow, etc.) This was [unfortunately] for a paid consulting project so that code doesn't live in the FlightGear tree. However, it's really easy to configure a view that stays locked on a specific lon/lat and I hacked a small bit of nasal to copy the point you click on over into the view target variables so you can click any where in the scene and the pan/tilt camera will hold center on that exact location. FlightGear offers a lot of flexibility and comparability in this arena.[12] — Curtis Olson

|

| Would it be possible to place the new "view" into a window instead of having a dedicated view? That would allow you to have an instrument panel with a blank cut-out that could hold this newscam/FLIR window. The easiest way to visualize the idea I have is to think about the view you'd see in one of the rear-view mirrors that most fighters have along the canopy bow (and the Spitfire has mounted on top of the canopy bow, outside the cockpit). You'd see your full screen view as usual, but you'd also have these "mirrors" showing the view behind you at the same time.[13] — Gene Buckle

|

| One thing we have to consider with rear view mirrors is that we don't currently have the ability to flip the display for the "mirror" effect. There's got to be a very simple view transform matrix that would invert the display in the horizontal direction. Probably the identity matrix with the appropriate axis negated (-1). It might be a relatively simple thing to add to the view transform pipeline at some point.[14] — Curtis Olson

|

| I had a look at this idea a while back - the problem I came across was that the camera would show the view to the rear NOT the mirror image. I couldn't see a way around that without a great deal of processing. At that point I gave up.[15] — Vivian Meazza

|
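Curtis' suggestion, which also addresses Vivian's "rear view instead of mirror image" problem, can be checked with a little arithmetic. A minimal sketch using plain arrays rather than OSG matrix types (`mirrorMatrix()` and the other helpers are illustrative): negating one axis of the identity matrix reflects the view across that axis. Note that such a matrix has a determinant of -1, so it also reverses the winding order of triangles; a mirrored view would therefore presumably need its front/back-face culling flipped as well.

```cpp
#include <array>
#include <cassert>

using Vec3 = std::array<double, 3>;
using Mat3 = std::array<std::array<double, 3>, 3>;

// Identity matrix with the given axis negated: a reflection across the plane
// perpendicular to that axis (axis 0 = horizontal flip).
Mat3 mirrorMatrix(int axis) {
    assert(axis >= 0 && axis < 3);
    Mat3 m{};
    for (int i = 0; i < 3; ++i) m[i][i] = 1.0;
    m[axis][axis] = -1.0;
    return m;
}

// Matrix-vector product m * v.
Vec3 transform(const Mat3& m, const Vec3& v) {
    Vec3 r{};
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j)
            r[i] += m[i][j] * v[j];
    return r;
}

// A negative determinant means the transform flips handedness (and winding).
double determinant(const Mat3& m) {
    return m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
         - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
         + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]);
}
```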

| As has been said previously, the proper way to support "cameras" via Canvas is using CompositeViewer, which does require a re-architecting of several parts of FG: CompositeViewer Support. Given the current state of things, that seems at least another 3-4 release cycles away. So, short of that, the only thing that we can currently support with reasonable effort is "slaved views" (as per $FG_ROOT/Docs/README.multiscreen).

That would not require too much in terms of coding, because the code is already there - in fact, CameraGroup.cxx already contains an RTT/FBO (render-to-texture) implementation that renders slaved views to an offscreen context. This is also how Rembrandt buffers are set up behind the scenes.

So basically, the code is there; it would need to be extracted/generalized and turned into a CanvasElement, and possibly integrated with the existing view manager code. |

| And then, there also is Zan's newcameras branch, which exposes rendering stages (passes) to XML/property tree space, so that individual stages are made accessible to shaders/effects. Thus, most of the code is there; it is mainly a matter of integrating things, i.e. that would require someone able to build SG/FG from source, familiar with C++ and willing/able to work through some OSG tutorials/docs to make this work: Canvas Development#Supporting Cameras

On the other hand, Canvas is/was primarily about exposing 2D rendering to fgdata space, so that fgdata developers could develop and maintain 2D rendering related features without having to be core developers (core development being an obvious bottleneck, as well as having a significant barrier to entry). In other words, people would need to be convinced that they want to let Canvas evolve beyond the 2D use-case, i.e. by allowing effects/shaders per element, but also by letting Cameras be created/controlled easily.

Personally, I do believe that this is a worthwhile thing to aim for, as it would help unify (and simplify) most RTT/FBO handling in SG/FG, and make this flexibility available to people like Thorsten who have a track record of doing really fancy, unprecedented stuff. Equally, there are tons of use-cases where aircraft/scenery developers may want to set up custom cameras (A380 tail cam, space shuttle) and render those to an offscreen texture (e.g. GUI dialog and/or MFD screen). |

| Tail cams are slaved cameras, so they could use code that already exists in FG, which would need to be integrated with the Canvas system to be exposed as a dedicated Canvas element (kinda like the view manager rendering everything to a texture/osg::Geode). There's window setup/handling code in CameraGroup.cxx which sets up these slaved views and renders the whole thing to an osg::TextureRectangle, which is pretty much what needs to be extracted and integrated with a new "CanvasCamera" element - the boilerplate for which can be seen at: [Canvas

The whole RTT/FBO texture setup can be seen here: http://sourceforge.net/p/flightgear/flightgear/ci/next/tree/src/Viewer/CameraGroup.cxx#l994

That code would be redundant in the Canvas context, i.e. it could be replaced by a Canvas FBO instead. The next step would then be wrapping the whole thing in a CanvasCamera and exposing the corresponding view parameters as properties (propertyObject) so that slaved cameras can be controlled via Canvas. Otherwise, very little else is needed, because the CanvasMgr would handle updating the Camera, and render everything to the texture that you specified. |
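To make the "view parameters as properties" step more concrete, here is a minimal sketch of two parameters such a hypothetical CanvasCamera element might expose (heading and pitch offsets relative to the aircraft, in degrees) and how they translate into a look direction. All names are illustrative; plain struct fields stand in for propertyObject-backed properties, and no OSG or SimGear types are used.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical view parameters a "CanvasCamera" element could expose as
// properties; plain fields stand in for propertyObject-backed values here.
struct CameraViewParams {
    double headingOffsetDeg = 0.0;  // relative to the aircraft nose, positive = right
    double pitchOffsetDeg = 0.0;    // positive = up, -90 = straight down
};

// Look direction as a unit vector in aircraft body axes.
struct Direction {
    double forward, right, up;
};

Direction lookDirection(const CameraViewParams& p) {
    assert(p.pitchOffsetDeg >= -90.0 && p.pitchOffsetDeg <= 90.0);
    const double kDegToRad = 3.14159265358979323846 / 180.0;
    const double h = p.headingOffsetDeg * kDegToRad;
    const double v = p.pitchOffsetDeg * kDegToRad;
    return {std::cos(v) * std::cos(h), std::cos(v) * std::sin(h), std::sin(v)};
}
```

For example, a downward-looking camera (pitch -90) or a tail camera (heading 180) would only differ in these two values; in an actual element, a change to them would presumably update the slaved camera's view matrix during the canvas update.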

- ↑ Avionyx (Wed Mar 12, 2014 7:08 am). Sub window view.

- ↑ seabutler (Fri Jan 24, 2014 5:38 am). "Half" the FG window?.

- ↑ Vladimir Karmisin (Thu, 08 Jan 2009 05:17:07 -0800). FG - camera for debugging purposes..

- ↑ Frederic Bouvier (Sun, 01 Jan 2012 07:14:43 -0800). Announcing Project Rembrandt.

- ↑ Tim Moore (Tue, 24 Jul 2012 22:38:35 -0700). Functions to textures?.

- ↑ Frederic Bouvier (Wed, 07 Mar 2012 05:08:06 -0800). RFC: changes to views and cameras.

- ↑ Lauri Peltonen (Wed, 07 Mar 2012 04:58:44 -0800). Rembrandt the plan.

- ↑ Mathias Fröhlich (Wed, 07 Mar 2012 10:15:31 -0800). Rembrandt the plan.

- ↑ Frederic Bouvier (Wed, 07 Mar 2012 05:08:06 -0800). RFC: changes to views and cameras.

- ↑ Tim Moore (30 Jun 2008 22:46:34 -0700). RFC: changes to views and cameras.

- ↑ Mathias Fröhlich (30 Jun 2008 22:46:34 -0700). RFC: changes to views and cameras.

- ↑ Curtis Olson (Tue, 15 May 2012 14:19:34 -0700). LiDAR simulation in FG and powerline scenery.

- ↑ Gene Buckle (Thu, 23 Jul 2009 10:11:05 -0700). view manager "look at" mode.

- ↑ Curtis Olson (Thu, 23 Jul 2009 10:11:05 -0700). view manager "look at" mode.

- ↑ Vivian Meazza (Thu, 23 Jul 2009 10:11:05 -0700). view manager "look at" mode.

Effects / Shaders

Note When it comes to supporting effects and shaders, people generally have two use-cases in mind for Canvas:

|

| Could canvas be used to take a view from a certain area in a certain direction and render it onto a fuselage--in other words, to create a reflection? |

The effects system pre-dates Canvas by several years - meanwhile, it would be one of the more natural choices to optionally provide support for interfacing/integrating both, without this integration being specific to a single use case (e.g. aircraft/liveries). We've got other useful work related to effects that never made it into git and that predates Canvas by several years - but when it comes to managing dynamically created textures, Canvas can probably be considered the common denominator, and it doesn't make much sense to add even more disparate features that cannot be used elsewhere.

| I'm currently experimenting with a 2D Canvas and rendering everything to a texture. For this I use FGODGauge to render to texture and FGODGauge::set_texture to replace a texture in the cockpit with the texture from the fbo. This works very well [...] I have just extended the ReplaceStaticTextureVisitor::apply(osg::Geode& node) method to also replace texture inside effects. It works now by using the same technique as for the SGMaterialAnimation where a group is placed in between the object whose texture should be changed and its parent. This group overrides the texture:

```cpp
virtual void apply(osg::Geode& node)
{
  simgear::EffectGeode* eg = dynamic_cast<simgear::EffectGeode*>(&node);
  if( eg )
  {
    // geode with an effect: change the texture in the effect's default state set
    osg::StateSet* ss = eg->getEffect()->getDefaultStateSet();
    if( ss )
      changeStateSetTexture(ss);
  }
  else if( node.getStateSet() )
    changeStateSetTexture(node.getStateSet());

  // also handle state sets attached to the individual drawables
  int numDrawables = node.getNumDrawables();
  for (int i = 0; i < numDrawables; i++) {
    osg::Drawable* drawable = node.getDrawable(i);
    osg::StateSet* ss = drawable->getStateSet();
    if (ss)
      changeStateSetTexture(ss);
  }

  traverse(node);
}

// ...
// texture override applied to each affected state set:
stateSet->setTextureAttribute(0, _new_texture, osg::StateAttribute::OVERRIDE);
stateSet->setTextureMode(0, GL_TEXTURE_2D, osg::StateAttribute::ON);
```

— Thomas Geymayer

|

| If you want to pass substantial amounts of data, I’d suggest to use a texture (with filtering disabled, probably) to pass the info. Since we don’t have much chance of using the ‘correct’ solution (UBOs) in the near future.

If you need help generating a suitable texture on the CPU side, let me know.[2] — James Turner

|
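A minimal sketch of the CPU-side layout for James' suggestion, assuming one float per texel in a single-channel floating-point texture of fixed width (all names are hypothetical, and the actual upload, e.g. via an osg::Image, is left out): element i is stored at texel (i mod width, i / width), and because filtering is disabled (nearest-neighbour sampling), the shader should sample at texel centres to read back exactly the stored value.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Layout of a one-value-per-texel data texture with a fixed row width.
struct TexLayout {
    std::size_t width, height;
};

struct TexCoord {
    float s, t;
};

// Number of rows needed to hold `count` values.
TexLayout layoutFor(std::size_t count, std::size_t width) {
    assert(width > 0);
    return {width, (count + width - 1) / width};
}

// Copies the values row by row; unused trailing texels are zero-filled.
std::vector<float> packValues(const std::vector<float>& values, const TexLayout& layout) {
    std::vector<float> texels(layout.width * layout.height, 0.0f);
    assert(values.size() <= texels.size());
    for (std::size_t i = 0; i < values.size(); ++i) texels[i] = values[i];
    return texels;
}

// Normalized coordinate of the centre of the texel holding element i; with
// nearest filtering, sampling there returns exactly the stored value.
TexCoord texCoordFor(std::size_t i, const TexLayout& layout) {
    const std::size_t x = i % layout.width;
    const std::size_t y = i / layout.width;
    return {(static_cast<float>(x) + 0.5f) / static_cast<float>(layout.width),
            (static_cast<float>(y) + 0.5f) / static_cast<float>(layout.height)};
}
```

The shader would compute the same texel-centre coordinate for element i before calling texture2D().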

- ↑ Thomas Geymayer (Tue, 01 May 2012 15:34:41 -0700). Replace texture with RTT.

- ↑ James Turner (2014-03-07 10:27:40). Passing arrays to a shader.

At some point, the canvas system itself could probably benefit from being also able to use the Effects/Shader framework, so that canvas textures can also be processed via effects and shaders optionally, before they get drawn. That should make all sorts of fancy effects possible, such as night vision cameras or thermal view, rendered to canvas textures/groups.

It is not yet clear how best to address this; the easiest option might be to use (boolean) properties to specify whether effects or vertex/fragment shaders should be invoked, along with their file names relative to $FG_ROOT.

That would then disable the default rendering pipeline for those canvas textures and use shaders.
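One way such property-driven settings could be represented, as a minimal sketch: the property names in the comment and the `ShaderConfig`/`resolveShaders()` names are assumptions, not existing Canvas properties. If the flag is off, or a file name is missing, the canvas keeps its default rendering; otherwise the file names are resolved relative to $FG_ROOT before loading.

```cpp
#include <cassert>
#include <filesystem>
#include <optional>
#include <string>

// Hypothetical per-canvas shader settings, e.g. read from properties such as
// "shader/enabled", "shader/vertex" and "shader/fragment".
struct ShaderConfig {
    bool enabled = false;
    std::string vertexFile;    // relative to $FG_ROOT
    std::string fragmentFile;  // relative to $FG_ROOT
};

struct ResolvedShaders {
    std::filesystem::path vertex, fragment;
};

// Returns the shader paths to load, or std::nullopt if the default
// rendering pipeline should be used instead.
std::optional<ResolvedShaders> resolveShaders(const ShaderConfig& cfg,
                                              const std::filesystem::path& fgRoot) {
    assert(!fgRoot.empty());
    if (!cfg.enabled || cfg.vertexFile.empty() || cfg.fragmentFile.empty())
        return std::nullopt;
    return ResolvedShaders{fgRoot / cfg.vertexFile, fgRoot / cfg.fragmentFile};
}
```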

Basically, anything that's not directly possible via the core canvas system or via its Nasal wrappers would then be handled via effects/shaders, so we would gain lots of flexibility as well as performance benefits.
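To give an idea of what a night vision effect as mentioned above would compute, here is a CPU-side sketch of a simple recolouring (the `Rgb` type and `nightVision()` are hypothetical; in practice this would be a few lines of fragment shader code): each pixel's luminance, using the Rec. 601 weights, is amplified and written to the green channel only.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

struct Rgb {
    float r, g, b;  // components in [0, 1]
};

// Simple "night vision" recolouring: amplified luminance in the green channel.
std::vector<Rgb> nightVision(const std::vector<Rgb>& image, float gain) {
    assert(gain > 0.0f);
    std::vector<Rgb> out;
    out.reserve(image.size());
    for (const Rgb& p : image) {
        // Rec. 601 luma weights
        const float luminance = 0.299f * p.r + 0.587f * p.g + 0.114f * p.b;
        out.push_back({0.0f, std::min(1.0f, luminance * gain), 0.0f});
    }
    return out;
}
```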

For the time being, neither effects nor shaders are exposed/accessible to the Canvas system, so depending on what you have in mind, you may need to extend the underlying base class accordingly - a simple proof-of-concept to get you going would be this:

```cpp
#include <osg/Program>
#include <osg/Shader>
#include <osg/StateSet>

// ...

// filename1/filename2: paths to the vertex and fragment shader sources
osg::ref_ptr<osg::Program> shadeProg(new osg::Program);

// set up vertex shader
osg::ref_ptr<osg::Shader> vertShader(
    osg::Shader::readShaderFile(osg::Shader::VERTEX, filename1));

// set up fragment shader
osg::ref_ptr<osg::Shader> fragShader(
    osg::Shader::readShaderFile(osg::Shader::FRAGMENT, filename2));

// bind each shader to the program
shadeProg->addShader(vertShader.get());
shadeProg->addShader(fragShader.get());

// attach the shader program to the element's transform node
osg::ref_ptr<osg::StateSet> objSS = _transform->getOrCreateStateSet();
objSS->setAttribute(shadeProg.get());
```

To make things more configurable, you can expose things like the type of shader and the file names to the property tree by using the PropertyObject<> template, e.g.:

```cpp
#include <simgear/props/propertyObject.hxx>

// ...

// n: the property node the shader settings should live under
simgear::PropertyObject<std::string> vertex_filename(
    simgear::PropertyObject<std::string>::create(n, "shader.vert"));
simgear::PropertyObject<std::string> fragment_filename(
    simgear::PropertyObject<std::string>::create(n, "shader.frag"));
```

For additional details, refer to Howto:Use Property Tree Objects.

Ideally, there could be a simple interface class, similar to the property-observer helper but specific to enabling shaders for a canvas texture, so that these settings can be customized via listeners.

So if people want to create really fancy textures or camera views, they could then use effects/shaders, which would keep the design truly generic and ensure that no bloat is introduced into the main canvas system.

We did have some discussions about supporting per-canvas (actually per canvas::Element) effects and shaders via properties, and TheTom even mentioned that he was interested in supporting this at some point, especially given the number of projects that could be realized like that (FLIR, night vision, thermal imaging etc) - but so far, quite a few other things have obviously been taking precedence. So, as far as I am aware, nobody is working on effects/shader support for Canvas, even though I am sure that this would be highly appreciated.

At the time of writing this (02/2014) the Canvas does not yet include any support for applying custom effects or Shaders to canvas elements or the whole canvas itself - however, supporting this is something that's been repeatedly discussed over time, so we're probably going to look into supporting this eventually[2].

If the canvas can internally be referenced by a texture2D() call, then it should be easy - the fragment shader knows screen resolution pixel coordinates, so it's straightforward to look up the local pixel from the texture and then blur or recolor it, distort it or whatever you have in mind.

Menu lighting based on the light in the scene might be cool, too.

None of these should be very complicated to do.

Assuming the canvas is internally a quad with a properly uv-mapped texture, then:

- making the vertex shader just pass everything through and

- uniform sampler2D myTexture; should make that texture available to the fragment shader

- vec2 coords = gl_TexCoord[0].xy; should get the coordinates of the local pixel inside the texture

```glsl
#version 120

uniform sampler2D input_tex;

void main() {
    // get the texture coords of the pixel
    vec2 coords = gl_TexCoord[0].xy;
    // look up the pixel color from the input texture
    vec4 color = texture2D(input_tex, coords);
    // and pass the pixel color through
    gl_FragColor = color;
}
```
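Going one step beyond the pass-through shader, the "blur" idea mentioned above can be prototyped on the CPU first. Below is a minimal sketch (hypothetical `Image` type and `boxBlur()` helper, greyscale only, clamp-to-edge addressing) of what a 3x3 blur fragment shader would do for each output pixel: fetch the neighbouring texels and average them.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Greyscale image stored row by row.
struct Image {
    int width, height;
    std::vector<float> pixels;

    float at(int x, int y) const {
        // Clamp-to-edge addressing, like GL_CLAMP_TO_EDGE.
        x = std::clamp(x, 0, width - 1);
        y = std::clamp(y, 0, height - 1);
        return pixels[static_cast<std::size_t>(y * width + x)];
    }
};

// 3x3 box blur: each output pixel is the mean of its 3x3 neighbourhood.
Image boxBlur(const Image& in) {
    assert(in.width > 0 && in.height > 0);
    assert(in.pixels.size() == static_cast<std::size_t>(in.width * in.height));
    Image out{in.width, in.height, std::vector<float>(in.pixels.size())};
    for (int y = 0; y < in.height; ++y)
        for (int x = 0; x < in.width; ++x) {
            float sum = 0.0f;
            for (int dy = -1; dy <= 1; ++dy)
                for (int dx = -1; dx <= 1; ++dx)
                    sum += in.at(x + dx, y + dy);
            out.pixels[static_cast<std::size_t>(y * in.width + x)] = sum / 9.0f;
        }
    return out;
}
```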

There are at least 2-3 people who can help with pointers, but we don't have time to implement this ourselves - so if anybody is interested, please get in touch via the canvas subforum.

The Effects framework is implemented in SimGear: https://sourceforge.net/p/flightgear/simgear/ci/next/tree/simgear/scene/material

```glsl
void main(void) {
    gl_FragColor = vec4(1.0, 0.0, 0.0, 1.0);
}
```

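```glsl
// based on:
// http://people.freedesktop.org/~idr/OpenGL_tutorials/03-fragment-intro.html
// adapted by i4dnf as per: http://wiki.flightgear.org/Talk:Canvas_Development
// ** untested **

void main(void)
{
    // dist_squared is not declared in the adapted snippet; computing it as the
    // squared distance from the centre of a 50x50 pixel cell is an assumption
    vec2 pos = mod(gl_FragCoord.xy, vec2(50.0)) - vec2(25.0);
    float dist_squared = dot(pos, pos);

    vec4 baseColor = vec4(.90, .90, .90, 0.0);
    vec4 subtractColor = vec4(-.70, -.70, -.50, -0.2);
    // 0.0 inside a circle of radius 20 pixels (400 = 20^2), 1.0 outside
    float doSubtract = step(400.0, dist_squared);
    vec4 fragColor = doSubtract * subtractColor + baseColor;
    gl_FragColor = fragColor;
}
```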
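The `step()` call in the dot-pattern shader replaces an if/else: GLSL's `step(edge, x)` returns 0.0 for x < edge and 1.0 otherwise, so `doSubtract * subtractColor + baseColor` yields either `baseColor` or `baseColor + subtractColor` without branching. A small C++ check of that equivalence; the helpers only mimic the GLSL semantics and are written for illustration.

```cpp
#include <array>
#include <cstddef>

using Color = std::array<float, 4>;

// Mimics GLSL step(): 0.0 if x < edge, 1.0 otherwise.
float glslStep(float edge, float x) { return x < edge ? 0.0f : 1.0f; }

// Branchless selection, as in the shader.
Color branchless(float distSquared, const Color& base, const Color& subtract) {
    const float s = glslStep(400.0f, distSquared);
    Color c{};
    for (std::size_t i = 0; i < c.size(); ++i) c[i] = s * subtract[i] + base[i];
    return c;
}

// Equivalent version with an explicit branch.
Color branching(float distSquared, const Color& base, const Color& subtract) {
    if (distSquared < 400.0f) return base;
    Color c{};
    for (std::size_t i = 0; i < c.size(); ++i) c[i] = base[i] + subtract[i];
    return c;
}
```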

Implementation-wise, supporting shaders per canvas seems straightforward - but it would probably be better to support shaders per element, where each element would render its own sub-texture if shaders/effects are specified, and apply the canvas' osg::StateSet otherwise. We could add an interface on top of SimGear's "Effects" framework which would be implemented by the Canvas itself, but also by Canvas::Element.

- Probably need to extend the Effects framework to support reloading effects/shaders from disk for testing purposes
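A minimal sketch of the interface idea described above, with hypothetical names throughout (`EffectTarget`, `CanvasModel`, `ElementModel`, and the `.eff` file names): both the canvas and its elements implement the same interface, an element with its own effect would render to its own sub-texture, and an element without one falls back to whatever its canvas specifies.

```cpp
#include <string>
#include <utility>

// Hypothetical common interface for anything that can carry an effect.
class EffectTarget {
public:
    virtual ~EffectTarget() = default;
    // Effect explicitly set on this object (empty if none).
    virtual std::string ownEffect() const = 0;
    // Effect actually used when rendering this object.
    virtual std::string effectiveEffect() const { return ownEffect(); }
};

class CanvasModel : public EffectTarget {
public:
    explicit CanvasModel(std::string effect) : effect_(std::move(effect)) {}
    std::string ownEffect() const override { return effect_; }

private:
    std::string effect_;
};

class ElementModel : public EffectTarget {
public:
    ElementModel(const CanvasModel& canvas, std::string effect)
        : canvas_(canvas), effect_(std::move(effect)) {}
    std::string ownEffect() const override { return effect_; }
    // Only elements with their own effect need a separate sub-texture.
    bool needsOwnSubTexture() const { return !effect_.empty(); }
    std::string effectiveEffect() const override {
        return needsOwnSubTexture() ? effect_ : canvas_.effectiveEffect();
    }

private:
    const CanvasModel& canvas_;
    std::string effect_;
};
```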

Also see:

- http://trac.openscenegraph.org/projects/osg/wiki/Support/Tutorials/ShadersIntroduction

- http://trac.openscenegraph.org/projects/osg/wiki/Support/Tutorials/ShadersUpdating

- http://en.wikipedia.org/wiki/OpenGL_Shading_Language

- http://www.cuboslocos.com/tutorials/OSG-Shader

- http://forum.flightgear.org/viewtopic.php?f=71&t=22166

- http://forum.flightgear.org/search.php?st=0&sk=t&sd=d&sr=posts&keywords=canvas+shader

- http://forum.flightgear.org/search.php?st=0&sk=t&sd=d&sr=posts&keywords=canvas+shaders

- http://forum.flightgear.org/search.php?st=0&sk=t&sd=d&sr=posts&keywords=canvas+effects


There has also been talk about possibly supporting a dedicated PDF element eventually:

| Hmmm, I'm now wondering about a canvas PDF viewer! |

| Now to see what happens with the EFB ideas and the canvas PDF support. |

| Canvas cannot currently deal with PDF files directly - even though OSG does have support for doing this kind of thing, we would need to add a few dependencies, i.e. a PDF rendering library like "poppler" that would render a PDF to an osg::Image. At that point, it could be dealt with like a conventional canvas image, and could even be retrieved via HTTP. Extending Canvas accordingly could actually be useful, because it would even allow us to render other PDFs inside dialogs - such as the manual itself, i.e. as part of some kind of integrated "help" system. The question is whether TheTom can be convinced that this is a worthwhile goal or not. But it's clearly something for post 3.2.

|

| It may make sense to revisit this idea: supporting a subset of PDF would not be too difficult, but it would be better to really use a PDF library and OSG's built-in support for rendering a PDF to a texture, which could then be easily turned into a new Canvas Element, as per the example at: Canvas Development#Adding a new Element. The coding part is relatively straightforward (basically copy&paste), but getting the dependencies/cmake magic right for all supported FG platforms would probably require a bit of work. |

| More recently, another idea is to add dedicated PDF support to the core Canvas system, so that arbitrary PDF files can be rendered onto a Canvas: viewtopic.php?f=71&t=27499&p=258282#p258282 |
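Whichever PDF library ends up being used, rasterizing a page into an image (for instance an osg::Image backing a canvas image) requires choosing a resolution: PDF page sizes are expressed in points (1/72 inch), so the pixel size is points × dpi / 72. A minimal sketch with a hypothetical helper:

```cpp
#include <cassert>
#include <cmath>

struct PixelSize {
    int width, height;
};

// Convert a PDF page size in points (1/72 inch) to pixels at the given DPI,
// rounding to the nearest whole pixel.
PixelSize pagePixels(double widthPt, double heightPt, double dpi) {
    assert(widthPt > 0.0 && heightPt > 0.0 && dpi > 0.0);
    return {static_cast<int>(std::lround(widthPt * dpi / 72.0)),
            static_cast<int>(std::lround(heightPt * dpi / 72.0))};
}
```

For example, a US Letter page (612 × 792 points) rendered at 96 dpi needs an 816 × 1056 pixel image.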

If you are interested in working on any of these, please get in touch via the canvas sub forum first.

You will want to add a new Canvas::Element subclass whenever you want to add support for features which cannot be currently expressed easily (or efficiently) using existing means/canvas drawing primitives (i.e. via existing elements and scripting space frameworks).

For example, this may involve projects requiring camera support, i.e. rendering scenery views to a texture, rendering 3D models to a texture or doing a complete moving map with terrain elevations/height maps (even though the latter could be implemented by sub-classing Canvas::Image to some degree).

Another good example for implementing new elements is rendering file formats like PDF, 3d models or ESRI shape files.

To create a new element, you need to create a new child class which inherits from the Canvas::Element base class (or any of its child classes, e.g. Canvas::Image) and implement the interface of the parent class by providing/overriding the corresponding virtual methods.
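The override pattern can be sketched without any SimGear dependencies. `ElementBase` and `PdfElement` below are simplified, hypothetical stand-ins (the real Canvas::Element works with SGPropertyNode and OSG types, and its method signatures differ): property changes are only recorded in valueChanged(), and the expensive work (here, re-rendering a PDF page) is deferred to update(), assumed to be called once per frame.

```cpp
#include <cassert>
#include <string>

// Simplified stand-in for a canvas element base class.
class ElementBase {
public:
    virtual ~ElementBase() = default;
    // Called when a child property of the element changes.
    virtual void valueChanged(const std::string& name, const std::string& value) = 0;
    // Called once per frame with the elapsed time in seconds.
    virtual void update(double dt) = 0;
};

// Hypothetical PDF element: re-renders only when its properties changed.
class PdfElement : public ElementBase {
public:
    void valueChanged(const std::string& name, const std::string& value) override {
        if (name == "src") {
            src_ = value;
            dirty_ = true;
        } else if (name == "page") {
            page_ = std::stoi(value);
            dirty_ = true;
        }
    }
    void update(double dt) override {
        assert(dt >= 0.0);
        if (!dirty_) return;
        // A real element would rasterize page_ of src_ into its image here.
        ++renderCount_;
        dirty_ = false;
    }
    int renderCount() const { return renderCount_; }
    int page() const { return page_; }

private:
    std::string src_;
    int page_ = 1;
    bool dirty_ = false;
    int renderCount_ = 0;
};
```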

To add a new element, these are the main steps:

- Set up a working build environment (including simgear): Building FlightGear

- update/pull simgear, flightgear and fgdata

- check out a new set of topic branches for each repo: git checkout -b topic/canvas-CanvasPDF

- Navigate to $SG_SRC/canvas/elements

- Create a new set of files CanvasPDF.cxx/.hxx (as per Adding a new Canvas element)

- add them to $SG_SRC/canvas/elements/CMakeLists.txt (as per Developing using CMake)

- edit $SG_SRC/canvas/elements/CanvasGroup.cxx to register your new element (header and staticInit)

- begin replacing the stubs with your own C++ code

- map the corresponding OSG/library APIs to properties/events understood by the Canvas element (see the valueChanged() and update() methods)

- alternatively, consider using dedicated Nasal/CppBind bindings
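The registration step essentially maps an element type name to a factory function. The following standalone sketch illustrates that idea with a hypothetical `ElementRegistry`; it is not SimGear's actual registration code (which, as per the steps above, goes into CanvasGroup.cxx), and `PathStub`/`PdfStub` are placeholders.

```cpp
#include <cassert>
#include <functional>
#include <map>
#include <memory>
#include <string>

// Simplified stand-in for an element base class.
struct Element {
    virtual ~Element() = default;
    virtual std::string type() const = 0;
};

struct PathStub : Element { std::string type() const override { return "path"; } };
struct PdfStub : Element { std::string type() const override { return "pdf"; } };

// Hypothetical registry mapping element type names to factory functions.
class ElementRegistry {
public:
    using Factory = std::function<std::unique_ptr<Element>()>;

    template <typename T>
    void add(const std::string& name) {
        assert(factories_.count(name) == 0 && "element type registered twice");
        factories_[name] = [] { return std::make_unique<T>(); };
    }

    // Creates an element of the given type, or returns nullptr if unknown.
    std::unique_ptr<Element> create(const std::string& name) const {
        auto it = factories_.find(name);
        if (it == factories_.end()) return nullptr;
        return it->second();
    }

private:
    std::map<std::string, Factory> factories_;
};
```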

Below, you can find patches illustrating how to approach each of these steps using boilerplate code, which you will need to customize/replace accordingly:

Caution This custom Canvas element requires a 3rd party library which is not currently used by SimGear/FlightGear, so the top-level CMakeLists.txt file in $SG_SRC needs to be modified to add a corresponding find_package() call, and you also need to download/install the corresponding library for building SG/FG. In addition, the CMake module itself may need to be placed in $SG_SRC/CMakeModules:

CanvasPDF: (required cmake changes)

|

Discussed new Elements

|

|

The previously mentioned primitives alone can already be used to create very sophisticated avionics and dialogs - however, depending on your needs, you may want to extend the canvas system to support additional primitives. Typically, you'll want to add new primitives in order to optimize performance or simplify the creation of more sophisticated avionics and/or dialogs (e.g. for mapping/charting purposes). If you are interested in adding new primitives, please take a look at the sources in $SG_SRC/canvas/elements.

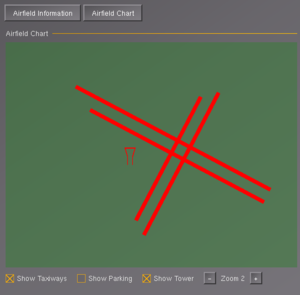

For example, there's been talk about possibly adding the following additional primitives at some point. However, none of these are currently a priority or being worked on by anybody:

- support for a vertical mapping mode (e.g. to create Vertical Situation Displays or flight path evaluation dialogs); it would probably make sense to use PROJ4 for additional projection support?

- support for rendering scenery views (e.g. for tail cameras or mirrors etc) [3] [4] ticket #1250

- support for ESRI shapefiles (instead of using shapelib, it would make sense to use GDAL/OGR here, or directly the OSG/ReaderWriterOGR plugin) [5] (FlightGear/osgEarth now depends on GDAL, so should be straightforward dependency-wise):

- support for GeoTIFF files or terrain height profiles using the tile cache

- rendering 3D objects

- support for orthographic moving map displays, e.g. using atlas [6] (ideally using CompositeViewer Support):

FlightGear already supports creating multiple osgviewer windows; this is commonly used in multi-screen setups. To support the creation and usage of osgviewer windows in Canvas, we would need to look into adding a new placement type to the Canvas system, so that osgviewer/OS windows can be created and controlled via the Canvas system and a handful of placement-specific properties [7].

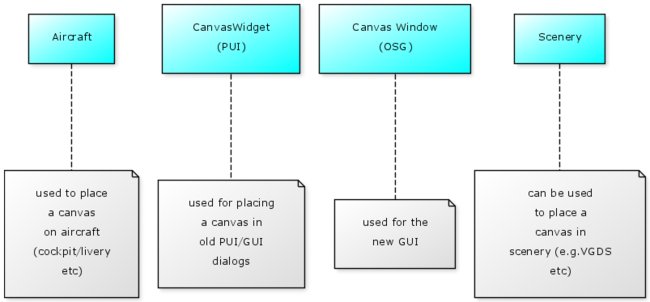

Placements

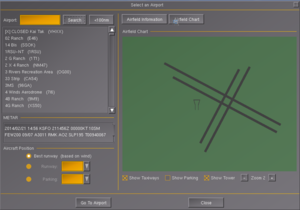

Users can use the Canvas system to develop all sorts of features that may need to be accessible through different interfaces. For this reason, Canvas uses the concept of so-called placements, so that a Canvas texture can be shown inside GUI windows, GUI dialogs, cockpits and aircraft textures (liveries), and also as part of the scenery (e.g. for a VGDS).
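Conceptually, each placement type is handled by a factory that knows how to attach a Canvas texture to one particular kind of target. The standalone sketch below only illustrates that dispatch-by-type idea; `Placement`, `WindowPlacement`, `ObjectPlacement` and `PlacementRegistry` are hypothetical and do not reproduce SimGear's actual classes.

```cpp
#include <cassert>
#include <functional>
#include <map>
#include <memory>
#include <string>
#include <utility>

// Hypothetical base class: something that displays a Canvas texture somewhere.
struct Placement {
    virtual ~Placement() = default;
    virtual std::string describe() const = 0;
};

// Hypothetical placement showing the texture inside a GUI window.
struct WindowPlacement : Placement {
    std::string describe() const override { return "GUI window"; }
};

// Hypothetical placement replacing the texture of a named 3D model node.
struct ObjectPlacement : Placement {
    explicit ObjectPlacement(std::string node) : node_(std::move(node)) {}
    std::string describe() const override { return "3D object: " + node_; }
    std::string node_;
};

// Maps a placement type name to a factory that creates placements of that
// type for a given target (e.g. the name of a textured 3D model node).
class PlacementRegistry {
public:
    using Factory =
        std::function<std::unique_ptr<Placement>(const std::string& target)>;

    void add(const std::string& type, Factory factory)
    {
        assert(factory);  // an empty std::function could not be called later
        factories_[type] = std::move(factory);
    }

    // Returns nullptr if no factory has been registered for this type.
    std::unique_ptr<Placement> create(const std::string& type,
                                      const std::string& target) const
    {
        const auto it = factories_.find(type);
        if (it == factories_.end())
            return nullptr;
        return it->second(target);
    }

private:
    std::map<std::string, Factory> factories_;
};
```

With this layout, supporting a new kind of target, such as a dedicated OS window, would mean registering one more factory rather than changing the code that renders the Canvas itself.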

Note: The features described in the following section aren't currently supported or being worked on, but they have seen lots of community discussion over the years, so this serves as a rough overview.

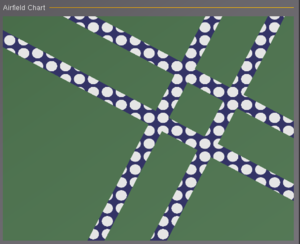

Scenery Overlays

Also see Photoscenery via Canvas? and A project to create a source of free geo-referenced instrument charts

I've been wondering how hard it would be to add a tile loader mode where the default texture is ignored, and instead, a photo texture of the tile is applied. It may not be an optimal photo-texture implementation (but it might be good enough to be fun and interesting?) — Curtis Olson (Oct 1st, 2008). Re: [Flightgear-devel] Loading Textures for Photo-Scenery?.

[...] FlightGear to superimpose a given texture over a whole terrain tile, given that a texture file with the same name as the tile is found. I think that this would require that either a) TerraGear generate appropriate texture coordinates for the tile, mapping the texture continuously over the whole tile, or b) in case of loadPhotoScenery, the texture coordinates contained in the .btg.gz must be ignored and rebuilt on the fly by FlightGear. — Ralf Gerlich (Oct 1st, 2008). Re: [Flightgear-devel] Loading Textures for Photo-Scenery?.
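Option a) in the quote above amounts to deriving each vertex's texture coordinates from its geographic position relative to the tile bounds, so that one image covers the whole tile continuously. A minimal sketch, assuming the tile is bounded by a longitude/latitude rectangle, vertex positions are available in degrees and the photo texture is georeferenced in plain longitude/latitude; `TileBounds` and `tileTexCoord` are hypothetical names:

```cpp
#include <cassert>

// Hypothetical geographic bounds of one scenery tile, in degrees.
struct TileBounds {
    double lon_min, lon_max;
    double lat_min, lat_max;
};

struct TexCoord {
    double u;
    double v;
};

// Compute texture coordinates that stretch one image across the whole tile:
// (0,0) at the south-west corner and (1,1) at the north-east corner.
TexCoord tileTexCoord(const TileBounds& b, double lon_deg, double lat_deg)
{
    assert(b.lon_max > b.lon_min && b.lat_max > b.lat_min);
    return TexCoord{(lon_deg - b.lon_min) / (b.lon_max - b.lon_min),
                    (lat_deg - b.lat_min) / (b.lat_max - b.lat_min)};
}
```

Applying this to every vertex at load time corresponds to option b), i.e. ignoring the stored texture coordinates and rebuilding them on the fly.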

Chris Schmitt, Pete & myself have also discussed the MSFS approach, where we render surface information to textures, either on the CPU or GPU. This solves a whole bunch of issues in airports, and allows the generation of the textures to be defined based on user settings, performance, available texture RAM and so on. (Don’t render roads, render fancy boundaries for coastlines, paint snow onto crops based on season) If the textures are re-generated dynamically based on changing view, the user need never see a ‘blurry’ texture. The generated texture doesn’t need to encode RGB, it can encode whatever inputs the shaders like - eg material ID, gradient, distance to boundaries. (And of course, for far away areas, we generate or read a coarse, low-resolution map very cheaply) From my perspective the appeal is this work can be done on a spare CPU core, and it actually fits quite well with something like osgEarth - we let osgEarth handle the elevation data, and the texture-generating code simply becomes the source of raster data which osgEarth overlays on top. With the GPU-based flattening of elevation data it even works to make roads/railways interact with terrain nicely. Whether or not the memory-bandwidth burned in moving textures to the GPU is better or worse than doing everything GPU-side as Tim suggests with decals, I have no clue about. Similarly I don’t know how disruptive this scheme would be architecturally - intuitively osgEarth must handle loading different resolutions of raster data interactively - that’s exactly what it does for photo-scenery after all - but I haven’t looked at the API to see how easy or hard such an integration would be. — James Turner (Nov 27th, 2013). Re: [Flightgear-devel] Rendering strategies.

Texture overlays - FG scenery engine does the chopping and texture co-ord generation.[1] — Paul Surgeon

For the sake of completeness, and I am not saying that you should do this (it is almost certainly going to be much worse performance-wise than any shaders), but if you want the shadow to be accurate despite potential terrain sloping, you could apply a Canvas texture onto the surface (admittedly, this is much more straightforward in the case of an actual 3D model like a carrier). Otherwise, you'll also want to use a workaround and attach the texture to the 3D model (aka main aircraft). But people have been using Canvas for all sorts of purposes, including even liveries: Howto:Dynamic_Liveries_via_Canvas

But unlike GLSL/shaders, a Canvas is not primarily a GPU thing, i.e. there's lots of CPU-level stuff going on affecting performance.

I am looking for a method for adding a graphical overlay channel to FlightGear. This overlay would consist of a dynamic texture that can be modified in real time. I've used other OpenGL based systems with this feature but don't know where to start with implementing it in FlightGear.[2] — Noah Brickman

Once the frame is converted to an OpenGL texture, then it would be a very simple matter of displaying it on the screen with a textured rectangle drawn in immediate mode ... possibly with some level of transparency, or not ...

I'm involved in some UAV research where we are using FlightGear to render a synthetic view from the perspective of a live flying UAV. Would be really cool to superimpose the live video over the top of the FlightGear synthetic view. Or superimpose an F-16 style HUD on top of the live video ... I have lots of fun ideas for someone with a fast frame grabber and a bit of time [...] Then do whatever bit fiddling is needed to scale/convert the raster image to an OpenGL texture. Then draw this texture on a quad that is aligned correctly relative to the camera. It might be possible to get fancy and alpha blend the edges a bit. Given an image and the location and orientation of the camera, it would be possible to locate world coordinates across a grid on that image. That would allow a quick/crude orthorectification where the image could be rubber sheeted onto the terrain. This would take some offline processing, but you could end up building up a near real time 3d view of the world that could then be viewed from a variety of perspectives. The offline tools could update the master images based on resolution or currency ... that's probably a PhD project for someone, but many of the pieces are already in place and the results could be extremely nice and extremely useful (think managing the effort to fight a dynamic forest fire, or other emergency/disaster management, traffic monitoring, construction sites, city/county management & planning, etc.) I could even imagine some distributed use of this so that if you have several UAVs out flying over an area, they could send their imagery back to a central location to update a master database ... then the individual operators could see near real time 3d views of places that another UAV has already overflown. If we started building up more functionality in this area, there are a lot of different directions we could take it, all of which could be extremely cool.[3] — Curtis Olson
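The "quick/crude orthorectification" described in the quote above boils down to casting a ray from the camera through an image pixel and intersecting it with the terrain. The sketch below assumes a flat ground plane (z = 0 in a local east/north/up frame in metres) and a pinhole camera whose optical axis and image axes are already given as unit vectors in that frame; real terrain would require intersecting with elevation data instead. All type and function names are hypothetical.

```cpp
#include <cassert>
#include <optional>

struct Vec3 {
    double x, y, z;
};

Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 operator*(double s, Vec3 a) { return {s * a.x, s * a.y, s * a.z}; }

// Hypothetical pinhole camera in a local east/north/up frame (metres).
struct Camera {
    Vec3 position;    // z is the height above the ground plane
    Vec3 forward;     // unit vector along the optical axis
    Vec3 right;       // unit vector along the image x axis
    Vec3 up;          // unit vector pointing towards the top of the image
    double focal_px;  // focal length expressed in pixels
    double cx, cy;    // principal point (image centre) in pixels
};

// Intersect the viewing ray through pixel (px, py) with the plane z = 0.
// Image y grows downwards, as in most raster formats.
// Returns no value if the ray points at or above the horizon.
std::optional<Vec3> pixelToGround(const Camera& c, double px, double py)
{
    assert(c.position.z > 0.0);  // camera must be above the ground plane
    const Vec3 dir = c.forward
                   + ((px - c.cx) / c.focal_px) * c.right
                   + (-(py - c.cy) / c.focal_px) * c.up;
    if (dir.z >= 0.0)
        return std::nullopt;  // the ray never reaches the ground
    const double t = -c.position.z / dir.z;  // solve position.z + t * dir.z = 0
    return c.position + t * dir;
}
```

Evaluating this function on a coarse grid of pixels yields the grid of world coordinates the quote mentions, which could then drive a "rubber sheet" warp of the image onto the terrain.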

Could we generate the texture on the fly? Based on landclass and road data? I could see a number of advantages/disadvantages here as compared to our current, generic textures:

A very interesting idea - so interesting I thought of it and discussed it with some people last year :) The summary answer is, it should be possible, it would have pretty much the benefits and drawbacks you mention (especially the VRAM consumption), and it would allow nice LoD and solve some other issues. Especially it avoids the nasty clipping issues we have with surface data in TerraGear, since you just paint into the texture, no need to clip all the linear data.[5] — James Turner
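As a rough illustration of "just painting into the texture", the sketch below rasterises a grid of landclass samples into an RGBA pixel buffer via a lookup table. The landclass IDs, the colours and the idea of a per-texel landclass grid are assumptions made for illustration only; as the James Turner quote on rendering strategies points out, a real implementation might encode material IDs or other shader inputs instead of plain colours.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <map>
#include <vector>

// Hypothetical landclass identifiers; real scenery uses named materials.
enum class LandClass : std::uint8_t { Water, Grass, Forest, Urban };

struct RGBA {
    std::uint8_t r, g, b, a;
};

// Paint a width x height grid of landclass samples (row-major) into an RGBA
// pixel buffer. Classes missing from the palette fall back to opaque magenta
// so that gaps stand out.
std::vector<std::uint8_t> paintLandclassTexture(
    const std::vector<LandClass>& grid, std::size_t width, std::size_t height,
    const std::map<LandClass, RGBA>& palette)
{
    assert(grid.size() == width * height);
    std::vector<std::uint8_t> pixels;
    pixels.reserve(grid.size() * 4);
    for (LandClass lc : grid) {
        const auto it = palette.find(lc);
        const RGBA c = (it != palette.end()) ? it->second : RGBA{255, 0, 255, 255};
        pixels.insert(pixels.end(), {c.r, c.g, c.b, c.a});
    }
    return pixels;
}
```

Linear features such as roads would simply be drawn into the same buffer afterwards, which is why no geometric clipping against the terrain mesh is needed.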

What we could do is identify which hooks are needed to make this work and provide those via the Canvas system: Canvas textures can already be placed in the scenery, so there should be very little needed in terms of placement-specific attributes, and the corresponding code should be available in SimGear/FlightGear already.

The patch required to modify FlightGear already uses shaders and effects; it is mostly about exposing additional parameters to the shaders.

1. Paul Surgeon. Scenery engine features.

2. Noah Brickman. Overlay Plane.

3. Curtis Olson (Fri, 25 Jan 2008 07:51:41 -0800). Replace fg visualization with streaming video. http://www.mail-archive.com/flightgear-devel@lists.sourceforge.net/msg15459.html

4. Thomas Albrecht. Generating ground textures on the fly?

5. James Turner. Generating ground textures on the fly?

Native Windows

Note: People interested in working on this may want to check out the following files:

Currently, all placements are within the main FlightGear window. However, there has been talk about supporting additional Canvas placements, such as osgviewer placements, to help generalize our window management routines so that a canvas can be rendered inside a dedicated OS window:

Support multiple views/windows: Currently the GUI can only be placed inside one view/window (see Docs/README.multiscreen) but it would be nice to be able to move windows between views.[1] — Thomas Geymayer

I have just been trying out the multiple screen feature in FG. I found that the GUI camera (including the menu bar, hud and 2D panel) appears in only one of the windows. Is there any way I can make the GUI to appear in all the windows? Actually I want to be able to view the hud and 2D panel in all the windows.[2] — Kavya Meyyappan

There's a limitation in Plib that forces the GUI to be drawn on one window.[3] — Tim Moore

I think you have just summarized all the limitations of the FlightGear multi-camera/view/display system. I know that in the case of menus, hud, 2d instrument panels, there would need to be some significant code restructuring to allow these to be displayed on other windows.[4] — Curtis Olson

Good thing to have!!! Just still support graphics context on different screens/displays too ...[5] — Mathias Fröhlich

It can be solved by using multiple osg windows to contain whatever GUI solution we go with - canvas, osgWidget or PUI-port.

Or to put it another way - the actual hard part is running the widgets in the main OpenGL window - which *is* a requirement for full-screen apps and multi-monitor setups. (Some people have claimed otherwise, but I believe we need the option of 'in-window' UI for many cases). So, this is a desirable feature, but doesn't dictate the choice of GUI technology. And can be done as a separate step from replacing PLIB.[6] — James Turner

1. Thomas Geymayer (07-30-2012). Switching from PUI to osgWidget.

2. Kavya Meyyappan (Fri, 19 Mar 2010 03:31:50 -0700). [Flightgear-devel] Help needed with multi-screen.

3. Tim Moore (Sat, 20 Mar 2010 01:42:31 -0700). Re: [Flightgear-devel] Help needed with multi-screen.

4. Curtis Olson (Fri, 19 Mar 2010 08:36:22 -0700). Re: [Flightgear-devel] Help needed with multi-screen.

5. Mathias Fröhlich (Sat, 28 Jun 2008 00:05:19 -0700). Re: [Flightgear-devel] RFC: changes to views and cameras.

6. James Turner (Wed, 25 Jul 2012 02:28:42 -0700). Switching from PUI to osgWidget.

Placement/Element for Streaming: Computer Vision

Note: There seem to be two main use-cases discussed by contributors:

I am using the http stream feature to capture videos with ffmpeg. It is a great feature! — Adam Dershowitz (Aug 17th, 2015). [Flightgear-devel] httpd stream question.

What is the current suggested easiest way to capture images and videos from FlightGear on a Mac?

It uses the same last-camera-callback technique and now supports mjpeg streaming, too. — Torsten Dreyer (May 30th, 2014). Re: [Flightgear-devel] Saving Videos.

The problem is not the decoder but the encoder. I don't have a fast-enough real-time video encoder that lives happily in the FG main loop. I have experimented with ffmpeg which was promising, but it ended up on the very bottom of my backlog :-/ We can do MJPEG stream, try to use /screenshot?stream=y as the screenshot url. MJPEG is ugly and a resource hog but works reasonably well for image sizes of probably 640x480. Scale down your FG window and give it a try. — Torsten Dreyer (Oct 12th, 2015). Re: [Flightgear-devel] phi interface updates.
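For a computer-vision client consuming the MJPEG stream mentioned above, one simple way to extract individual frames is to scan the received bytes for the JPEG start-of-image (0xFF 0xD8) and end-of-image (0xFF 0xD9) markers instead of parsing the multipart HTTP headers. The sketch below only shows that splitting step; fetching the data from the /screenshot?stream=y URL is left out, `extractJpegFrames` is a hypothetical name, and the simple scan assumes the frames carry no embedded thumbnails (whose own markers would confuse it).

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

using Bytes = std::vector<std::uint8_t>;

// Extract complete JPEG frames from buffered MJPEG stream data by looking for
// the SOI (FF D8) and EOI (FF D9) markers. Consumed data (including multipart
// headers between frames) is removed from 'buffer'; an unfinished frame is
// kept so that the next call can complete it once more data has arrived.
std::vector<Bytes> extractJpegFrames(Bytes& buffer)
{
    std::vector<Bytes> frames;
    bool in_frame = false;  // true after an SOI marker without a matching EOI
    std::size_t start = 0;  // offset of the current frame's SOI marker
    for (std::size_t i = 0; i + 1 < buffer.size(); ++i) {
        if (buffer[i] != 0xFF)
            continue;
        if (!in_frame && buffer[i + 1] == 0xD8) {
            in_frame = true;
            start = i;
            ++i;  // skip the second byte of the marker
        } else if (in_frame && buffer[i + 1] == 0xD9) {
            frames.emplace_back(buffer.begin() + start, buffer.begin() + i + 2);
            in_frame = false;
            ++i;
        }
    }
    // Keep an unfinished frame, or a lone trailing 0xFF that may be the first
    // half of the next SOI marker; everything else has been handled.
    std::size_t keep_from = buffer.size();
    if (in_frame)
        keep_from = start;
    else if (!buffer.empty() && buffer.back() == 0xFF)
        keep_from = buffer.size() - 1;
    assert(keep_from <= buffer.size());
    buffer.erase(buffer.begin(), buffer.begin() + keep_from);
    return frames;
}
```

Each returned frame is a complete JPEG image that can be handed to any JPEG decoder before further image processing.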